Interesting use of technology.

https://www.youtube.com/watch?v=VtttfrmfMZw

https://arstechnica.com/gaming/2017/08/nvidia-remedy-neural-network-facial-animation/?amp=1

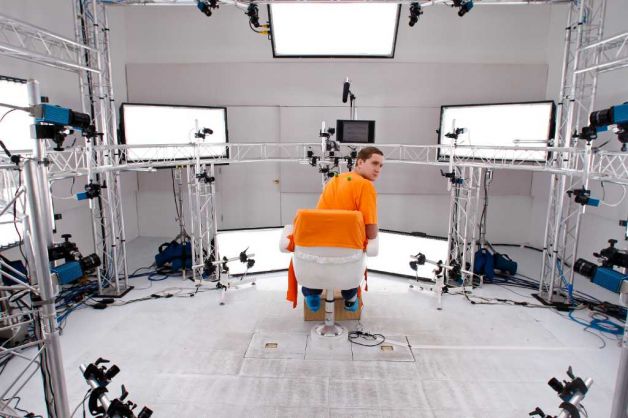

Remedy, the developer behind the likes of Alan Wake and Quantum Break, has teamed up with GPU-maker Nvidia to streamline one of the more costly parts of modern games development: motion capture and animation. As showcased at Siggraph, by using a deep learning neural network (run on Nvidia's costly eight-GPU DGX-1 server, naturally), Remedy was able to feed in videos of actors performing lines, from which the network generated surprisingly sophisticated 3D facial animation. This, according to Remedy and Nvidia, removes the hours of "labour-intensive data conversion and touch-ups" that are typically associated with traditional motion capture animation.

Aside from cost, facial animation, even when motion captured, rarely reaches the same level of fidelity as other animation. That odd, lifeless look seen in even the biggest of blockbuster games is often down to the limits of facial animation. Nvidia and Remedy believe their neural network solution is capable of producing results as good as, if not better than, those produced by traditional techniques. It's even possible to skip the video altogether and feed the neural network a mere audio clip, from which it's able to produce an animation based on prior results.
