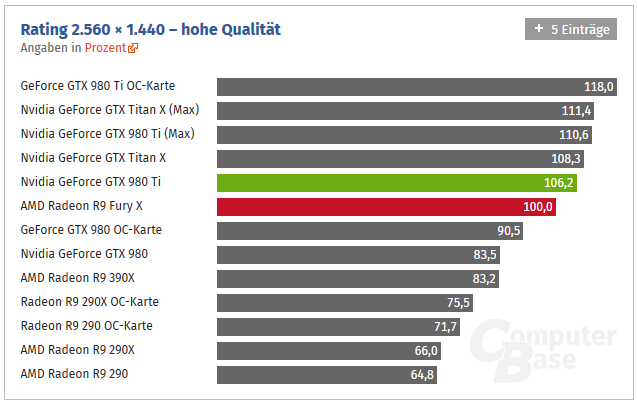

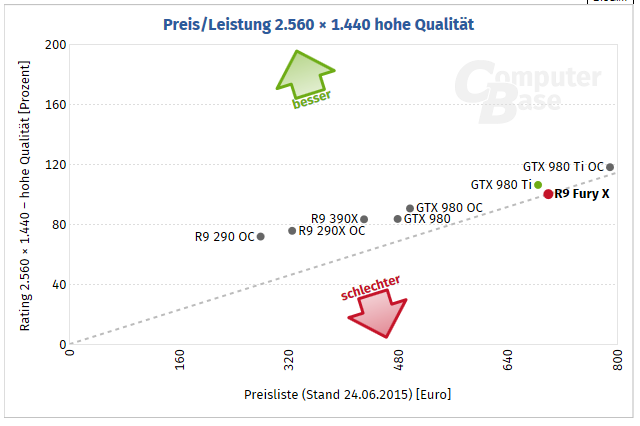

Now I don't regret buying a reference 980 Ti at launch. These benchmarks are close, especially at 4K, but the Fury X is awkwardly positioned overall. It delivers most of the 980 Ti's performance, but not all of it, and it has only 4 GB of VRAM, so how future-proof is it? The Fury X needs to be $599; at that price, it makes sense. Custom 980 Tis are priced into the stratosphere, so at six hundred bucks the Fury X will sell for sure.

To the people complaining about bad drivers on the AMD side: there's some truth to that, but as a 980 Ti owner, I get several TDRs (Timeout Detection and Recovery driver resets) daily with Chrome, plus phantom Witcher 3 crashes. NVIDIA is a lot better about supporting new games on release day, but let's not pretend that either green or red delivers great drivers.