AMD's Raja Koduri says that we need 16,000x16,000 at 240Hz for "true immersion" in VR

- Thread starter Enter the Dragon Punch

- Start date

Trogdor1123

Member

That would be great!

Saucycarpdog

Member

AMD might not even exist by the time this comes to fruition.

These slides ignore foveated rendering for a good reason. AMD can't bet on the tech maturing at any specific point in time.

Foveated rendering will definitely be a thing by the time we have 16Kx16K VR.

ichtyander

Member

I wonder if it'll be easier to build custom fragmented displays in a seamless grid of varying resolutions, so the 16k panel is in the center of the display, filling enough physical space to make the center of the image super sharp, while the other fragments technically support only lower resolutions and are positioned at the outer sides.

Not to mention all sorts of syncing and rendering problems, but the crispness would instantly be ruined the moment your eye wanders away from the center. Which is kind of a problem anyway as I understand it, because of how the lenses work, are made and the whole tight physical space between your eyes, lenses and the display. If I'm not mistaken (haven't tried any headset yet), with the current technology, you can't really look around with your eyes while keeping your head static because you'll just get a blurrier image. In that regard, this Frankenstein display might work out well, if it's even practical.

On the other hand, light field displays, shooting images into your retina etc. are somewhat different approaches to displaying images, you don't always think of display resolutions in the same way, might be some trickery to show dynamic resolutions, so there's that I guess, but I'm thinking it's still a ways off.

The parts you focus on are higher resolution while the periphery of your vision is lower res. So the part you are looking at is 16,000 x 16,000 while the rest is much lower.

Not a chance if we're talking single GPU. If we consider a 980Ti as sufficient for 4K at 60Hz (hint: it's not) then we would need at least 16x the power. And that's just a rough, game-agnostic estimation. Real world requirements are usually much more unforgiving.
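
For a rough sense of scale, the raw pixel throughput of the two targets can be compared directly. This is only a sketch: it assumes GPU cost scales linearly with pixels drawn per second (real workloads rarely do), it counts a single 16,000x16,000 image rather than one per eye, and the function name `pixel_rate` is made up for illustration.

```python
def pixel_rate(width: int, height: int, hz: float) -> float:
    """Pixels that have to be produced per second for one image stream."""
    return width * height * hz


# Baseline from the post: 4K UHD at 60 Hz.
baseline = pixel_rate(3840, 2160, 60)

# Target from the thread title: 16,000 x 16,000 at 240 Hz (single image, assumption).
target = pixel_rate(16_000, 16_000, 240)

# Assumption: required GPU power is proportional to pixels per second.
ratio = target / baseline

print(f"baseline: {baseline:.3e} px/s")
print(f"target:   {target:.3e} px/s")
print(f"ratio:    {ratio:.1f}x")
```

Under those assumptions the ratio comes out at a bit over 120x, so "at least 16x" is a very loose lower bound.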

If we're both still around, I bet you that in 8 years time this will be a possibility.

How far have we come along in this method?

I was just pointing out how full of shit AMD is when they pretend foveated rendering doesn't exist. Because that's where the >1 PFLOPS figure comes from.

Is it usable in all cases?

ThoseDeafMutes

Member

But real life is 120,000x120,000 at 6000Hz

1 / Planck time frames per second

So, we're talking about 500 PS4's duct taped together?

....I'm going to need more tape.

Edit: for context, my money is on the next round of consoles being roughly 12x as powerful as the PS4. That'd be overkill for 1080p visuals, but not so much for 4k and VR.

We didn't even get that this gen, and that was following an 8 year cycle. I don't see it happening.

1 / Planck time frames per second

Isn't the number of Planck time units in a second > the number of seconds that have elapsed since the beginning of the universe?
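
A quick back-of-the-envelope check of that comparison, using rounded textbook values (Planck time of about 5.39e-44 s, universe age of about 13.8 billion years). The constant names are just for illustration.

```python
# Approximate physical constants (rounded values).
PLANCK_TIME_S = 5.391e-44            # Planck time in seconds
AGE_OF_UNIVERSE_YEARS = 13.8e9       # approximate age of the universe
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# How many Planck-time "frames" fit into one second.
planck_units_per_second = 1 / PLANCK_TIME_S

# How many seconds have elapsed since the Big Bang.
seconds_since_big_bang = AGE_OF_UNIVERSE_YEARS * SECONDS_PER_YEAR

print(f"Planck units per second: {planck_units_per_second:.3e}")
print(f"Seconds since Big Bang:  {seconds_since_big_bang:.3e}")
print(planck_units_per_second > seconds_since_big_bang)
```

So yes: on the order of 10^43 Planck units per second versus on the order of 10^17 seconds since the beginning of the universe.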

Sword Of Doom

Member

by that time, why not just get a chip in my brain and surf the VR world like in all the cyberpunk things ever

Bypass screens altogether. Just tap straight into my brain

For then VR will already be obsolete and holograms will be the new thing, but of course we will need 20k 500fps holograms to have them look like real things, so we will have to wait again

Holograms wouldn't be very immersive though, right? I mean, yeah, I'm sure porn companies could find some uses that would tick that box, but VR would still be the go-to tech for immersion in a space you're actually trying to inhabit and/or navigate.

clem84

Gold Member

Where is he getting 240hz from? I thought Oculus did psychological studies that found ~88hz was where people stopped unconsciously noticing flickering. 16x16k per eye sounds about right though for "true" vr.

More is always better, but 4K in each eye at 120Hz should be more than enough to be immersed. Saying we need 16K at 240Hz is insane IMO.

Pfft, you can't make a realistic hummingbird simulator at 120hz, bro.

More is always better but, 4K in each eye at 120hz should be more than enough to be immersed. Saying we need 16K at 240hz is insane IMO.

Indeed, those are just numbers thrown out there to make noise and hype around VR and AMD's GPU future. No one really knows what it would take to make VR like real life, a thing which I think isn't even possible, also because the biggest issues are not resolution and frame rate but field of view, movement and controls. Plus, where is the smell and weather feedback? Those would be essential for true immersion.

POWERSPHERE

Banned

You'd hope there's somewhere to go... These headsets will be like CRTs in 5 years

30yearsofhurt

Member

OP needs to correct title.

AMD is in for a rude awakening when they get on Intarwebz for the first time and learn about foveated rendering.

Spoken like a true "Chumpion" ...kidding but do you really think they are clueless on the latest and greatest upcoming technology?

Graphics Horse

Member

Hoping lightfield displays will be practical by then, but they need even higher res than that if done with a panel display.

I was wondering if we could eventually have extremely fast lcd pinholes opening and closing all over the display while you pulse corresponding oled pixels behind each one for a few microseconds, but I'm not sure it would work.

Nvidia have a cheap version with static pinholes but the resolution takes a big hit.

http://youtu.be/UYGa6n_0aUs

Robottiimu2000

Member

I laughed out loud.

Large and intelligent changes in both GPU and software architectures will be the key to driving up performance while lowering power consumption, which is exactly what AMD's Radeon Technologies Group is working towards.

omg AMD will save VR

If VR really takes off, then the current HMDs are what the 3D graphics of 1996 are to us now. Cool and all, but in 20 years...

Nah. We'll look back on it more like mode 7

More is always better but, 4K in each eye at 120hz should be more than enough to be immersed. Saying we need 16K at 240hz is insane IMO.

For me honestly 1080p@75Hz with cheap optics was all I needed to be pretty damn immersed, and I think 1200p@90Hz with great optics will be incredible. Of course it will get better and better, but it's already at the point where it is extremely immersive, despite low resolutions. But yeah, for it to be completely indistinguishable from reality we've got a looong way to go.

poodaddy

Member

Make that wireless while you are at it

This is actually the biggest one for me. I mean, I understand that you need a higher display resolution and frame rate to achieve "immersion", but I don't give a good goddamn about immersion; I want to have fun and not look like a 90's cyber punk hack villain doing it. I'd be happy with 3k 90hz if it's wireless.

unholyrevenger72

Banned

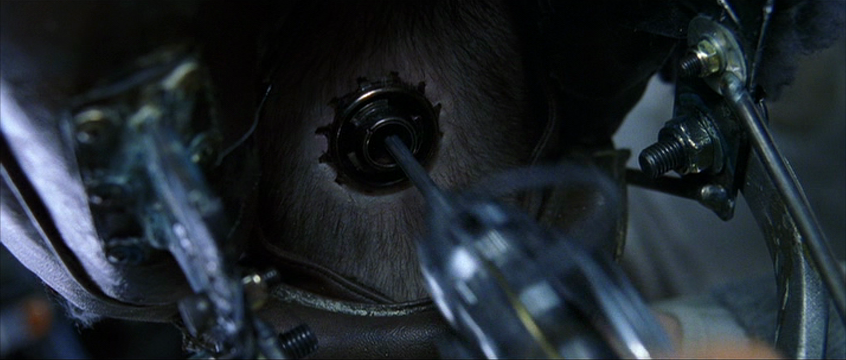

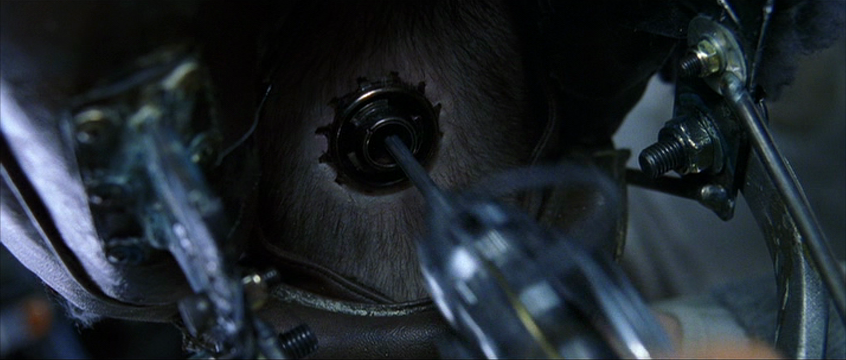

We'll have this

before 16kx16k

tru

turns out it's 8640p (15,360 × 8,640 progressive scan; aka "16K QUHD")

my bad i guess, but i thought VR is a square on each side anyways

das a lot of fuckin pixels tho

Can't remember about the PSVR but the Oculus Rift is a rectangle that's slightly higher than its width and the HTC Vive is either an oval or a circle. There's a diagram that shows them all floating around somewhere.

AcademicSaucer

Member

But real life is 120,000x120,000 at 6000Hz

Our eyes are really shitty tho. We have a blind spot; if any camera had one, we would throw it out of the window.

the-pi-guy

Member

We didn't even get that this gen, and that was following an 8 year cycle. I don't see it happening.

I don't think that the length of last gen doesn't matter, because the last gen consoles started off way overpowered.

Let's say consoles can only double every 5 years to keep at a certain price point.

1->(5 years) -> 2 -> (5 years) -> etc.

This got further messed up by releasing something more powerful early.

4-> (5 years) -> 20->

In order to hit that price point, they'd have to release something less powerful.

Of course, it becomes more complicated to consider because Sony/MS are now just using relatively standard hardware, instead of specialized stuff.

Also adding in how Moore's Law stands up.

Gamer79

Predicts the worst decade for Sony starting 2022

There are only 3 ways this is going to happen.

1. Reinvent the processing power needed for said resolution. At current rates you would need a supercomputer for 16,000x16,000.

2. Make cloud computing work. The sky is the limit if you can get millions of computers that all do just a bit of processing, let alone dedicating serious resources.

3. A quantum leap in how computers work. To have a computer that can run near one petaflop you're talking about thousands if not tens of thousands of dollars. Quantum computing?

Rushersauce

Banned

Of course there's people shitting on AMD.

EventHorizon

Member

Spoken like a true "Chumpion" ...kidding but do you really think they are clueless on the latest and greatest upcoming technology?

It is difficult to get a man to understand something when his salary depends upon his not understanding it.

- Upton Sinclair

It is in AMD's best interest to hype up the demands of VR processing power as much as possible. We do still have a long way to go, but not as far as they would have you believe. Also note that at some point you start to hit a point of diminishing returns where most people simply won't care. For example I don't need anywhere near the capabilities that audiophiles desire in order to enjoy music and sound. I could train myself to tell the difference but to me that seems more like a choice than a necessity.

There are only 3 ways this is going to happen.

1. Reinvent the processing power needed for said resolution. At current rates you would need a supercomputer for 16,000x16,000.

2. Make cloud computing work. The sky is the limit if you can get millions of computers that all do just a bit of processing, let alone dedicating serious resources.

3. A quantum leap in how computers work. To have a computer that can run near one petaflop you're talking about thousands if not tens of thousands of dollars. Quantum computing?

1) No doubt we will need greater processing power, but you don't have to brute force it with processing power alone

2) No amount of cloud computing can get around the latency requirements of running at 240Hz. That only gives you around 4ms per frame. The latency on the connection alone would be more than the processing time allotted to you. In addition, any solution needs to be economical. If it required a ton of computers on the back end working at 100% capacity then it would be too expensive.

3) Once again we won't have to brute force this.
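
The latency point in 2) is easy to sanity-check. The sketch below follows the framing of that post, where the network round trip has to fit inside a single frame; the 20 ms round-trip figure is a made-up illustrative value, not a measurement, and both function names are hypothetical.

```python
def frame_budget_ms(hz: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / hz


def remaining_render_ms(hz: float, round_trip_ms: float) -> float:
    """Render time left after the network round trip is subtracted.

    A negative result means the round trip alone already exceeds the frame budget.
    """
    return frame_budget_ms(hz) - round_trip_ms


# Hypothetical round trip to a cloud data center (assumption, not measured).
ASSUMED_ROUND_TRIP_MS = 20.0

for hz in (60, 90, 240):
    budget = frame_budget_ms(hz)
    left = remaining_render_ms(hz, ASSUMED_ROUND_TRIP_MS)
    print(f"{hz:>3} Hz: budget {budget:.2f} ms, left after network {left:.2f} ms")
```

At 240Hz the budget is about 4.17 ms, so with any round trip above that there is nothing left for rendering under this framing.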

As others have pointed out, eye tracking with foveated rendering lets us drastically cut the processing demands. In fact it is quite likely that because of foveated rendering with eye tracking, VR will eventually be able to achieve a higher total perceived resolution than traditional monitors. Although it is possible to use the same technologies with monitors, it would be much easier to implement on someone wearing VR goggles.
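
To give a feel for how much foveated rendering could cut the pixel count, here is a toy model. Everything in it is an assumption for illustration: a square 16,000-pixel-wide image spread evenly over a 100 degree field of view, a 20 degree square region around the gaze point rendered at full density, and the rest rendered at a quarter of the linear resolution. Real headsets have lens distortion and smoother falloff, so treat the result as an order-of-magnitude guess only.

```python
def foveated_pixel_count(
    full_res: int,
    fov_deg: float,
    fovea_deg: float,
    periphery_scale: float,
) -> float:
    """Pixels actually shaded per frame under a simple two-zone model.

    full_res:        side length of the full-resolution image in pixels
    fov_deg:         field of view covered by the image (assumed linear in pixels)
    fovea_deg:       side length of the full-detail square around the gaze point
    periphery_scale: linear resolution scale for everything outside that square
    """
    fovea_px = full_res * fovea_deg / fov_deg        # side of the sharp region, in pixels
    fovea_area = fovea_px ** 2                       # shaded at full density
    periphery_area = full_res ** 2 - fovea_area      # remaining area of the image
    return fovea_area + periphery_area * periphery_scale ** 2


# Hypothetical parameters, chosen only to illustrate the idea.
full = 16_000 ** 2
foveated = foveated_pixel_count(16_000, fov_deg=100, fovea_deg=20, periphery_scale=0.25)

print(f"full frame:     {full:,} px")
print(f"foveated frame: {foveated:,.0f} px")
print(f"fraction:       {foveated / full:.2%}")
```

With these made-up numbers only about a tenth of the pixels need to be shaded, which is why the brute-force figures look so much scarier than what eye tracking would actually require.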

n0razi

Member

Where is he getting 240hz from? I thought Oculus did psychological studies that found ~88hz was where people stopped unconsciously noticing flickering. 16x16k per eye sounds about right though for "true" vr.

Many GAFers have 144-165Hz gaming monitors and can see a difference

Because the Earth is square

This just horribly depressed me, and I don't even think it was intended as a reference....

All better now.

I believe him. Fortunately you don't need any of that to just have a really cool experience.

Rend386, some shutterglasses, and a slightly mutilated power glove was REALLY cool, and I'm pretty sure it fell slightly short of those specifications.

Indeed those are just numbers thrown out there to make noise and hype around VR and AMD GPU future, no one really knows what it would take to make VR like real life

Actually, purely in terms of the visual system, these numbers are pretty reasonable.

It's where you reach the physical limits of human visual perception.

Now, obviously you can have worthwhile VR at a much lower standard, but the eventual goal should be right around what he stated -- and this has been known in the VR community for a while.

And yeah, the way we will achieve that is by using eye tracking.

You know, the pessimist in me is wondering if eye-tracking shenanigans is going to be one of those cost-cutting standards that turns around and bites us in the ass a few decades down the road when you can brute force the 'proper' way to do things, but can't really steer the ship in that direction because of standardization and precedent.

Like non-square pixel aspect ratios for movie content (hi, everything up to DVD) or 29.97 NTSC being a standard. The latter wasn't so much a problem with power as it was a problem with interacting with existing infrastructure, but the point stands - it's annoying to deal with.

(With eye tracking, the obvious drawback would be recorded footage, not realtime usage)

I understand the concern, but I can't really think of a way it would manifest. Eye tracking as a means for reducing rendering load doesn't affect the content, just the way it is presented.

Well, like a game (but even more so) recording and then playing back VR footage is basically impossible.

I should learn to make edits prior to posting :V

Also when hardware is finally capable of letting us stream VR content, which isn't that much longer down the road from it being feasible. But then again, the experience of that first party is paramount anyway.

edit: To be clear, I meant twitch etc. streaming, as in broadcast entertainment, not realtime play streaming like Steam Link etc.

malboroking

Banned

So, we're talking about 500 PS4's duct taped together?

....I'm going to need more tape.

Edit: for context, my money is on the next round of consoles being roughly 12x as powerful as the PS4. That'd be overkill for 1080p visuals, but not so much for 4k and VR.

You think the PS5 is going to have a 22 Teraflop GPU?
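
The 22 teraflop figure follows directly from the PS4 GPU's commonly cited peak of 1.84 TFLOPS, and the same arithmetic shows where "500 PS4s" lands relative to a petaflop. A minimal sketch, assuming peak FLOPS simply add up (they don't in practice):

```python
PS4_TFLOPS = 1.84  # commonly cited peak single-precision throughput of the PS4 GPU

# "Roughly 12x as powerful as the PS4"
twelve_x_tflops = 12 * PS4_TFLOPS

# "500 PS4's duct taped together", converted to PFLOPS.
# Assumption: peak throughput scales perfectly across machines.
five_hundred_ps4_pflops = 500 * PS4_TFLOPS / 1000

print(f"12x PS4:  {twelve_x_tflops:.2f} TFLOPS")
print(f"500x PS4: {five_hundred_ps4_pflops:.2f} PFLOPS")
```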

EventHorizon

Member

I should learn to make edits prior to posting :V

Also when hardware is finally capable of letting us stream VR content, which isn't that much longer down the road from it being feasible. But then again, the experience of that first party is paramount anyway.

edit: To be clear, I meant twitch etc. streaming, as in broadcast entertainment, not realtime play streaming like Steam Link etc.

Streaming won't be a problem. You wouldn't be streaming at the rendered resolution and FPS anyway due to bandwidth limitations.

I think for streaming, setups like this will be the most viable option, and those won't be any more expensive than rendering a traditional monitor view of the scene (and won't use eye tracking obviously).

So having done a bit more research into human visual acuity... resolution requirements for absolute realism are quite a bit higher still.

Because

1. 20/20 vision is actually the lower limit of 'healthy visual acuity'.

2. Our FOV is very large... 180 degrees without eye rotation, up to 270 degrees with.

3. We process certain types of visual information better than other types... so while your generic scene is ok with the standard retina level resolution, information like 2 thin black lines parallel to each other with a gap can be resolved with a much higher level of detail, as can 2 thin black lines touching each other that are one pixel offset at the point they're 'touching'.

4. Our visual motion acuity is also higher for objects that are small, high contrast and fast moving - things like tennis balls and golf balls can be perceived smoothly, despite the equivalent need for 200-300 fps for it to animate as smoothly on a screen. Also, at the top end of visual motion acuity, people that have been selected and trained for strenuous visual motion tasks... e.g. fighter pilots, have been recognized with the ability to perceive differences of up to 2ms... or 500 fps. That again applies to small, high contrast, fast moving objects (e.g. enemy fighter jets).

So... raw absolute requirements are closer to something like 30k x 30k x 300 Hz for most people, and higher still if you're exceptional.

With that said... there are some effects that the raw figure doesn't account for, namely temporal resolution. That is to say, we don't perceive each 'frame' of an image like a computer renders it; rather, our retinal receptors fire in a stochastic manner with significant overlaps in timing between each firing. Additionally our eyes are microsaccading and saccading constantly (micro visual motion + larger broader visual motion), which combine to help create a perception of higher visual acuity than our visual system is actually receiving.

A lot of that information is held in temporary memory to build the perception of resolution... so when the CV1 is rendering at 1080x1200 per eye @ 90Hz - the temporal resolution is good enough that many people claim that they're seeing a higher resolution screen than they are.

Plus, throw foveated rendering on top, and you can reduce absolute computational requirements significantly.

Taking all that into account, we can reasonably expect to arrive at the computational requirements for absolutely lifelike VR (visually speaking anyway) at around 2025 for the lower bound estimate to 2035 for the upper bound estimate.

That's a pretty rough estimate - but it at least gives us an idea of how long it'll take for humanity to go mostly VR

EventHorizon

Member

I think for streaming setups like this will be the most viable option, and those won't be any more expensive than rendering a traditional monitor view of the scene (and won't use eye tracking obviously).

Unfortunately that could not use the rendered output the game produced. An entirely separate image would need to be produced.

However, that brings up an interesting point. Could future games and hardware allow for a separate GPU to produce content specifically for streaming? Game streaming is big business for the streamers as well as free advertisement for games. No doubt that would be enough to warrant extra development and hardware costs to make VR streaming content as enticing as possible.

I think for streaming setups like this will be the most viable option, and those won't be any more expensive than rendering a traditional monitor view of the scene (and won't use eye tracking obviously).

Holy crap, that's cool.

But yeah, what I meant was essentially just footage of the rendered game output. The same way that footage of games on YouTube does not have the player agency component (...obviously) and you're just following along with what the person who recorded the footage did, you obviously wouldn't have the same motion tracking/eye tracking experience. The eye-tracking solution will reduce the image quality of a large portion of the footage at all times, so it's not ideal for posterity and all that. Like I said, on one hand, it's the experience of the first party in real time that's paramount (especially for VR, durr Falk), but on the other, when we finally get to the point we can brute-force the necessary processing power half a century down the road, things may be so mired in precedent and standardization, like the two examples I brought up, that the 'proper way' has a slower adoption rate.