Reizo Ryuu

Member

> So not a peep from devs yet? Do we even know if there will be a day 1 patch?

Day 1 is today, but steam doesn't update until 6 hours from now; they'll probably start up communication when the game is officially out everywhere.


Holy tinfoil hat batman. I'm pretty sure if they had an AMD agenda they would go with a category more alluring than fucking 1080p Ultra + RT lmao.

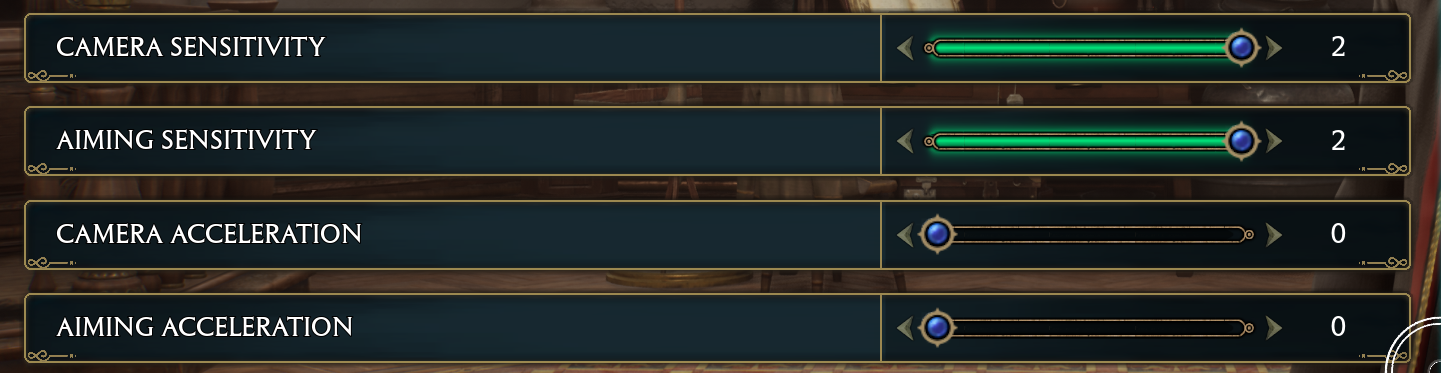

Playing with a controller? Turn off the camera acceleration settings in the options if you haven't already and max the camera sensitivity.

I'm also playing on a 3080 (with a 5800X3D), and have done all the above like you. That + capping the FPS to 75 and I would say the game is 95% playable now. I have everything on High, except Materials/Textures/Foliage and NPC Density, which are on Ultra.

> Yeah, it's a massacre for these GPUs with lower vram capacity.

How nice i have 8gb 3060 ti and a 3070 with 8gb.

Camera sensitivity settings helps? That's a new one. I will definitely try that too thanks.

> Make sure your raytracing Shadows isnt on by accident, mine was on and it tanked my performance to 15fps at times, I thought I disabled all RT but Shadows was somehow turned back on.

Yes I turned rtx off right after I got to the castle. I'm pretty sure about that.

> Camera sensitivity settings helps? That's a new one. I will definitely try that too thanks.

It sounds silly I know. It may not make an actual performance difference, and its just a combination of all the other changes making it better but I feel its best to be thorough on every setting in the off chance it helps with the 'feel' of playing the game, and those camera settings definitely make using the camera feel better. I hope you get performance to a level you're happy with.

> My rig is possibly the best combination in the gaming arena (13900K + 4090 + 32GB 7400MHz DDR5 + PCI-e 4.0 SSD), but the unfortunate truth is the game is a stutterfest. Apparently, shader compilation at the beginning only compiles the crucial assets, which doesn't help overall performance.
>
> Tried everything so far including FG On vs. Off, DLSS On vs. Off, engine.ini, Gameusersettings.ini etc... Nope, nothing works, and that begs the question: is all this troubleshooting worth it when I am getting older day by day? I'm very close to going pure console gaming very very fucking soon, because if I cannot play the game even with the best hardware, Nvidia can shove their precious graphics cards up their asses unless they either help developers to implement impeccable Ray Tracing features along with the optimization or make it mandatory. Because this will only hurt their sales in the future if developers keep ignoring PC gaming with brute force optimization now that they can hide that with features like DLSS + Frame Generation.
>
> Sad and angry.

It's understandable that you feel this way. I personally enjoy tinkering with settings, reading about other people's experiences and what they have tested, but for every me there's like 1000 like you who just want to play the fucking game without silly performance problems that should have been resolved way before early access. Games are becoming more expensive, GPUs are more expensive, and it's not acceptable that we get half-assed optimisation on release with a 'we'll fix it later' attitude.

> It hasnt. DLSS still exists. Hogwarts and Forspoken are not good pc ports nor should they be considered the standard going forward.

Dead Space is also vram limited on 3080. It is just the beginning. We will get more and more games that need more vram than 8-10.

This is a trick also on console. It obviously doesnt help performance but makes the camera feel much more responsive.



> Dead Space is also vram limited on 3080. It is just the beginning. We will get more and more games that need more vram than 8-10.

That game is a stutter mess. Not exactly a good example either, tho I've seen ppl with modest PCs running it fine. Ofc 4k 8gb is dead. 10 gb is at the limit tho, but should be fine for many years if they use dlss and lower settings, which lets be honest, no one should just straight up go for max, because visually theres rarely any difference. Most PC gamers know this, which is why u'll only see ppl on gaf or 4080+ owners complaining about vram.

I wouldnt worry about RTAO and RT shadows. They are broken and kinda useless without RTGI. These devs have no idea how RT works and they just quickly slapped that shit on at the end 100%. The game's art was designed without RT in mind. You can keep reflections up tho cuz they screwed SSR as well.



> Watch Digital Foundry’s video. The behaviour is not normal. We can’t point fingers at an architecture and just sweep under the carpet how incompetent devs are for PC ports lately.

I mean we will get more and more unoptimized games of this kind. I have already seen the video.

> Yeah it’s clearly broken and the devs don’t know what they are doing on PC or RT. It’s a steaming pile of shit in performance and here we are discussing memory limits.. I mean yeah, if it looked like it was optimized and visually pushing for that VRAM usage, then sure, but it really doesn’t. There’s a wave of shit ports on PC lately.
>
> If a game like Plague Tale Requiem, which is DRIPPING with high quality textures at every pixel, doesn’t choke a 3070, while yours looks like a PS4 game and does, then you fucked up.
>
> Dead Space memory management quirks found by Digital Foundry, Hogwarts Legacy, Callisto Protocol, Plague Tale before patch, Forspoken.. what a bad wave of ports. I hope Returnal is not on that list.

I just hope UE5 solves this shit.

> How nice i have 8gb 3060 ti and a 3070 with 8gb.

I also have a 3070, everything on high at 1440p with dlss set to quality, I get about 100 frames in the open world. Nothing to scoff at

> I also have a 3070, everything on high at 1440p with dlss set to quality, I get about 100 frames in the open world. Nothing to scoff at

I have a 3070. I'm curious if my CPU is the issue? I have 3700x.

> I have a 3070. I'm curious if my CPU is the issue? I have 3700x.
>
> Also, are you running on high or medium or a mix of both?

You're on a dead platform like me. I had a 3700x, I upgraded to a 5600X, get like 10% better sometimes. If you want the best upgrade you can I would get the 5800X3D. It's as good as all the current new CPU's on new platforms. But after that, it's new MOBO time.

> You're on a dead platform like me. I had a 3700x, I upgraded to a 5600X, get like 10% better sometimes. If you want the best upgrade you can I would get the 5800X3D. It's as good as all the current new CPU's on new platforms. But after that, it's new MOBO time.

Just 10% better performance? Should I not wait in that case? Unless CPU is holding performance back massively.


> Just 10% better performance? Should I not wait in that case? Unless CPU is holding performance back massively.

The 5800X3D would be like 40% better.

A 5600X should be around 20% faster than a 3700X. Unless you are playing at 4K.

But then, it's the 3070 that is limiting your performance, not the CPU.

Also, remember that Zen likes fast memory.

> I have a 3070. I'm curious if my CPU is the issue? I have 3700x.
>
> Also, are you running on high or medium or a mix of both?

I recently upgraded to a 5700x, and I have 32gb ram. X470 mobo. I did a benchmark and it put it all on High, I may have turned the textures to medium but I dont think it did much. I might have put it back to high.

The 5600/5600X's are super cheap right now. But honestly, If I had the cash I would spring for a 5800X3D.

> The 5800X3D would be like 40% better.
>
> I may be off on my numbers.
>
> I run 3600 CL16 ram. Not sure what zkorejo has.

G.Skill Trident Z Neo Series 32GB (2 x 16GB) 288-Pin SDRAM PC4-28800 DDR4 3600 CL16-19-19-39 1.35V


> Ok the game run 98% flawlessly with rtx off (all 3 settings), almost zero stutter and no pop in at all.
>
> I'm not sure if i would call this a bad port, but i also have a 4000 series with framegen.
>
> Rtx seems to be what makes the game stutter a lot, at least in my case.

There is no RTGI right? So RT doesn't make much difference graphically I guess

> Anyone else having an issue with upscale sharpness always resetting itself?

Yeah. Pretty annoying changing it every start up.

> There is no RTGI right? So RT doesn't make much difference graphically I guess

It does make a difference imo. It's much more vibrant, as lights, shadows and reflections make everything look better. Especially Rtx AO. Reflections also make things look a lot more shiny but I can live without it. AO did make it seem much better to my eyes though.

And they are right, because in CPU bound scenarios, the frontend on AMD GPUs has a lower overhead than the NVidia frontend.

It has been this way, pretty much since Kepler, as NVidia simplified the frontend on Fermi, to make it use less power and less die space. But it also means it has to offload more driver work into the CPU.

But since no one uses ultra high end GPUs at 1080p, it doesn't really matter.

That's all good and well but they are the first outlet that I've seen take this approach to GPU benchmarks. It's bizarre and I don't think it's a coincidence given their following skews heavily towards the AMD side of things.

Sites like anandtech, techreport, tomshardware, guru3d, have been making CPU scaling benchmarks for close to 25 years now.

Anyone else having an issue with upscale sharpness always resetting itself?

Those are specific CPU benchmarks, no?

They were done for several reasons. Sometimes it was to test CPU scaling with new GPUs, sometimes scaling with memory, sometimes with CPU overclocking, etc.

In this case, what HU did is important, because if someone has a weaker CPU and can't upgrade, choosing a GPU that doesn't scale well would be very limiting to performance.

Now this is not so much for the people that can afford a 4090, since these can also afford a Zen4 or Raptor lake CPU.

But for people considering mid to low range GPUs, this is important.

From that perspective it's fair enough, but if that is indeed their intention then they should frame it as such. Plenty of people in the comments are saying their results don't match up with what they are experiencing on an identical GPU, most likely due to their odd choice of CPU.

They have done plenty of these CPU scaling tests, with several games. And they have explained this in some of their monthly Q&As.

One of the things they repeat constantly through the video is that this game has weird performance scaling. You can see that best when they talk about the test in Hogsmeade vs the Hogwarts Grounds. They also kept repeating that the CPU had very low utilization.

There is also the issue with the game applying settings that the user didn't choose. Like the one with the DLSS3.

Then there are the variations in what is running in the background, and in what memory is being used, both clocks and timings.

This is why if you go to more tech focused forums, people tend to look at several reviews. Because journalists will use different hardware configurations, different test methodologies, etc.

If they're going to purposefully go down that road then I'd at least like to see a couple of CPU scaling benchmarks.

What they have come up with is unhelpful for anyone but 7700x (or similar) owners because other than at 4k it doesn't provide an insight into how GPU's actually scale with the game.

> There is no RTGI right? So RT doesn't make much difference graphically I guess

I'm not the right guy to ask this type of question, i barely notice any form of rtx, even the most bullsh... emh "transformative" ones...

> Other CPUs will have similar scaling.
>
> But yes, a benchmark run with several CPUs with this game would be really nice, if they can make it.
>
> Consider just how much they have made already. This is 53 GPUs, multiplied by 1080p, 1440p and 2160p, then Medium, Ultra and Ultra+RT.
>
> And they run each benchmark 3 times, then average the result. This is a lot of work.

My math may be wrong but that is 1431 test runs?


> I think that's the right number. But what do I know

Each resolution was run at three settings, so 3 times 3 is 9. Each one of those was run three times. 9*3 = 27. Then 53 GPUs, so 53*27 = 1431. Maybe?
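For anyone who wants to double-check the run count, here is a quick sketch of the arithmetic in Python. The function name `total_runs` and its parameter names are made up for illustration; the inputs (53 GPUs, 3 resolutions, 3 presets, 3 passes per benchmark) are just the figures quoted in the thread, not anything confirmed by the reviewers.

```python
def total_runs(gpus: int, resolutions: int, presets: int, passes: int) -> int:
    """Number of individual benchmark passes for a full test matrix."""
    return gpus * resolutions * presets * passes


# Figures quoted in the thread (assumed, not verified):
# 53 GPUs; 1080p, 1440p, 2160p; Medium, Ultra, Ultra+RT; 3 passes each.
configs_per_gpu = 3 * 3             # resolution x preset combinations
runs_per_gpu = configs_per_gpu * 3  # each combination is run three times

print(runs_per_gpu)             # 27
print(total_runs(53, 3, 3, 3))  # 1431
```

So the 1431 figure holds as long as every GPU really was tested at every resolution and preset, which the thread assumes but does not confirm.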