...Even Cerny said it could be done. No one said it couldn't be done.

Come on now. A lot of people on GAF said that.

awesome, hope the x1 servers can provide this kind of power to devs.

Titans, yes.

Ok so the video keeps crapping out on me and I have just been able to see the title screen.

But I assume the systems composing this cloud are using GPUs... is that the case?

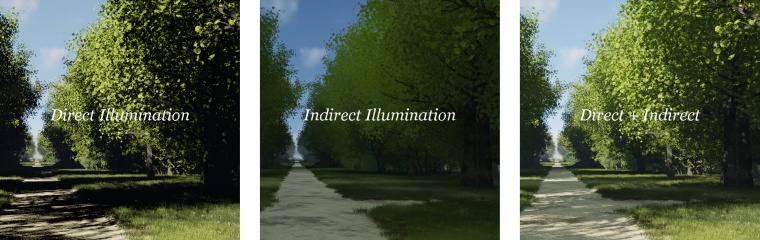

/dead

First realtime screenshot of cloud based indirect lightning

Yeah, no. I was specifically told that I was an idiot for believing that the cloud would ever be used to improve graphics.

Yep, it is definitely not going to replace hardware at this point, just augment it.

I like it!

It's not like everyone was guessing... the cloud servers do not render the frames for you; they just render certain data aspects and stream that data to the client to be added to the local rendering pipeline. It doesn't have to be 1:1 frame rendering.
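To make the "not 1:1 frame rendering" point concrete, here is a minimal sketch of the idea, not anything taken from the demo itself. It assumes a fixed network latency measured in frames and uses a single float as a stand-in for a whole indirect-lighting buffer; `IndirectUpdate`, `simulate`, `server_every` and `latency_frames` are all made-up names for illustration. The client computes direct lighting every frame and simply reuses the newest indirect result that has arrived.

```python
from dataclasses import dataclass


@dataclass
class IndirectUpdate:
    frame_sent: int   # client frame on which the server produced this update
    value: float      # hypothetical stand-in for a whole indirect-lighting buffer


def simulate(num_frames, server_every, latency_frames):
    """Return one (lit_value, staleness_in_frames) tuple per client frame."""
    in_flight = []   # (arrival_frame, update) pairs still on the network
    latest = None    # newest update the client has actually received
    frames = []
    for frame in range(num_frames):
        # Server: indirect lighting is only recomputed every `server_every` frames.
        if frame % server_every == 0:
            update = IndirectUpdate(frame_sent=frame, value=0.1 * frame)
            in_flight.append((frame + latency_frames, update))
        # Network: hand over anything that has arrived by this frame.
        while in_flight and in_flight[0][0] <= frame:
            latest = in_flight.pop(0)[1]
        # Client: direct lighting is local and computed every single frame.
        direct = 1.0
        indirect = latest.value if latest is not None else 0.0
        staleness = frame - latest.frame_sent if latest is not None else None
        frames.append((direct + indirect, staleness))
    return frames


if __name__ == "__main__":
    results = simulate(10, server_every=3, latency_frames=2)
    for frame, (lit, stale) in enumerate(results):
        print(frame, round(lit, 2), stale)
```

In this toy model the client never stalls waiting on the server; the indirect term is just a few frames old, at worst `latency_frames + server_every - 1` frames. Real connections have variable lag, so actual staleness would wander rather than follow this neat pattern.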

This is a very good sign for both the ps4 and Xbone. Think of it as a way to upgrade the machines in the future without really upgrading their hardware.

Yes, in an alternate universe where servers are free. Sure

I don't ever want to buy a game knowing that 5 years down the line it'll be unplayable because the publisher couldn't financially justify keeping the indirect lighting cloud servers online.

Maybe that's just me though.

No, realistically the cost is the biggest barrier.

Yes, realistically it's still an unrealistic feat with existing tech. Variable lag is the biggest barrier.

The tablet solution is full cloud rendering while for desktops it feeds data into the local rendering pipeline.

So one thing that needs to be clarified is whether this is running completely on the cloud or augmenting a local client. 'Cause if it's the former it's nothing new and has zero implication on XB1's "infinite power".

this. but it's your choice if you want to support this, don't weep later though

I don't ever want to buy a game knowing that 5 years down the line it'll be unplayable because the publisher couldn't financially justify keeping the indirect lighting cloud servers online.

Maybe that's just me though.

Ok, hope enough people realize there is a lot of difference between a cloud system with GPUs and one with only CPUs...

Anyway, will have to wait to see the damn video...

Now we just need the US to invest in a modern countrywide data infrastructure... balls.

All our indirect lighting algorithms run in the Cloud on a GeForce TITAN. The voxel algorithm streams video to the user and relies on a secondary GPU to render view-dependent frames and perform H.264 encoding. Because this secondary GPU leverages direct GPU-to-GPU transfer features of NVIDIA Quadro cards (to quickly transfer voxel data), we use a Quadro K5000 as this secondary GPU. Timing numbers for client-side photon reconstruction occurred on a GeForce 670.

I can already see where this is going. For months we've been arguing against the "INFINITE POWER OF THE CLOUD, IT'S AMAZING!" line, basically trying to say it has a lot of limitations and is far, far from the idealistic picture that it's being portrayed as. The system has tons of barriers standing in its way, like: what if people are offline? What if it's a multi-platform game? What will happen on the other platforms if they don't have any backend server support? Overhead? Worth implementing? Azure being primarily a CPU farm whilst this stuff also needs GPU rendering, as proven in the OP video, how will that work? Basically trying to deflate the pumped-up PR image of the cloud in its current form.

That is slowly turning into: LOL EVERYONE SAID THE CLOUD WAS FAKE AND IT DIDN'T WORK!!!1

APUs?

Is Gaikai still using Nvidia Grid?

Come on now. A lot of people on GAF said that.

Seriously?

Ok that's cool.

Found this.

Seems like it's somewhat augmenting the lighting, but is it improving it?

Yeah, no. I was specifically told that I was an idiot for believing that the cloud would ever be used to improve graphics.

The prophecy has been fulfilled.

It's doing the part of the lighting that is traditionally pre-rendered.

Ok that's cool.

Found this.

Seems like its somewhat augmenting the lighting, but is it improving it?

Used in the real world? Nope.

Used in proof of concept? Sure.

Yeah but DriveClub looks poor graphically so it'd be hard to argue that offloading the lighting wouldn't be of any benefit.

That's what I'd like to know too. It also still seems to work better with better hardware. Maybe someone more well versed can break it down. I know a few next gen games are using Global Illumination in conjunction with real time lighting and shadows (DriveClub), so just how resource- or computationally intensive can it be to justify the use of the cloud and such expensive cloud hardware?
