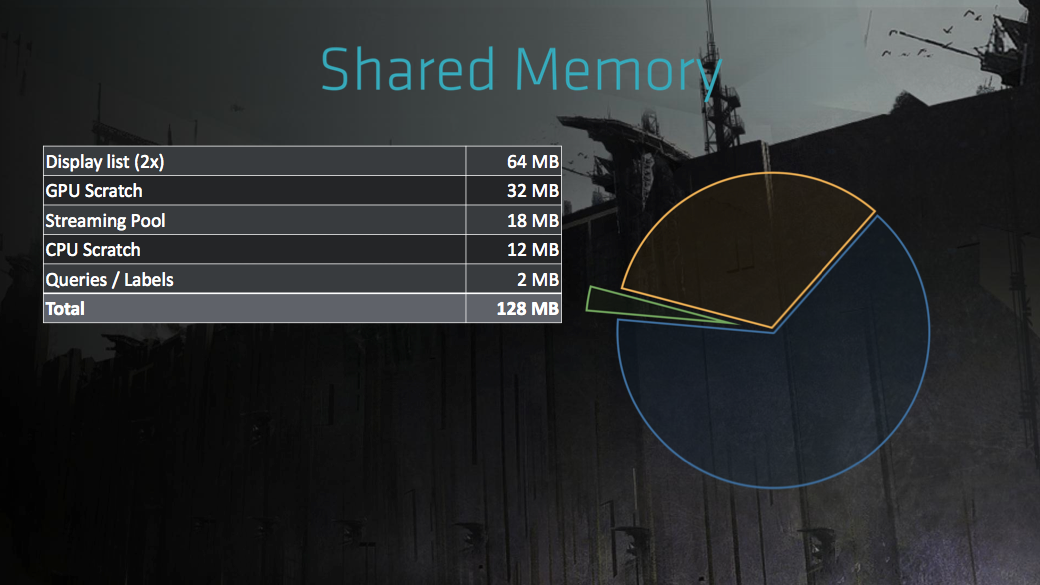

"First, we added another bus to the GPU that allows it to read directly from system memory or write directly to system memory, bypassing its own L1 and L2 caches. As a result, if the data that's being passed back and forth between CPU and GPU is small, you don't have issues with synchronization between them anymore. And by small, I just mean small in next-gen terms. We can pass almost 20 gigabytes a second down that bus. That's not very small in today's terms -- it's larger than the PCIe on most PCs!

"Next, to support the case where you want to use the GPU L2 cache simultaneously for both graphics processing and asynchronous compute, we have added a bit in the tags of the cache lines, we call it the 'volatile' bit. You can then selectively mark all accesses by compute as 'volatile,' and when it's time for compute to read from system memory, it can invalidate, selectively, the lines it uses in the L2. When it comes time to write back the results, it can write back selectively the lines that it uses. This innovation allows compute to use the GPU L2 cache and perform the required operations without significantly impacting the graphics operations going on at the same time -- in other words, it radically reduces the overhead of running compute and graphics together on the GPU."
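To make the 'volatile' bit idea concrete, here is a minimal Python sketch of a write-back cache whose lines carry such a tag. It is a toy model, not the PS4 hardware: the names `CacheLine`, `ToyL2`, `invalidate_volatile` and `writeback_volatile` are invented for illustration, and a plain dictionary stands in for system memory.

```python
from dataclasses import dataclass


@dataclass
class CacheLine:
    data: int
    volatile: bool = False  # tag bit: set when the line was filled or written by compute
    dirty: bool = False     # modified in cache, not yet written back to memory


class ToyL2:
    """Toy write-back cache keyed by address (hypothetical, for illustration only)."""

    def __init__(self, memory):
        self.memory = memory  # dict: address -> value, stands in for system memory
        self.lines = {}       # address -> CacheLine

    def read(self, addr, volatile=False):
        line = self.lines.get(addr)
        if line is None:  # miss: fill from memory, tagging the line with the accessor's bit
            line = CacheLine(self.memory[addr], volatile=volatile)
            self.lines[addr] = line
        return line.data

    def write(self, addr, value, volatile=False):
        self.lines[addr] = CacheLine(value, volatile=volatile, dirty=True)

    def invalidate_volatile(self):
        """Drop only compute-tagged lines; graphics lines stay resident.

        Assumes any dirty volatile lines were written back beforehand.
        """
        self.lines = {a: l for a, l in self.lines.items() if not l.volatile}

    def writeback_volatile(self):
        """Flush only dirty compute-tagged lines to memory; return how many were flushed."""
        flushed = 0
        for addr, line in self.lines.items():
            if line.volatile and line.dirty:
                self.memory[addr] = line.data
                line.dirty = False
                flushed += 1
        return flushed
```

In this sketch a compute job would call `invalidate_volatile()` before reading data the CPU has just updated, and `writeback_volatile()` once its results are ready. Lines touched only by graphics are neither evicted nor flushed in either step, which is the selectivity the quote describes.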

Thirdly, said Cerny, "The original AMD GCN architecture allowed for one source of graphics commands, and two sources of compute commands. For PS4, we've worked with AMD to increase the limit to 64 sources of compute commands -- the idea is if you have some asynchronous compute you want to perform, you put commands in one of these 64 queues, and then there are multiple levels of arbitration in the hardware to determine what runs, how it runs, and when it runs, alongside the graphics that's in the system."
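The queue model Cerny describes can be sketched in a few lines of Python. The interview does not say how the hardware arbitrates between queues, so this sketch substitutes a single level of plain round-robin as a stand-in. The class `ToyComputeFrontEnd` and its methods are hypothetical names, not part of any real API.

```python
from collections import deque

NUM_COMPUTE_QUEUES = 64  # figure quoted in the interview


class ToyComputeFrontEnd:
    """Toy model of many compute command queues feeding one dispatcher."""

    def __init__(self, num_queues=NUM_COMPUTE_QUEUES):
        self.queues = [deque() for _ in range(num_queues)]
        self._next = 0  # round-robin cursor (assumed policy, not the real arbitration)

    def submit(self, queue_index, command):
        """Append a command to one of the queues."""
        self.queues[queue_index].append(command)

    def pick_next(self):
        """Return (queue_index, command) from the next non-empty queue, or None if all are empty."""
        n = len(self.queues)
        for offset in range(n):
            i = (self._next + offset) % n
            if self.queues[i]:
                self._next = (i + 1) % n  # resume the scan after the queue just served
                return i, self.queues[i].popleft()
        return None
```

Round-robin keeps one busy queue from starving the others, but real hardware would also have to weigh priorities and the graphics workload, which this sketch leaves out.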