cleveridea

Member

I doubt it. AMD has effectively become the "other" brand. In short, it's the alternative brand. I don't know why anyone dropping such serious coin on GPUs would entertain the idea of the Fury X.

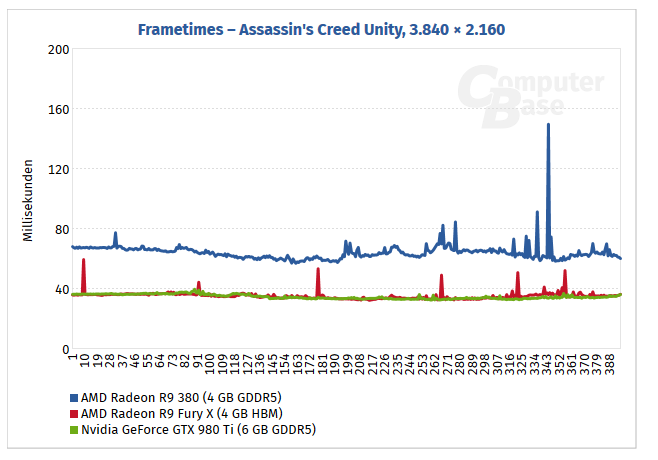

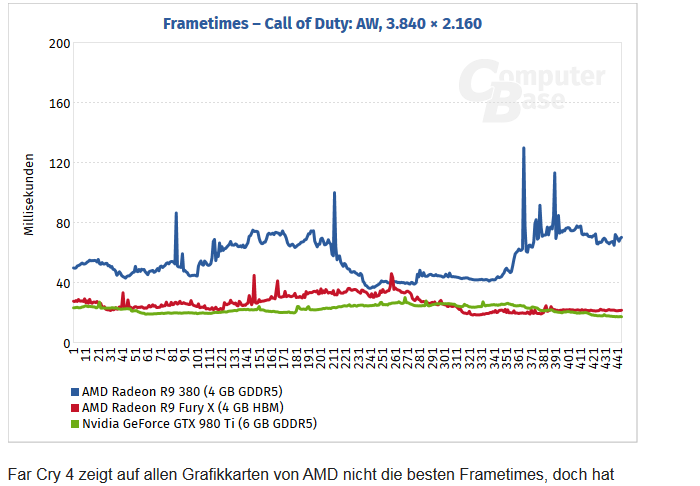

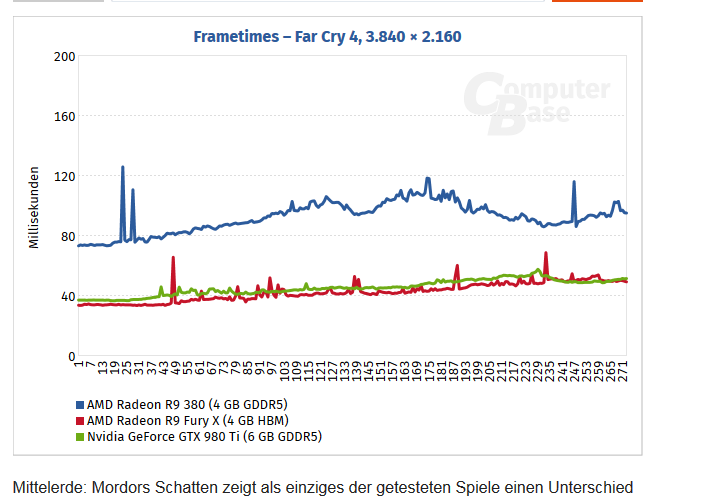

I know people will damage control and say it isn't a bad card, and I agree. However, it does nothing to help AMD's current situation. They've developed such an awful reputation for piss-poor performance on day 1 titles (Arkham Knight, AC: Unity, GTA V) that people go out of their way to avoid purchasing their cards.

This card was supposed to have a healthy performance lead over the competing Nvidia cards AND be underpriced. Instead it achieves neither and positions itself as a boutique solution, effectively relegating it to niche product status.

Godspeed AMD. Drop the price, put HDMI 2.0 in, and get your drivers together.

Yeah, I don't know if it's just effects missing until a patch, but Batman looks so much better with the Nvidia effects on than off that it's ridiculous.