This is the kind of like-for-like benchmark I love from DF. I don't know why they stopped doing them in their more recent videos. They used to do long side-by-side comparisons; now they show one console at a time, first one console in one area and then the other in a slightly or completely different run.

I agree. In that sense, VG Tech is much better today; the problem is that they do very little analysis.

On the topic at hand, I think we focus too much on hardware differences. For example, Cyberpunk ran better on PS4 Pro than on One X:

Eurogamer (www.eurogamer.net): "Cyberpunk 2077's 1.2 patch arrived last week, accompanied by an absolutely gigantic list of bug fixes, tweaks and upgra…"

> You can see in the video how this affects the overall presentation, especially on PlayStation 4 Pro, but the upshot is that there are clear performance benefits. Sony's enhanced console always ran the game best, even beating out the more powerful Xbox One X.

PS4 Pro performs much better than Xbox One X in this game. We know that the power gap was large in the One X's favor, and that the One X far outperformed the PS4 Pro in most cross-platform games.

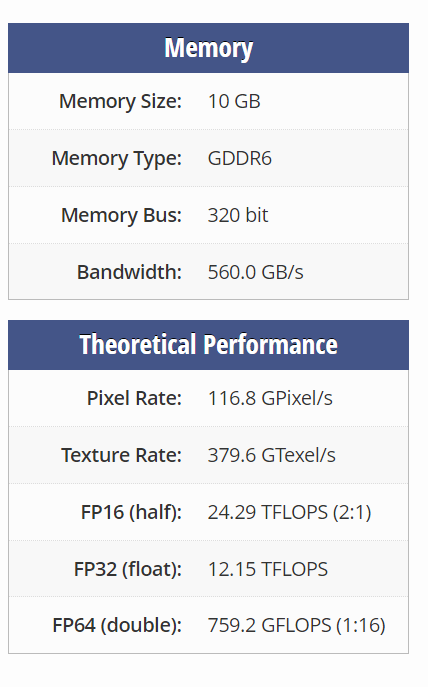

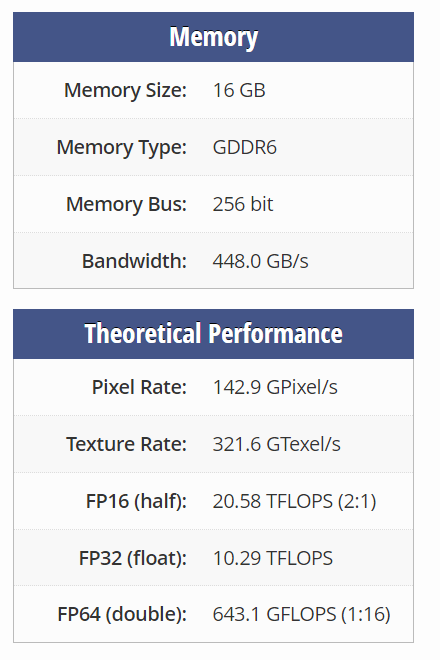

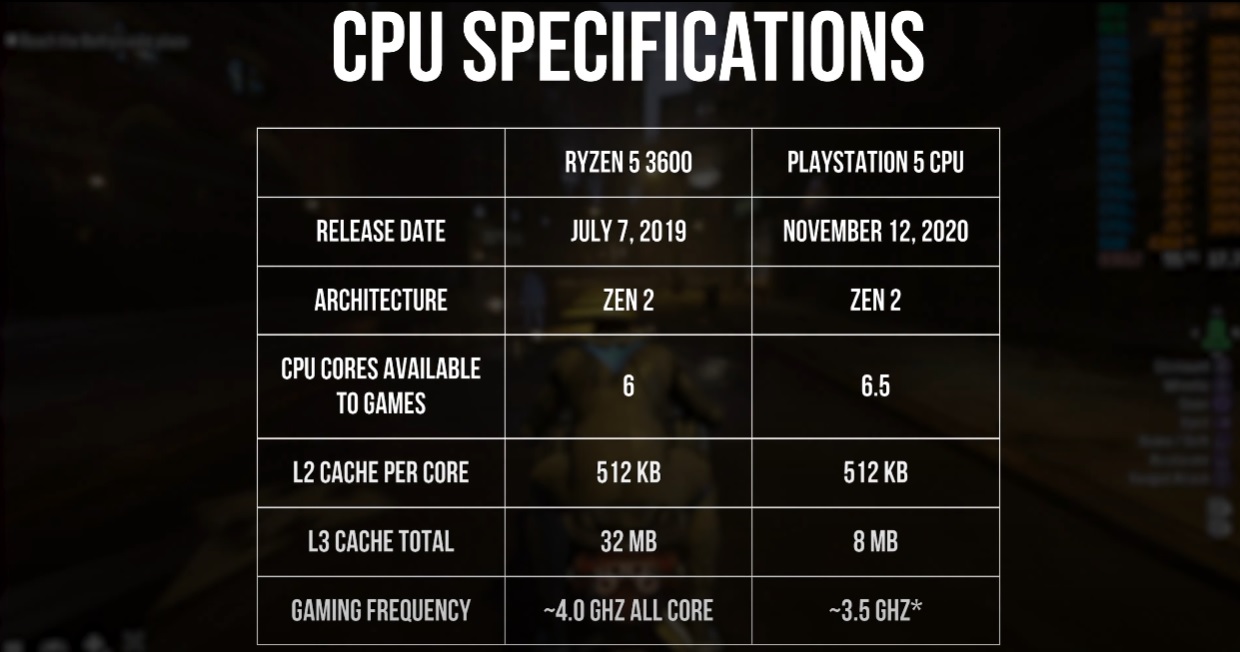

The One X has a more powerful GPU across the board, a slightly faster CPU, more memory, more memory bandwidth, and so on.

Nothing at the hardware level explains this. We can speculate, but in the end it most likely comes down to the most important factor: software.

We have already seen that the difference varies from one multiplatform game to another:

- Alan Wake 2 runs best on XSX.
- Cyberpunk runs best on PS5.
- Prince of Persia: The Lost Crown runs best on XSX.
- Baldur's Gate 3 runs best on PS5.
- The Talos Principle runs best on XSX.
- Call of Duty MW3 runs best on PS5.

In some of these the differences are relatively large (Alan Wake 2, Cyberpunk), in others very small (COD), and in some games there is no clear winner: RoboCop has a better frame rate on XSX but lower graphics settings, Avatar has a higher resolution on XSX but more stuttering and more noise, AC: Mirage has a higher minimum resolution on XSX but more frequent frame-rate drops, and so on.

Even the cases cited above are debatable: Cyberpunk also runs at a slightly higher resolution on XSX, and according to NXGamer, COD: MW3 performs better on XSX in its 120fps mode.

I PERSONALLY think that XSX has a slight advantage in hardware.

That would explain why games on XSX more often run at slightly higher resolutions or with less aggressive dynamic resolution scaling (DRS). But if PS5 is the lead platform for development and gets more optimization attention, this is the result: effectively a technical tie.