

Substance Engine benchmark implies PS4 CPU is faster than Xbox One's

- Thread starter Brad Grenz


Night Angel

Member

Why wouldn't Sony be touting this?

Because they aren't desperate for a (minor) advantage and they've already used the "most powerful console" card. Why would they?

Melchiah

Member

Because they aren't desperate for a (minor) advantage and they've already used the "most powerful console" card. Why would they?

Well, amusingly Microsoft is marketing the XB1 here in Finland as "the best console of new generation".

Because they aren't desperate for a (minor) advantage and they've already used the "most powerful console" card. Why would they?

Because MS has been pushing their CPU as more powerful. If your competitor is lying about your product in a way that fights your marketing strategy, you set the record straight. What reason do they have for not simply tweeting "The CPU is ### GHz"?

Since this is the only real data we have, we have to go with it. It's just confusing to me why Sony would let MS have that advantage when they've released info on so many other things.

I love these types of threads.

In my ignorance I can never work out if every participant is an expert in their field or whether random factoids are simply pulled from the nearest passing ass.

Everybody can't be right, can they?

They are when they all live in a different world.

Because MS has been pushing their CPU as more powerful. If your competitor is lying about your product in a way that fights your marketing strategy, you set the record straight. What reason do they have for not simply tweeting "The CPU is ### GHz"?

Since this is the only real data we have, we have to go with it. It's just confusing to me why Sony would let MS have that advantage when they've released info on so many other things.

Sony have already established the idea amongst the hardcore that PS4 has more power. There is no need to address this snippet of information since it is irrelevant to the bigger picture.

Sony have already established the idea amongst the hardcore that PS4 has more power. There is no need to address this snippet of information since it is irrelevant to the bigger picture.

It's not like it's hard work to state "our CPU is such-and-such speed." They did it for their RAM, GPU and everything else. It just seems strange.

Apophis2036

Banned

So the PS4 CPU is clocked at 2GHz? Wow, if true. I thought 1.6 was confirmed by official Sony documents.

The PS3 was even more expensive at $600, and the games for it until MGS4 hit were not that appealing. Yet it still sold better than the Xbox 360 on average per year.

Sony barely got away with that because the PS3 came after their best-selling predecessors (PS1 & PS2), & because the PS brand was big at the time (& most likely still is).

Microsoft only got big in the U.S. & the UK with the 360 because of Sony's constant foul-ups with the PS3 (which Robbie Bach himself admitted). They weren't even that popular with the original Xbox.

You complain too much to be 'impartial'. So drop the act, it's pretty transparent.

You really don't like anyone talking "ill" of xone, huh?

You try to play the neutral poster guy, but your posting habits resemble someone who sees Sony "fanboys" in his sleep.

Your posts extend beyond this thread. You jump in wherever a bad word is spoken of Xbox. You don't seem to run to the defense of Sony or Nintendo.

Exactly. If he doesn't like posts that talk bad about Xbox One, then why does he keep responding to them?

LOL. He just keeps on doing it over & over...& he still doesn't learn from it.

travisbickle

Member

So the PS4 CPU is clocked at 2GHz? Wow, if true. I thought 1.6 was confirmed by official Sony documents.

I actually believe 1.6 was only ever confirmed by MS, for the One's CPU, when it announced the upclock.

All part of game journalists willingness to make stories out of half-information.

I'm not doubting what he said at all. I simply said that it's interesting.

I quoted you word for word; you didn't "simply" say it was interesting. There was a little more to it than that, and doubt was certainly expressed.

And yes, many people got the PS2 due to it being a cheap DVD player. DVD movie sales greatly went up after the PS2 was released.

Many people buying it as a DVD player doesn't mean *everyone* bought it for that reason, or was even aware of it. And there's certainly a question of how long that selling point was even widely attributed to the PS2 past its first year, when standalone DVD players started undercutting the PS2's price.

Honestly, that seemed a much bigger selling point in Japan than anywhere else. By the time the PS2 arrived in North America, it was only another year or so before standalone DVD players were in the $100-200 range.

Goodacre0081

Member

Because they aren't desperate for a (minor) advantage and they've already used the "most powerful console" card. Why would they?

MS played that card and it wasn't even true.

KoolAidPitcher

Member

Lots of PS4 fanboys here, but I want to stress that benchmarks like these cannot exist in a vacuum. The faster RAM could have easily impacted the CPU benchmarks.


serversurfer

Member

They are the EXACT SAME CPU modules clocked at different speeds.

You seem to be clinging to the notion that PS4 is clocked at 1.6 GHz while Xbone is clocked at 1.75 GHz. You're claiming Sony would "need" to clock at 1.6 GHz to save money, but that argument would apply equally to MS, and we know they're at 1.75 GHz.

The only logical explanation for the benchmark on the PS4 outperforming the Xbox One is that the Xbox One's operating systems are greedier and reserve more of the hardware for themselves.

More to the point, this test seems to indicate the PS4 is not clocked at 1.6 GHz; the math doesn't work out otherwise. It seems fairly clear the PS4 is clocked at 1.75 or 2 GHz — depending on how the chips are being tested — and I'm currently leaning towards the latter.

Perhaps there is also a slight advantage to the PS4 when writing to and reading from RAM.

Again, this was covered in the OP. The test is not affected by bandwidth or other external concerns; it's purely based on the performance of the CPU itself.

… but this performance graph is for single core only, the gulf between the iOS CPUs proves that.

Can I ask again how you're determining that? Both the iPad 2 and iPhone 5 are dual-core machines, with the latter on a newer architecture and a 30% higher clock. How does a 66% performance advantage for the iPhone 5 show us the test is per-core rather than per-CPU?

That said, comparing the iPhone 5 to the Tegra 4 would seem to indicate a per-core test. That, or the quad-core Tegra 4 is really shitty at this test when compared to the dual-core A6. lol
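A rough way to see why the iPad 2 / iPhone 5 pair can't settle the per-core question while the Tegra 4 / A6 pair can: under a naive linear model, a chip's score is its per-core speed (architecture factor × clock) times the number of cores the test actually uses. This is a minimal sketch; the `Chip` type, the `expected_ratio` helper, and the assumption of perfect multi-core scaling are all hypothetical and not taken from the benchmark's documentation. The clocks are relative values chosen to mirror the "30% higher clock" mentioned above.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    # Hypothetical, simplified description of a CPU for this comparison.
    cores: int
    clock_ghz: float
    ipc: float  # relative work per clock per core (architecture factor)


def expected_ratio(a: Chip, b: Chip, per_core: bool) -> float:
    """Predicted score ratio a/b under a naive linear model.

    per_core=True  -> the test runs on one core, so core count is ignored.
    per_core=False -> the test scales perfectly across every core.
    """
    def score(c: Chip) -> float:
        used = 1 if per_core else c.cores
        return c.ipc * c.clock_ghz * used

    return score(a) / score(b)


# Two dual-core chips, one with a 30% higher clock: the per-core and
# whole-chip predictions coincide, so the observed ratio can't tell them apart.
newer_dual = Chip(cores=2, clock_ghz=1.3, ipc=1.0)
older_dual = Chip(cores=2, clock_ghz=1.0, ipc=1.0)
print(expected_ratio(newer_dual, older_dual, per_core=True),
      expected_ratio(newer_dual, older_dual, per_core=False))

# A quad-core vs a dual-core chip: the whole-chip prediction is twice the
# per-core one, which is why that pairing is the more informative comparison.
quad = Chip(cores=4, clock_ghz=1.0, ipc=1.0)
dual = Chip(cores=2, clock_ghz=1.0, ipc=1.0)
print(expected_ratio(quad, dual, per_core=True),
      expected_ratio(quad, dual, per_core=False))
```

Real chips don't scale perfectly across cores, so this only shows which comparisons *can* discriminate between the two cases, not what the benchmark actually does.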

KoolAidPitcher

Member

You seem to be clinging to the notion that PS4 is clocked at 1.6 GHz while Xbone is clocked at 1.75 GHz.

Every source I can find online claims the PS4 is clocked at 1.6GHz and the Xbox One is clocked at 1.75GHz. Since the PS4's CPU modules are THE SAME SILICON as the Xbox One's, the Xbox One's will perform better than the PS4's in a vacuum (without considering OS, memory, etc.) by a small margin. If these facts are indeed wrong (please provide a source if so), then you may be right.

You're claiming Sony would "need" to clock at 1.6 GHz to save money, but that argument would apply equally to MS, and we know they're at 1.75 GHz.

As for my 'argument,' it would apply more to the Xbox One than the PS4 because the Xbox One APUs are larger and more costly to produce, especially due to the ESRAM. My point was that Microsoft and Sony both took AMD's chip yields into consideration. For example, if 80% of APUs were able to be clocked at 1.75GHz, that yield might have been good for Microsoft, but Sony wanted a 90% chip yield and opted for the lower clock speed to save costs.
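The binning argument can be made concrete with a toy model. Assume, purely for illustration, that each die's maximum stable CPU clock follows a normal distribution; the share of dies that can run at a target clock is then the upper tail of that distribution. The `fraction_meeting_clock` helper, the 1.9 GHz mean, and the 0.12 GHz spread are invented for this sketch and are not AMD data; real binning also involves power and thermal limits that this ignores.

```python
import math


def fraction_meeting_clock(target_ghz: float, mean_ghz: float, sd_ghz: float) -> float:
    """Share of dies whose max stable clock is >= target, assuming a normal distribution.

    Uses the normal survival function 1 - Phi(z) = 0.5 * erfc(z / sqrt(2)).
    """
    z = (target_ghz - mean_ghz) / sd_ghz
    return 0.5 * math.erfc(z / math.sqrt(2))


# Hypothetical distribution: mean 1.9 GHz, standard deviation 0.12 GHz.
MEAN, SD = 1.9, 0.12
for clock in (1.6, 1.75, 2.0):
    share = fraction_meeting_clock(clock, MEAN, SD)
    print(f"{clock:.2f} GHz: {share:.1%} of dies qualify")
```

Lowering the target clock raises the share of usable dies, which is the trade-off the post describes; whether either company actually made that trade is not something a model like this can show.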

More to the point, this test seems to indicate the PS4 is not clocked at 1.6 GHz; the math doesn't work out otherwise. It seems fairly clear the PS4 is clocked at 1.75 or 2 GHz — depending on how the chips are being tested — and I'm currently leaning towards the latter.

The 'math' does not prove anything. A benchmark is not conducted inside a vacuum. Unless the benchmark is not running on an operating system and does not use memory (only using the CPU registers and CPU caches), it cannot possibly be testing only the CPU performance. And from all the data I have seen, since the Xbox One's CPU module is THE SAME SILICON as the PS4's CPU module and is at a higher clock, it will outperform the PS4's CPU by a small margin.

Again, this was covered in the OP. The test is not affected by bandwidth or other external concerns; it's purely based on the performance of the CPU itself.

How? As an engineer, I would like to know the exact methodology behind this test. Does it not run on an operating system? How does it not use RAM? Was the test small enough to run entirely in the CPU cache, and if so, how did the operating system not interfere? I did not see that information in the OP, so I am skeptical of this assumption.

For all we know, all this benchmark is proving is that the FreeBSD operating system kernel used on the PS4 outperforms the Windows operating system kernel used on the Xbox One. Biiiiig surprise there...

There is a caveat to all of this. If the Xbox One has disabled more CPU cores than the PS4 in order to improve chip yields, this could also mean the PS4's lower clock-rate CPU will outperform.

Every source I can find online claims the PS4 is clocked at 1.6GHz and the Xbox One is clocked at 1.75GHz.

Wat.

How can there be a source if Sony has never given the CPU speed? Those sources of yours are giving their opinion on what they BELIEVE the CPU speed is, based on comparisons with similar chips.

KoolAidPitcher

Member

Wat.

How can there be a source if Sony has never given the CPU speed? Those sources of yours are giving their opinion on what they BELIEVE the CPU speed is, based on comparisons with similar chips.

Once again-- I just searched, and every article I can find claims the PS4's CPU is 1.6GHz, including Wikipedia. I'm not going to say that it isn't; however, I think it would be safe to assume 1.6GHz until proven otherwise.

Also, this 1.75GHz and 2GHz rumor was started by an apples-to-oranges comparison. Due to differences in RAM and operating systems, the Xbox One benchmark results cannot be compared directly to the PS4's. If one apple benchmarks at 1.25x the rate of an orange, that does not mean I have 1.25 apples.

Every source I can find online claims the PS4 is clocked at 1.6GHz and the Xbox One is clocked at 1.75GHz. Since the PS4's CPU modules are THE SAME SILICON as the Xbox One's, the Xbox One's will perform better than the PS4's in a vacuum (without considering OS, memory, etc.) by a small margin. If these facts are indeed wrong (please provide a source if so), then you may be right.

therein lies your problem, does it not??...because there have been ZERO official sources saying that the PS4's CPU runs at 1.6ghz...it was always an assumption...as it was with the Xbone...

of course it was also widely assumed at one point that the Xbone would be significantly more powerful...and it was a damn near GUARANTEE at one point that the PS4 would ship with 4GB of GDDR5 vs the Xbone's 8GB of DDR3...we all know how those assumptions and "sources" turned out huh??...

i also like your assumption that the silicon is identical...modular or not, the APUs are different enough that it would not shock me one bit to find out that the CPU architecture has been tweaked...

was it not said by AMD that its agreements with Sony and MS were completely different, with AMD licensing technology to MS for them to design their own silicon?...

in a vacuum your ASSUMPTION would hold true, however it is you that has the burden of proof here...there is evidence in the OP that suggests the PS4 CPU runs at a higher clock speed...you refute this...you need to provide the information to support your claim...

As for my 'argument,' it would apply more to the Xbox One than the PS4 because the Xbox One APUs are larger and more costly to produce, especially due to the ESRAM. My point was that Microsoft and Sony both took AMD's chip yields into consideration. For example, if 80% of APUs were able to be clocked at 1.75GHz, that yield might have been good for Microsoft, but Sony wanted a 90% chip yield and opted for the lower clock speed to save costs.

you're pulling numbers out of your ass and they mean nothing...you don't know what yield numbers either company was happy with or aiming for...you have no idea what thermal characteristics each side was looking for...or for that matter what clock speed Sony was aiming for...none of us do...

The 'math' does not prove anything. A benchmark is not conducted inside a vacuum. Unless the benchmark is not running on an operating system and does not use memory (only using the CPU registers and CPU caches), it cannot possibly be testing only the CPU performance. And from all the data I have seen, since the Xbox One's CPU module is THE SAME SILICON as the PS4's CPU module and is at a higher clock, it will outperform the PS4's CPU by a small margin.

again with the assumptions...yes, the CPU cores are all Jaguar, yes the GPUs are from the same family...but that does not mean the CPUs are identical...there are SIGNIFICANT differences in EVERY SINGLE other part of the APU...why not the CPU cores?...

you're also once again ASSUMING the Xbone CPU runs at a higher clock speed...when the only hard data on the matter (this benchmark) suggests otherwise...

How? As an engineer, I would like to know the exact methodology behind this test. Does it not run on an operating system? How does it not use RAM? Was the test small enough to run entirely in the CPU cache, and if so, how did the operating system not interfere? I did not see that information in the OP, so I am skeptical of this assumption.

as an engineer i would expect you to be able to put together an argument that doesn't hinge completely on baseless assumptions...

For all we know, all this benchmark is proving is that the FreeBSD operating system kernel used on the PS4 outperforms the Windows operating system kernel used on the Xbox One. Biiiiig surprise there...

what we DO know is the PS4's CPU outperformed the Xbone's...

There is a caveat to all of this. If the Xbox One has disabled more CPU cores than the PS4 in order to improve chip yields, this could also mean the PS4's lower clock-rate CPU will outperform.

much more likely that the Xbone reserves an additional core for OS-related activities...iirc the chip x-rays showed no additional CPU cores for redundancy on either APU, while each APU has 2 disabled CUs on the GPU side...

so a 1-core vs 2-core OS reservation would certainly benefit the PS4...

unless your name is misterX and you want me to believe that the Xbone uses "dark silicon" and has additional CPU and GPU cores stacked underneath the ones we know about that can't be seen by x-rays...then of course your train of thought makes sense...
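To put a rough number on the OS-reservation point: if a benchmark spreads its work across every core a game is allowed to use, and per-clock performance is otherwise identical, aggregate throughput scales with (cores available) × (clock). The `relative_throughput` helper is hypothetical, and the figures fed to it (8 cores total, 1 vs 2 reserved, 1.6 vs 1.75 GHz) are the numbers being debated in the thread, not confirmed specifications. If the test only exercises a single core, as some posters argue from the mobile results, the reservation drops out of the comparison entirely.

```python
def relative_throughput(total_cores: int, reserved_cores: int, clock_ghz: float) -> float:
    """Aggregate CPU throughput available to a game, in arbitrary units.

    Naive model: perfect scaling across available cores, identical work per clock.
    """
    available = total_cores - reserved_cores
    if available <= 0:
        raise ValueError("no cores left for the game")
    return available * clock_ghz


# Same clock on both machines, 1 vs 2 cores reserved for the OS.
same_clock = relative_throughput(8, 1, 1.75) / relative_throughput(8, 2, 1.75)

# The thread's rumored figures: PS4 at 1.6 GHz with 1 reserved core,
# Xbox One at 1.75 GHz with 2 reserved cores.
rumored = relative_throughput(8, 1, 1.6) / relative_throughput(8, 2, 1.75)

print(f"same clock: {same_clock:.3f}x, rumored clocks: {rumored:.3f}x")
```

Under these assumptions, one extra free core is worth more than the 1.6 vs 1.75 GHz clock gap, which is the intuition behind the post; it says nothing about whether the benchmark actually scales across cores.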

Dr. Kitty Muffins

Member

Why wouldn't Sony be touting this?

I think it doesn't hurt to constantly mention having the most powerful console in interviews. The selling point isn't hurting them. Might as well talk about it especially since mainstream gamers don't know.

Yes, you can get more out of the PS4's CPU than you can the Xbox's.

Forgive me, it may sound rude but I mean nothing negative by it, consider it my ignorance...

Who are you exactly? I take it you work in games development, but could you share a little about who you work with and what games you have developed for either of these new consoles?

Again, I mean nothing negative by my questioning.

KoolAidPitcher

Member

i also like your assumption that the silicon is identical...modular or not, the APUs are different enough that it would not shock me one bit to find out that the CPU architecture has been tweaked...

At the very most, there are only minor tweaks which will not affect performance one way or another. I designed computer circuitry in college, and no modern CPU is designed by hand anymore. The circuitry for CPUs is described in a hardware description language such as Verilog. If there are any differences between the PS4 and Xbox One silicon, it is the equivalent of modifying a few lines of Verilog.

was it not said by AMD that its agreements with Sony and MS were completely different, with AMD licensing technology to MS for them to design their own silicon?...

Read above. They both have Jaguar cores. The Verilog for both processors is either identical, or 99.9% the same.

in a vacuum your ASSUMPTION would hold true, however it is you that has the burden of proof here...there is evidence in the OP that suggests the PS4 CPU runs at a higher clock speed...you refute this...you need to provide the information to support your claim...

If you had read what I said, you'd know I did not outright refute this; however, I am very skeptical. I'm going to quote myself...

"As an engineer, I would like to know the exact methodology behind this test. Does it not run on an operating system? How does it not use RAM? Was the test small enough to run entirely in the CPU cache, and if so, how did the operating system not interfere?"

These are the valid questions I have which have not been answered, and will not be answered.

I'm done arguing with you. I have designed computer circuitry and you have not. My assumptions made are safe assumptions for anyone who knows the industry to be making.

Why wouldn't Sony be touting this?

They've flaunted the resolution advantage a few times. I think they're pretty content to keep it at that; it's much more relevant, immediately identifiable information to most of the audience than raw hardware numbers.

At the very most, there are only minor tweaks which will not affect performance one way or another. I designed computer circuitry in college, and no modern CPU is designed by hand anymore. The circuitry for CPUs is described in a hardware description language such as Verilog. If there are any differences between the PS4 and Xbox One silicon, it is the equivalent of modifying a few lines of Verilog.

Read above. They both have Jaguar cores. The Verilog for both processors is either identical, or 99.9% the same.

you're still basing everything on assumptions with nothing to actually back up your claim...

These are the valid questions I have which have not been answered, and will not be answered.

whether your questions are answered or not does not prove your theory by any stretch...the fact remains...the ONLY data related to the subject goes against your theory...the ball is in your court to prove otherwise...

I'm done arguing with you. I have designed computer circuitry and you have not. My assumptions made are safe assumptions for anyone who knows the industry to be making.

it's the typical response of someone who lacks the mental acuity to take part in an intelligent discussion where their point of view is challenged: hide behind the tired "i'm done, because i know what i'm talking about, and you don't" crap... *yawn*

i frankly don't care whether you have designed computer circuitry and i have not...i have no idea who you are, but i'd put my intellectual capacity against yours any day of the week and be confident in the outcome

your ENTIRE argument is based on the 1.75 vs 1.6 argument...yet we only have confirmation of one of those numbers...

Once again-- I just searched, and every article I can find claims the PS4's CPU is 1.6GHz, including Wikipedia. I'm not going to say that it isn't; however, I think it would be safe to assume 1.6GHz until proven otherwise.

Also, this 1.75GHz and 2GHz rumor was started by an apples-to-oranges comparison. Due to differences in RAM and operating systems, the Xbox One benchmark results cannot be compared directly to the PS4's. If one apple benchmarks at 1.25x the rate of an orange, that does not mean I have 1.25 apples.

Not really gonna argue with you over the clock speeds, but rather over your sources.

You claimed to have several sources that claim they know the clock speed but then the only one you link me is wikipediathatcantevenbeusedinaresearchpaperbecauseofitbeingerrorprone.

And even then the wiki page says " The CPU clock speed is said to be 1.6 GHz."

Said to be? LOL

malboroking

Banned

At the very most, there are only minor tweaks which will not affect performance one way or another. I designed computer circuitry in college, and no modern CPU is designed by hand anymore. The circuitry for CPUs is described in a hardware description language such as Verilog. If there are any differences between the PS4 and Xbox One silicon, it is the equivalent of modifying a few lines of Verilog.

Read above. They both have Jaguar cores. The Verilog for both processors is either identical, or 99.9% the same.

If you had read what I said, you'd know I did not outright refute this; however, I am very skeptical. I'm going to quote myself...

"As an engineer, I would like to know the exact methodology behind this test. Does it not run on an operating system? How does it not use RAM? Was the test small enough to run entirely in the CPU cache, and if so, how did the operating system not interfere?"

These are the valid questions I have which have not been answered, and will not be answered.

I'm done arguing with you. I have designed computer circuitry and you have not. My assumptions made are safe assumptions for anyone who knows the industry to be making.

First off, no one cares that you took an introduction to computer circuitry in college. Whoopdeydoo, so did everyone else with a Comp Sci degree. Second of all, we know the CPUs are both made of THE SAME SILICON; we've known that for months, and you don't need to bring it up over and over again. Lastly, the test that was performed is strictly CPU-based and does not use RAM, so the bandwidth difference would not change the results. Look up the test being performed before posting next time. As a dozen other computer-literate people have already pointed out, this test shows that either the PS4 CPU is clocked higher than the XB1's, or it is clocked the same and reserves one less core for the OS. We've had a game developer come into the thread and confirm these findings, and quite frankly I'd believe a confirmed developer over you any day of the week. Either way, the CPU for the PS4 is clocked higher than 1.6 GHz.

Brad Grenz

Member

Once again-- I just searched, and every article I can find claims the PS4's CPU is 1.6GHz, including Wikipedia. I'm not going to say that it isn't; however, I think it would be safe to assume 1.6GHz until proven otherwise.

Well, the point of this thread is to highlight that all of those reports were apocryphal to begin with. Sony has never officially confirmed the final clock speed of its CPU. The evidence everyone points to refers to an alpha kit from February; at the time, the Xbox One CPU also ran at 1.6 GHz, and the PS4 had only 4GB of RAM. Things change, and this benchmark certainly lends strong evidence to the conclusion that the PS4 CPU is clocked higher than everyone, including Microsoft, assumed.

Also, this 1.75GHz and 2GHz rumor was started by an apples-to-oranges comparison. Due to differences in RAM and operating systems, the Xbox One benchmark results cannot be compared directly to the PS4's. If one apple benchmarks at 1.25x the rate of an orange, that does not mean I have 1.25 apples.

It can't be apples to oranges, since the benchmark has both doing the same exact work.

The software in question is some kind of algorithmic texture generation system. Since the results it outputs are measured in tens of megabytes per second, and the whole point of progressive art generation is not having large art assets to begin with, it's unlikely bandwidth plays a large role in the results.

We already know Microsoft's OS reservation on the Xbox One is 2 of the 8 cores, so there should be no concern about the OS stealing time from the other 6 in this benchmark. I suppose we could blame the compiler, but given both are using AMD CPUs, you would think the compilers for both rely heavily on the same underlying AMD-provided technology.

The only other theory is that the hypervisor in the Xbox One is terribly inefficient. If we assume, as you have, that the PS4 is still clocked at 1.6 GHz, with the Xbox One at 1.75 GHz, that means the PS4 is nearly 30% faster clock for clock in this test. Frankly, I find it far easier to believe the PS4 clock speed was set at 2 GHz for final hardware than to believe the Xbox One's virtual machine setup is so dismally inefficient.

Ultimately, we don't have enough information to say exactly why the Xbox One's CPU is slower, but we do now have evidence showing that's the case. It doesn't matter how this is achieved, the results are what is important. And the only reason this minor difference is even particularly notable is that Microsoft was claiming a CPU advantage on this very forum, and they've earned every bit of dirt that's been kicked in their face this year.
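The clock-for-clock comparison is a ratio of ratios. Given two benchmark scores and two assumed clocks, the per-clock advantage is (score ratio) ÷ (clock ratio); turned around, if both chips do the same work per clock, the implied clock of one chip is the other's clock times the score ratio. A minimal sketch follows; the function names are illustrative, and the scores are placeholders in arbitrary units (only their ratio matters), not the benchmark's published figures.

```python
def per_clock_advantage(score_a: float, clock_a_ghz: float,
                        score_b: float, clock_b_ghz: float) -> float:
    """How much more work chip A does per clock than chip B (1.0 = identical)."""
    return (score_a / clock_a_ghz) / (score_b / clock_b_ghz)


def implied_clock(score_a: float, score_b: float, clock_b_ghz: float) -> float:
    """Clock chip A would need to reach score_a if it did exactly B's work per clock."""
    return clock_b_ghz * (score_a / score_b)


# Placeholder scores, arbitrary units; swap in real results to use this.
PS4_SCORE, XB1_SCORE = 1.17, 1.0

adv = per_clock_advantage(PS4_SCORE, 1.6, XB1_SCORE, 1.75)
clk = implied_clock(PS4_SCORE, XB1_SCORE, 1.75)
print(f"per-clock advantage if the PS4 is at 1.6 GHz: {adv:.2f}x")
print(f"PS4 clock implied by equal per-clock work: {clk:.2f} GHz")
```

The two functions are two readings of the same observation: either the per-clock gap is large (the hypervisor explanation), or per-clock work is equal and the clock is higher than 1.6 GHz.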

serversurfer

Member

Every source I can find online claims the PS4 is clocked at 1.6GHz and the Xbox One is clocked at 1.75GHz. Since the PS4's CPU modules are THE SAME SILICON as the Xbox One's, the Xbox One's will perform better than the PS4's in a vacuum (without considering OS, memory, etc.) by a small margin. If these facts are indeed wrong (please provide a source if so), then you may be right.

As mentioned, the 1.6GHz figure you're seeing is being repeated from the original VG Leaks report. AFAIK, Sony have never confirmed the actual clock.

If we assume PS4 is 1.6GHz and Xbone is 1.75GHz, then you're right, and we should see the XBone outperform the PS4. Yet we see the opposite. Hmm, perhaps our initial assumption is flawed in some way…

As for my 'argument,' it would apply more to the Xbox One than the PS4 because the Xbox One APUs are larger and more costly to produce, especially due to the ESRAM. My point was that Microsoft and Sony both took AMD's chip yields into consideration. For example, if 80% of APUs were able to be clocked at 1.75GHz, that yield might have been good for Microsoft, but Sony wanted a 90% chip yield and opted for the lower clock speed to save costs.

If you think MS would be more motivated to downclock for yield (and I agree), then why would you assume Sony were the ones who actually did so?

The 'math' does not prove anything. A benchmark is not conducted inside a vacuum. Unless the benchmark is not running on an operating system and does not use memory (only using the CPU registers and CPU caches), it cannot possibly be testing only the CPU performance. And from all the data I have seen, since the Xbox One's CPU module is THE SAME SILICON as the PS4's CPU module and is at a higher clock, it will outperform the PS4's CPU by a small margin.

Well, there are certainly benchmarks designed to run entirely within a chip's cache, and since those caches are managed by the chip rather than the OS, I don't understand why the OS would have any effect on the test. I could see compiler differences affecting the test, but if Allegorithmic were getting incongruous results (say, the lower-spec'd chip outperforming the higher-spec'd one), I would assume they would correct the issue rather than publish flawed results.

That said, after re-reading the linked article, I don't see where it actually says the test can't be memory-bound, though the XBone is only producing 12 MB of data per second. It would seem even the anemic DDR3 on the XBone would be more than capable of handling that kind of output. Brad, can you shed some light here? Where did that claim come from?

There is a caveat to all of this. If the Xbox One has disabled more CPU cores than the PS4 in order to improve chip yields, this could also mean the PS4's lower clock-rate CPU will outperform.

Err, I don't think either disables any CPU cores at all, though rumor has it the XBone reserves two for the OS, while the PS4 reserves only one. Both systems disable two GPU cores, though, leaving 12 active on XBone and 18 active on PS4. Perhaps that's what you're thinking of? But again, looking at the Tegra 4 and A6 results, this seems to be testing the performance of a single core, meaning OS reservations/interference aren't relevant here.

Edit: Well, speak of the Devil, and who should appear but the Devil himself??

The software in question is some kind of algorithmic texture generation system. Since the results it outputs are measured in tens of megabytes per second, and the whole point of progressive art generation is not having large art assets to begin with, it's unlikely bandwidth plays a large role in the results.

Thanks for the explanation. Also, yay, I'm smart!!
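A quick sanity check on the bandwidth question, assuming the commonly reported Xbox One main-memory configuration of DDR3-2133 on a 256-bit bus: peak bandwidth is transfer rate × bus width in bytes, roughly 68 GB/s, so the 12 MB/s output stream mentioned earlier is a tiny fraction of it. The `peak_bandwidth_bytes_per_s` helper is illustrative, and MB is treated as 10^6 bytes. The caveat is that the output rate only bounds the final writes; the intermediate reads and writes a texture generator performs could be much larger, so this supports, rather than proves, the claim that the test isn't bandwidth-bound.

```python
def peak_bandwidth_bytes_per_s(transfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth: transfers per second x bytes per transfer."""
    return transfers_per_s * (bus_width_bits / 8)


# Assumed Xbox One configuration: DDR3-2133 (2133 MT/s) on a 256-bit bus.
xb1_peak = peak_bandwidth_bytes_per_s(2133e6, 256)

# Output rate mentioned in the thread for the Xbox One run, in bytes per second.
output_rate = 12e6

print(f"peak: {xb1_peak / 1e9:.1f} GB/s")
print(f"output stream uses {output_rate / xb1_peak:.4%} of peak")
```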

KoolAidPitcher

Member

Well, the point of this thread is to highlight that all of those reports were apocryphal to begin with. Sony has never officially confirmed the final clock speed of its CPU. The evidence everyone points to refers to an alpha kit from February; at the time, the Xbox One CPU also ran at 1.6 GHz, and the PS4 had only 4GB of RAM. Things change, and this benchmark certainly lends strong evidence to the conclusion that the PS4 CPU is clocked higher than everyone, including Microsoft, assumed.

It can't be apples to oranges, since the benchmark has both doing the same exact work.

The software in question is some kind of algorithmic texture generation system. Since the results it outputs are measured in tens of megabytes per second, and the whole point of progressive art generation is not having large art assets to begin with, it's unlikely bandwidth plays a large role in the results.

We already know Microsoft's OS reservation on the Xbox One is 2 of the 8 cores, so there should be no concern about the OS stealing time from the other 6 in this benchmark. I suppose we could blame the compiler, but given both are using AMD CPUs, you would think the compilers for both rely heavily on the same underlying AMD-provided technology.

The only other theory is that the hypervisor in the Xbox One is terribly inefficient. If we assume, as you have, that the PS4 is still clocked at 1.6 GHz, with the Xbox One at 1.75 GHz, that means the PS4 is nearly 30% faster clock for clock in this test. Frankly, I find it far easier to believe the PS4 clock speed was set at 2 GHz for final hardware than to believe the Xbox One's virtual machine setup is so dismally inefficient.

Ultimately, we don't have enough information to say exactly why the Xbox One's CPU is slower, but we do now have evidence showing that's the case. It doesn't matter how this is achieved, the results are what is important. And the only reason this minor difference is even particularly notable is that Microsoft was claiming a CPU advantage on this very forum, and they've earned every bit of dirt that's been kicked in their face this year.

These are all good points, especially about the hypervisor, and it could be that the PS4 is clocked higher than 1.6GHz; however, I would caution against relying on benchmarks to determine clock speed. Until I see an official announcement, I am going to continue to believe it is 1.6GHz.

As for compilation, the code on PS4 was most likely compiled with gcc, while the code on the Xbox One was compiled with Microsoft's MSVC toolchain; however, this is just speculation.

One point, however:

The software in question is some kind of algorithmic texture generation system. Since the results it outputs are measured in tens of megabytes per second, and the whole point of procedural art generation is not having large art assets to begin with, it's unlikely bandwidth plays a large role in the results.

If each individual read and write is slower, the CPU could pipeline the operations differently, and the Xbox One could perform the work less efficiently than the PS4 even though it has the same silicon and a higher clock speed. Moreover, the Xbox One may be compensating for less efficient RAM by relying slightly more on branch prediction and doing more work to accomplish the same goal; however, this shouldn't make a terribly large difference.
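
A toy model makes the direction of this argument concrete: if compute time scales with clock speed but memory stalls do not, a faster-clocked core with slower memory access can still finish later. The latencies and counts below are invented for illustration and are not PS4 or Xbox One measurements. The model also ignores out-of-order execution, prefetching, and caches, which in real hardware hide much of this latency, consistent with the caveat that the difference shouldn't be large.

```python
def run_time_s(compute_cycles: float, clock_ghz: float,
               mem_accesses: float, mem_latency_ns: float) -> float:
    """Toy model: compute time shrinks with clock speed, memory stalls do not.

    Assumes every memory access stalls the core for the full latency with no
    overlap; real out-of-order cores hide much of this.
    """
    compute_s = compute_cycles / (clock_ghz * 1e9)
    stall_s = mem_accesses * mem_latency_ns * 1e-9
    return compute_s + stall_s


if __name__ == "__main__":
    work = dict(compute_cycles=1.6e9, mem_accesses=1e7)  # hypothetical workload
    core_a = run_time_s(clock_ghz=1.6, mem_latency_ns=50.0, **work)   # invented latency
    core_b = run_time_s(clock_ghz=1.75, mem_latency_ns=70.0, **work)  # invented latency
    print(f"core A (1.6GHz, 50 ns): {core_a:.3f} s")
    print(f"core B (1.75GHz, 70 ns): {core_b:.3f} s")
```

With these made-up numbers, core B's 9% clock advantage is outweighed by its longer stalls; with equal latency, the higher clock wins as expected.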

Apophis2036

Banned

Why doesn't someone just ask Yoshida on Twitter what the CPU is clocked at? He will prob answer, as he is a total boss.

serversurfer

Member

I was gonna do that, but I forgot.

We should all just tweet-bomb him until he replies.

I have never met someone as close-minded as this before. Even when you're wrong, you still believe you're right. Wow, amazing. Your whole argument is based on an assumption. If you did go to university and take anything related to computer science or engineering, you would have been required to take several logic courses. Based on your arguments, I'm going to go ahead and say that there is no way you're an engineer. Your logic is broken and your arguments are based on an assumption. This assumption has been proven false by the information in the OP. I'm just finishing up my comp sci degree, but if there is one thing I can guarantee, it's that math does not lie when it relates to computers. We do not know the details of the test, but we do know that even if assumptions are made, the worst possible outcome is one where both processors have the same speed.

The thing I find funny is that you will continue to believe the 1.6 until official numbers are released. 1.6 is not an official number but an assumed figure. So what you are essentially saying is that you will continue to believe an assumption over new information verified by mathematics until an official figure is released.

Edit:

I almost forgot about the second part of your paragraph, which is a complete joke. It is a paragraph filled with assumptions that are used to justify other assumptions. In your argument, you have not presented one fact. It's essentially false until proven true. It's a theory and nothing more. Until you have facts, you should stop arguing.

iceatcs

Junior Member

I actually believe 1.6GHz was only ever confirmed by MS, for the One's CPU, in connection with its upclock.

All part of game journalists' willingness to make stories out of half-information.

Even so, Sony said nothing.

Game journalists should be really embarrassed. They are supposed to get the information, not just see it.

serversurfer

Member

Yeah, all from a single rumor that came out 10 months before launch, but it's gospel until Sony says otherwise, benchmarks be damned. =/

symmetrical

Member

Every source I can find online claims the PS4 is clocked at 1.6GHz and the Xbox One at 1.75GHz. Since the PS4's CPU modules on the APU are THE SAME SILICON as the Xbox One's, the Xbox One's CPU will perform better than the PS4's in a vacuum (without considering OS, memory, etc.) by a small margin. If these facts are indeed wrong (please provide a source if so), then you may be right.

As for my 'argument,' it would apply more to the Xbox One than the PS4, because the Xbox One APUs are larger and more costly to produce, especially due to the ESRAM. My point was that Microsoft and Sony both took AMD's chip yields into consideration. For example, if 80% of APUs could be clocked at 1.75GHz, that yield might have been good enough for Microsoft, but Sony wanted a 90% chip yield and opted for the lower clock speed to save costs.
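
The yield trade-off in that example can be sketched as a simple count over per-die test results. The ten maximum-stable-clock values below are invented so the output matches the hypothetical 80% and 90% figures above. They are not real AMD data, and `yield_at` is an illustrative name.

```python
from typing import Sequence


def yield_at(target_ghz: float, max_stable_ghz: Sequence[float]) -> float:
    """Fraction of tested dies whose maximum stable clock meets the target."""
    if not max_stable_ghz:
        raise ValueError("need at least one tested die")
    passing = sum(1 for f in max_stable_ghz if f >= target_ghz)
    return passing / len(max_stable_ghz)


# Invented test results for ten dies, in GHz (not real data).
SAMPLE_MAX_GHZ = [1.55, 1.70, 1.76, 1.78, 1.80, 1.82, 1.85, 1.88, 1.90, 2.00]

if __name__ == "__main__":
    for target in (1.6, 1.75):
        print(f"{target}GHz target: {yield_at(target, SAMPLE_MAX_GHZ):.0%} yield")
```

Lowering the target clock can only keep or raise the fraction of usable dies, which is the cost argument being made for Sony's choice.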

The 'math' does not prove anything. A benchmark is not conducted in a vacuum. Unless the benchmark runs without an operating system and does not use memory (using only the CPU registers and on-chip caches), it cannot possibly be testing CPU performance alone. And from all the data I have seen, since the Xbox One's CPU module is THE SAME SILICON as the PS4's and runs at a higher clock, it will outperform the PS4's CPU by a small margin.

How? As an engineer, I would like to know the exact methodology behind this test. Does it not run on an operating system? How does it not use RAM? Was the test small enough to run entirely in cache, and if so, how did the operating system not interfere? I did not see that information in the OP, so I am skeptical of this assumption.

For all we know, all this benchmark is proving is that the FreeBSD operating system kernel used on the PS4 outperforms the Windows operating system kernel used on the Xbox One. Biiiiig surprise there...

There is a caveat to all of this. If the Xbox One has disabled more CPU cores than the PS4 in order to improve chip yields, this could also mean the PS4's lower-clocked CPU would outperform it.

Hasn't it already been revealed that the PS4's APU is different from the X1's, seeing as the X1's APU is designed with ESRAM on the die?

Or are you saying only the CPU portion itself is the same?

That makes sense, but do keep in mind that AMD only helps Sony and MS design the chips, while the actual supply comes from GlobalFoundries, I believe.

Kocolino67

Member

MS played that card and it wasn't even true.

Wait, that wasn't true?

Most powerful console? No!

It's true if you preordered a PS4.

Kocolino67

Member

Phew, I was just worried about the achievement.

Needs more expounding. We're getting into compiler differences now? And also how RAM affects CPUs, etc.? Just saying this post needs a lot more explanation (not saying you're wrong, but for meaningful discussion, more technical details need to be put forth).

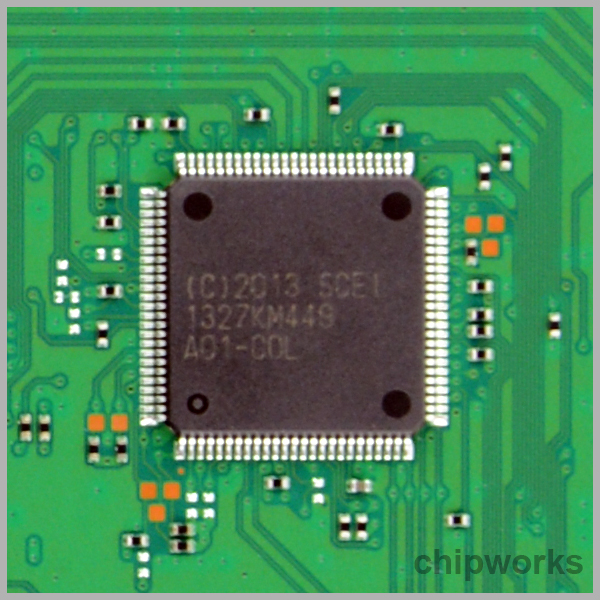

Maybe whatever the hell this is boosts the CPU by 25% when running the Substance Engine, because it's some kinda co-processor or accelerator?

Context? Is that the extra chip they have for handling video compression on the fly, downloading, etc.?

Needs more expounding. We're getting into compiler differences now?

dude, PS4 is using -funroll-loops

Xbone needs to read up on the documentation, it's -O3 the letter, not -03 the number.

The number of 3s in your post seems to suggest you are an insider who confirms HL3 exists!!! I'm off to make a new topic.

No. No one has any idea what the chip I posted is for right now, but it's connected to the main APU. I think you're talking about the secondary chip.

Before anyone calls me an Xbox One fanboy: I'm a PS4 owner and do not have an Xbox One; however, I plan to get one when Microsoft drops the price and takes Kinect out of the box.

Wow, this discussion is still going on? I hate to burst everyone's bubble; however, the Xbox One and PS4 CPU modules on the APU are most likely THE SAME HARDWARE clocked at different speeds. AMD would not design two different CPUs with the exact same purpose. And for those of you who will say something like "but the PS4 has more graphics cores" or "but the Xbox One has ESRAM," know this: the APUs are modular in their design, and just because one APU has something different does not mean the individual modules comprising it are any different.

Most people don't understand this, but CPUs are expensive to make. AMD and Intel only produce a few different CPU designs every year, if that. All of the different clock speeds are actually the same hardware. After a CPU is manufactured, assuming it is not dead on arrival, it is tested to see what clock speed it will reliably run at. Also, since the demand for lower-end CPUs tends to be greater than the demand for higher-end CPUs, CPUs that test capable of higher speeds are often sold underclocked.

http://www.tomshardware.com/picturestory/514-27-intel-cpu-processor-core-i7.html

"Based on the test result of class testing processors with the same capabilities are put into the same transporting trays. This process is called "binning," a process with which many Tom's Hardware readers will be familiar. Binning determines the maximum operating frequency of a processor, and batches are divided and sold according to stable specifications."

The PS4 CPU module is probably clocked at a slightly lower speed to improve chip yields. Selling the same chip at many different clock speeds works for desktop CPUs; however, there will not be a 1.75GHz PS4 and a 1.6GHz PS4, obviously. Therefore, any APUs that are unable to reliably run at the PS4's clock speed will have to be discarded. This is a large expense, because the APU also houses the graphics hardware, which would explain why Sony was prudent with their CPU clock speed. I repeat: they are the EXACT SAME CPU modules clocked at different speeds.
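
The binning process described in the Tom's Hardware quote, and why a single-clock console has only one usable bin, can be sketched as follows. This is a simplified illustration with hypothetical names and values, not how AMD or any foundry actually sorts parts; real binning also considers voltage, power, and defective units.

```python
from typing import Sequence


def bin_dies(max_stable_ghz: Sequence[float],
             grades_ghz: Sequence[float]) -> tuple[dict[float, list[int]], list[int]]:
    """Assign each die to the highest speed grade it can sustain.

    Returns (trays, rejects): trays maps each grade to the die indices placed
    in it; rejects lists dies that cannot meet even the lowest grade.
    """
    grades = sorted(grades_ghz, reverse=True)
    trays: dict[float, list[int]] = {g: [] for g in grades}
    rejects: list[int] = []
    for die_id, fmax in enumerate(max_stable_ghz):
        for grade in grades:
            if fmax >= grade:
                trays[grade].append(die_id)
                break
        else:  # no grade matched: the die is discarded
            rejects.append(die_id)
    return trays, rejects


if __name__ == "__main__":
    dies = [1.55, 1.70, 1.80, 1.95]  # hypothetical test results (GHz)
    # Desktop-style: several speed grades, each sold as a different product.
    print(bin_dies(dies, [1.6, 1.75, 1.9]))
    # Console-style: one fixed clock, so anything below it is discarded.
    print(bin_dies(dies, [1.6]))
```

In the console case every passing die lands in the same tray regardless of how fast it could run, so the only lever left is where that single threshold is set.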

The only logical explanation for the benchmark on the PS4 outperforming the Xbox One is that the Xbox One's operating systems are greedier and reserve more of the hardware for themselves. Perhaps there is also a slight advantage to the PS4 when writing to and reading from RAM.

I'm sure you feel you have made a point....

What was it?

He's trying to say even though the CPU is slower, it could be faster...

Or something.

So, basically the PS4 has either a) higher GHz OR b) more cores enabled?

#Team7Cores

Even 7 cores at 1.6GHz wouldn't explain the ~17% advantage over the Xbox One's 6 cores at 1.75GHz; that would only be about a 7% advantage.

It's closer to what 8 cores at 1.6GHz would be vs 6 cores at 1.75GHz.
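
The core-count arithmetic in the last two posts can be checked directly. This sketch assumes throughput scales perfectly with cores times clock and that per-clock performance is identical on both machines, which is exactly the assumption under debate. It uses the thread's ~17% figure and the 6-core, 1.75GHz Xbox One baseline; the function names are illustrative.

```python
def aggregate_ghz(cores: int, clock_ghz: float) -> float:
    """Total core-GHz available to a game, assuming linear scaling."""
    return cores * clock_ghz


def ps4_advantage(ps4_cores: int, ps4_ghz: float,
                  xb1_cores: int = 6, xb1_ghz: float = 1.75) -> float:
    """Fractional PS4 lead implied by core counts and clocks alone."""
    return aggregate_ghz(ps4_cores, ps4_ghz) / aggregate_ghz(xb1_cores, xb1_ghz) - 1.0


if __name__ == "__main__":
    observed = 0.17  # ~17% lead cited in the thread
    for cores in (6, 7, 8):
        print(f"{cores} cores @ 1.6GHz: {ps4_advantage(cores, 1.6):+.1%} "
              f"(observed ~{observed:.0%})")
```

Seven cores give about +6.7% and eight about +21.9%, so under these assumptions the observed ~17% does sit closer to the eight-core case.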