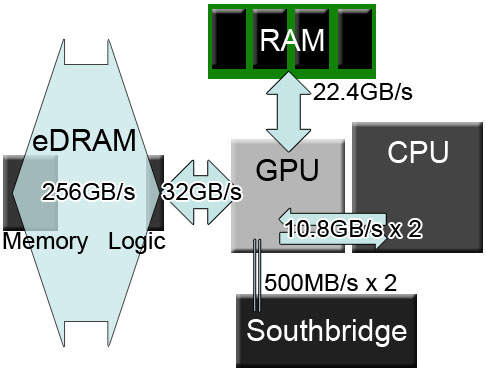

I can see why it would be a hassle: managing so many different areas with slightly different latencies could cause a headache. That is not how you make a memory system simple and user-friendly.

Nothing has suggested the other 10MB is developer-accessible. It may simply reduce other memory accesses invisibly as a victim buffer, or serve another purpose. Everything I've seen has indicated that the 32MB is under developer control.

That kind of complication is also exactly what the Wii U subsystem you were just praising does a lot of: it has a 32MB chunk, a 2MB chunk with different latencies, and a further 1MB chunk with different latencies again.

My perspective, though, is that for its performance the Wii U has the best memory setup, and it is similar to Intel's "Iris" configuration, the difference being that the CPU is not on the same die (though again, the CPU can still read/write this memory, which is hugely important for some special tasks like GPGPU).

There are two big distinctions between Iris Pro and the consoles. The first is that Iris Pro has an automatically managed cache that greatly reduces demands on main memory without developer interaction. This is great for automatically increasing performance in all games, but the consoles don't do this, presumably for performance-consistency reasons; they give control to developers instead, with no automatic caching.
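To make that first distinction concrete, here is a toy model (a sketch, not how either Intel or the consoles actually implement it). `ManagedCache` fills and evicts on its own with LRU replacement, while `Scratchpad` only holds what the developer explicitly places in it. The class names, the LRU policy and the buffer sizes in the usage note are all hypothetical.

```python
from collections import OrderedDict


class ManagedCache:
    """Hardware-style cache: every access is checked and filled automatically (LRU eviction)."""

    def __init__(self, capacity_lines: int):
        self.capacity = capacity_lines
        self.lines = OrderedDict()  # address -> True, ordered from least to most recently used
        self.hits = 0
        self.misses = 0

    def access(self, addr: int) -> bool:
        if addr in self.lines:
            self.lines.move_to_end(addr)  # mark as most recently used
            self.hits += 1
            return True
        self.misses += 1
        self.lines[addr] = True  # fill on miss, no programmer involvement
        if len(self.lines) > self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        return False


class Scratchpad:
    """Developer-managed embedded memory: nothing lives here unless explicitly placed."""

    def __init__(self, capacity_mb: int):
        self.capacity_mb = capacity_mb
        self.used_mb = 0
        self.resident = set()

    def place(self, name: str, size_mb: int) -> bool:
        # The developer decides what goes in; placement fails if it does not fit.
        if self.used_mb + size_mb > self.capacity_mb:
            return False
        self.used_mb += size_mb
        self.resident.add(name)
        return True

    def is_fast(self, name: str) -> bool:
        return name in self.resident
```

For example, `ManagedCache(2)` accessed with `1, 2, 1, 3, 1` ends with 2 hits and 3 misses without anyone deciding anything, whereas `Scratchpad(32)` accepts a hypothetical 16MB framebuffer and 8MB depth buffer but refuses 12MB of textures, and that refusal is the developer's problem to plan around. The trade-off is predictability: the scratchpad never surprises you, the cache never needs you.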

The second difference, interestingly, is that the Iris Pro Crystalwell memory does not actually store framebuffers at all. Rather, it is focused on caching assets before they are needed.

Intel said a GPU would need 170GB/s of memory bandwidth to equal what Iris Pro gets from its eDRAM plus DDR3.

Also, as you said, the CPU can use it, but there it acts as a victim buffer for anything that spills out of L3, again automatically, and again great for increasing the performance of everything. The consoles (the Wii U alone, in this case) again want consistency and developer control, so using it as an in-between scratchpad is manual.
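For anyone unfamiliar with the term, a victim buffer only receives lines that get evicted from the cache above it, so a recently evicted line can be recovered without going all the way to DRAM. Here is a minimal sketch assuming LRU replacement at both levels and line-granular addresses; the class name and sizes are made up for illustration and this is not Intel's actual design.

```python
from collections import OrderedDict


class CacheWithVictimBuffer:
    """Toy model: a small LRU 'L3' whose evicted lines spill into a larger LRU victim buffer."""

    def __init__(self, l3_lines: int, victim_lines: int):
        self.l3 = OrderedDict()
        self.victim = OrderedDict()
        self.l3_lines = l3_lines
        self.victim_lines = victim_lines

    def access(self, addr: int) -> str:
        """Return where the line was found: 'l3', 'victim', or 'dram'."""
        if addr in self.l3:
            self.l3.move_to_end(addr)  # refresh LRU position
            return "l3"
        if addr in self.victim:
            del self.victim[addr]  # promote the line back into L3
            self._insert_l3(addr)
            return "victim"
        self._insert_l3(addr)  # miss in both: fetch from main memory
        return "dram"

    def _insert_l3(self, addr: int) -> None:
        self.l3[addr] = True
        if len(self.l3) > self.l3_lines:
            evicted, _ = self.l3.popitem(last=False)
            # Only lines that spill out of L3 ever enter the victim buffer.
            self.victim[evicted] = True
            if len(self.victim) > self.victim_lines:
                self.victim.popitem(last=False)
```

With `CacheWithVictimBuffer(2, 4)`, the access sequence `1, 2, 3, 1` goes to DRAM three times, but the final access to `1` is served from the victim buffer because it had only just been pushed out of L3. None of that requires the programmer to do anything, which is exactly the "automatic" part.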

The slower Wii U RAM is fast enough for assets, but you can't use main memory for GPU operations to speed up performance, which is the main reason you'd want embedded memory on the chip rather than across a bandwidth-limited bridge anyway. The latency is too high to help with individual frames. However, you can brute-force past this, which is what PS4 does with over twice XBone's main-memory bandwidth. Still, if programmed without care, that approach can lead to frame drops that XBone's ESRAM avoids by feeding the GPU (since PS4's memory has much higher latency than the on-die embedded memory of either other console).
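A rough way to see the "brute force" argument is to divide peak bandwidth by frame rate to get a per-frame byte budget. The figures below are the commonly quoted peak main-memory numbers (about 176GB/s GDDR5 for PS4, about 68.3GB/s DDR3 for XBone); treat them as approximate, and note this ignores contention, CPU traffic and real-world efficiency, and leaves out ESRAM entirely.

```python
def bytes_per_frame(bandwidth_gb_s: float, fps: float) -> float:
    """Peak bytes that can cross the bus in one frame (ignores contention and efficiency losses)."""
    return bandwidth_gb_s * 1e9 / fps


# Commonly quoted peak main-memory bandwidths in GB/s; treat as approximate.
PEAK_BW = {
    "PS4 (GDDR5)": 176.0,
    "XBone (DDR3)": 68.3,
}

for name, bw in PEAK_BW.items():
    for fps in (30, 60):
        gb = bytes_per_frame(bw, fps) / 1e9
        print(f"{name} @ {fps} fps: {gb:.2f} GB per frame at peak")
```

At 60 fps that works out to roughly 2.9GB versus 1.1GB of main-memory traffic per frame at peak, which is the headroom PS4 leans on in place of an on-die pool.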

Not sure where you're getting this. GPUs are by design highly latency-tolerant. And frames take 16-33 milliseconds, while memory latencies are measured in single- to low-double-digit nanoseconds, even with GDDR5. You could absolutely fetch assets from main memory in time for a frame.
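The arithmetic behind that is easy to check. This sketch counts how many fully serialized memory round trips fit in one frame, which is a deliberately pessimistic worst case since real GPUs keep many requests in flight at once. The latency values are illustrative assumptions rather than measurements; 500 ns is included as an intentionally pessimistic case to show the conclusion survives even if latency is far worse than stated.

```python
def frame_time_ns(fps: float) -> float:
    """Length of one frame in nanoseconds."""
    return 1e9 / fps


def serial_fetches_per_frame(fps: float, latency_ns: float) -> int:
    """How many back-to-back, fully dependent memory round trips fit in one frame.

    Worst case: each fetch waits for the previous one to finish. Real GPUs
    overlap many outstanding requests, so actual throughput is far higher.
    """
    return int(frame_time_ns(fps) // latency_ns)


# Illustrative latencies only (assumed, not measured).
for latency in (10, 50, 500):
    for fps in (30, 60):
        n = serial_fetches_per_frame(fps, latency)
        print(f"{fps} fps, {latency} ns latency: {n:,} serial fetches per frame")
```

Even at 60 fps with a 10 ns latency, more than 1.6 million strictly sequential fetches fit in a single frame, so per-access latency is not what decides whether assets arrive in time.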