http://www.extremetech.com/gaming/1...d-odd-soc-architecture-confirmed-by-microsoft

Much more at the link.

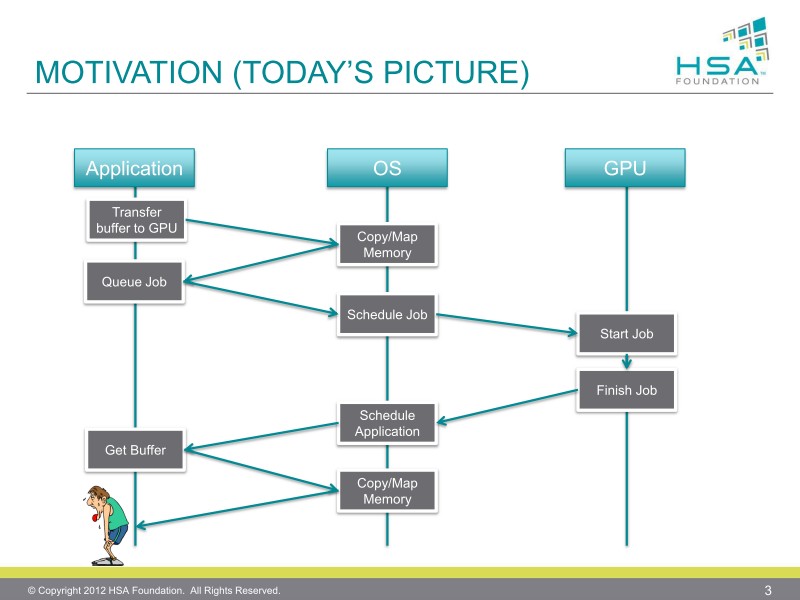

The Xbox One SoC appears to be implemented like an enormous variant of the Llano/Piledriver architecture we described for the PS4. One of our theories was that the chip would use the same Onion and Garlic buses. That appears to be exactly what Microsoft did.

The slide above is AMD's Llano/Piledriver diagram.

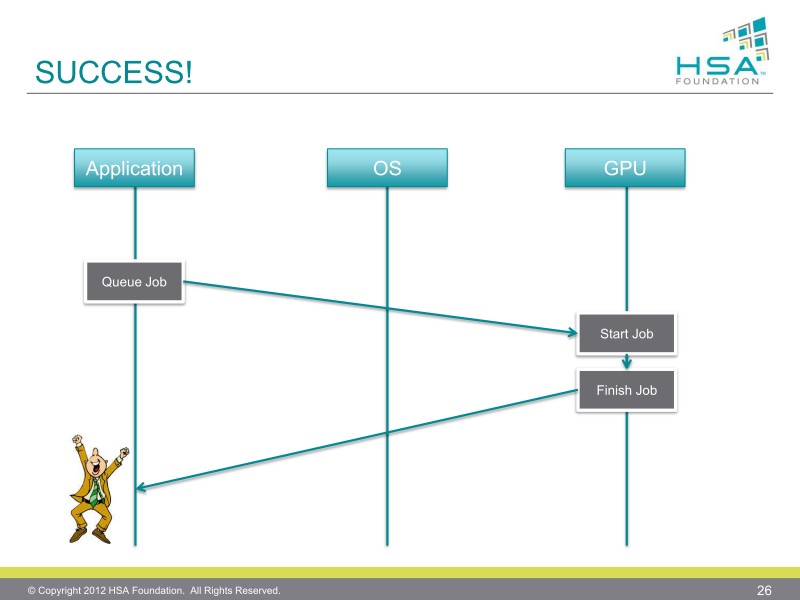

Here are the important points, for comparison's sake. The CPU cache block attaches to the GPU MMU, which drives the entire graphics core and video engine. Of particular interest for our purposes is this bit: "CPU, GPU, special processors, and I/O share memory via host-guest MMUs and synchronized page tables." If Microsoft is using synchronized page tables, this strongly suggests that the Xbox One supports HSA/hUMA and that we were mistaken in our earlier assertion to the contrary. Mea culpa.
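
To make "synchronized page tables" concrete, here is a toy Python model of the idea behind hUMA: if the CPU and GPU MMUs translate through the same page table, a virtual address handed from one to the other resolves to the same physical memory, so no copy is needed. This is only a conceptual sketch under stated assumptions: `PageTable`, `MMU`, and the 4 KiB page size are hypothetical, and nothing here describes how Microsoft actually implements its host-guest MMUs.

```python
from dataclasses import dataclass, field

PAGE_SIZE = 4096  # assumed 4 KiB pages, for illustration only


@dataclass
class PageTable:
    """Hypothetical page table: virtual page number -> physical frame number."""
    entries: dict[int, int] = field(default_factory=dict)

    def map(self, vpn: int, pfn: int) -> None:
        self.entries[vpn] = pfn


@dataclass
class MMU:
    """Hypothetical MMU that translates addresses through a (possibly shared) page table."""
    name: str
    table: PageTable

    def translate(self, vaddr: int) -> int:
        vpn, offset = divmod(vaddr, PAGE_SIZE)
        if vpn not in self.table.entries:
            raise LookupError(f"{self.name}: page fault at {vaddr:#x}")
        return self.table.entries[vpn] * PAGE_SIZE + offset


# Both MMUs point at the same table, so a mapping made once is seen by both.
shared = PageTable()
cpu = MMU("CPU", shared)
gpu = MMU("GPU", shared)

shared.map(0x10, 0x2A)            # map virtual page 0x10 to physical frame 0x2A
ptr = 0x10 * PAGE_SIZE + 0x123    # a "pointer" the CPU could hand to the GPU
print(hex(cpu.translate(ptr)))    # prints 0x2a123
print(hex(gpu.translate(ptr)))    # prints 0x2a123 (same physical address)
```

With separate, unsynchronized tables the GPU would need its own mapping (or a copy of the data) before it could use the CPU's pointer; sharing the table is what removes that step in this model.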

You can see the Onion and Garlic buses represented in both AMD's diagram and the Microsoft image above. The GPU has a non-cache-coherent bus connection to the DDR3 memory pool and a cache-coherent bus attached to the CPU. Bandwidth to main memory is 68GB/s over four 64-bit DDR3 links, or 36GB/s if traffic passes through the cache-coherent interface. Cache-coherent access is generally slower than non-coherent access, because coherent requests must be kept consistent with the CPU caches, so the discrepancy makes sense.
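
The 68GB/s figure follows from simple arithmetic: peak bandwidth is the bus width in bytes times the transfer rate. The sketch below assumes DDR3-2133 (2133 MT/s), which is not stated in the article; only the 4×64-bit width, the 68GB/s total, and the 36GB/s coherent figure come from the text. The function name `peak_gbs` is hypothetical.

```python
def peak_gbs(bus_bits: int, mega_transfers: float) -> float:
    """Theoretical peak bandwidth in GB/s (10**9 bytes/s) for a bus of the given width."""
    bytes_per_transfer = bus_bits / 8
    return bytes_per_transfer * mega_transfers * 1e6 / 1e9


# Four 64-bit DDR3 links form a 256-bit interface.
# Assumption: DDR3-2133, i.e. 2133 mega-transfers per second.
ddr3_peak = peak_gbs(bus_bits=4 * 64, mega_transfers=2133)
coherent = 36.0  # GB/s through the cache-coherent path, as quoted in the article

print(f"DDR3 peak: {ddr3_peak:.1f} GB/s")                    # prints DDR3 peak: 68.3 GB/s
print(f"coherent path: {coherent / ddr3_peak:.0%} of peak")  # prints coherent path: 53% of peak
```

Under that assumption, traffic routed through the coherent interface gets a little over half of the raw DDR3 bandwidth.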

The big-picture takeaway is that the Xbox One probably is HSA-capable, and its underlying architecture is very similar to a supercharged APU with much higher internal bandwidth than a normal AMD chip. That's a non-trivial difference: the 68GB/s of bandwidth devoted to Jaguar in the Xbox One comfortably exceeds the 51.2GB/s theoretical peak of the quad-channel DDR3-1600 memory that ships with an Intel X79 motherboard. For all the debates over the Xbox One's competitive positioning against the PS4, this should be an interesting micro-architecture in its own right.

There are still open questions regarding the ESRAM cache. Breaking it into four 8MB chunks is interesting, but doesn't tell us much about how those pieces will be used. If the cache really is 1024 bits wide, and developers can make suitable use of it, then the Xbox One's performance might surprise us.
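
The same width-times-rate arithmetic puts the X79 comparison and the 1024-bit ESRAM claim in numbers. This is a rough sketch under stated assumptions: DDR3-2133 for the Xbox One main memory and an 800 MHz clock with one transfer per cycle for the ESRAM are both hypothetical parameters, not figures from the article, and `peak_gbs` is an illustrative helper.

```python
def peak_gbs(bus_bits: int, mega_transfers: float) -> float:
    """Theoretical peak bandwidth in GB/s (10**9 bytes/s) for a bus of the given width."""
    return bus_bits / 8 * mega_transfers * 1e6 / 1e9


# Intel X79: quad-channel DDR3-1600, four 64-bit channels.
x79 = peak_gbs(bus_bits=4 * 64, mega_transfers=1600)
# Xbox One DDR3: same 256-bit width; assumption: DDR3-2133.
xbox_ddr3 = peak_gbs(bus_bits=4 * 64, mega_transfers=2133)
# ESRAM: the article's 1024-bit width; assumption: 800 MHz, one transfer per clock.
esram = peak_gbs(bus_bits=1024, mega_transfers=800)

print(f"X79 quad-channel DDR3-1600: {x79:.1f} GB/s")  # prints 51.2 GB/s
print(f"Xbox One DDR3:              {xbox_ddr3:.1f} GB/s ({xbox_ddr3 / x79:.2f}x X79)")  # 68.3 GB/s (1.33x X79)
print(f"1024-bit ESRAM @ 800 MHz:   {esram:.1f} GB/s")  # prints 102.4 GB/s
```

The ESRAM number scales linearly with whatever clock it actually runs at, which is why its real-world usefulness depends on details the article leaves open.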
