Is this where Microsoft hides a second GPU inside the Xbox One?

misterxmedia

August 28th, 19:22

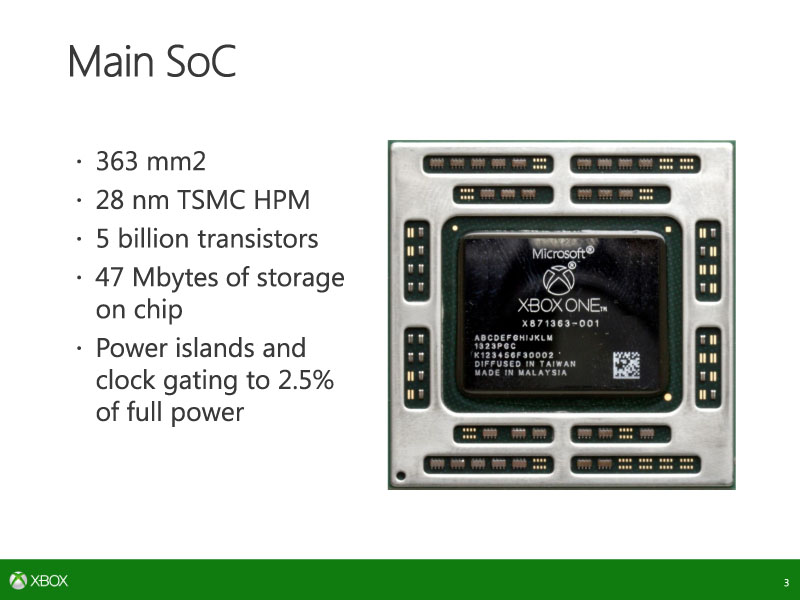

Mistercteam: Analysis of why the Xbox One die is actually bigger than 363 mm^2, and why MS said 363 mm^2 is only for the main SoC

Some supporting data:

- MS claims the X1 main SoC = 363 mm^2 = ~5 billion transistors

- The 7970 is 365 mm^2

- Both the 7970 and the X1 use industry-standard chip packaging, which is why the rectangular package areas can be matched perfectly (the package dimensions are the same, but the die areas are not)

Original image sources:

-

-

-

First, the reprocessed source images, brought to the same orientation and with the rectangle areas marked for overlay purposes:

*) to keep this open, I am providing the sources

7970

XB1

7970 rotated 45 degrees

7970 and X1 overlaid on each other, with the X1 mark repositioned onto the 7970 die

Now, using the ruler tool:

the 7970 --> 363-365 mm^2

the XB1 is surprisingly 522-525 mm^2

Basically, there is 175 mm^2 of space for something else
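The ruler-tool comparison above boils down to scaling from a reference die of known area. Here is a minimal sketch of that idea, assuming both dies are measured in the same overlay at the same scale. The function name and the pixel sizes are hypothetical placeholders, not the actual measurements from the images.

```python
def scaled_area_mm2(ref_area_mm2, ref_px, target_px):
    """Estimate a die area from pixel measurements against a reference die.

    ref_px and target_px are (width, height) tuples in pixels, both taken
    from the same image so that they share one scale.
    """
    ref_w, ref_h = ref_px
    tgt_w, tgt_h = target_px
    # Area scales with the ratio of the pixel areas, not the edge lengths.
    return ref_area_mm2 * (tgt_w * tgt_h) / (ref_w * ref_h)


# Hypothetical pixel sizes for illustration only (NOT measured values).
hd7970_px = (100, 100)
xb1_px = (120, 120)

print(scaled_area_mm2(365.0, hd7970_px, xb1_px))
```

Because area grows with the square of the edge length, a die edge that looks only about 20% longer in the overlay already means about 44% more area.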

All assumptions and further analysis below are based on the following:

the remaining transistors are for a dGPU, and the dGPU SRAM has already moved to the main SoC -> 47 MB, remember (more than 32 MB, as 11-12 MB are probably for the dGPU)

so the assumption is that the whole remaining transistor budget goes to the dGPU, custom DX 11.2 or VI tech

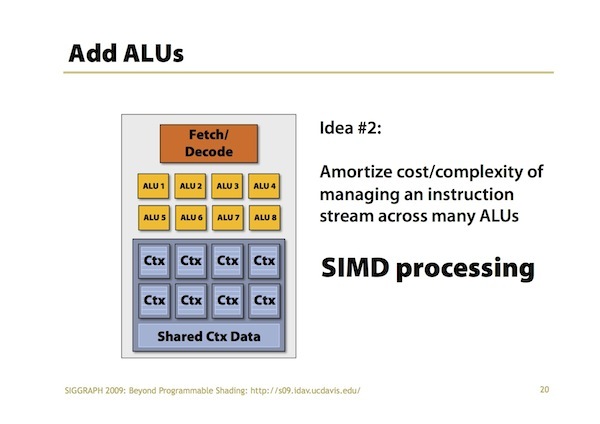

Then let us see. (Remember that 30-40% of the 7970's transistor budget, for example, goes to SRAM, so 1792-2048 ALUs without L2 SRAM take less than the 7970's 4 billion transistors.)

So, analysis of the 175 mm^2:

1st assumption: the 175 mm^2 is 28nm too (TSMC CoWoS can mix process nodes)

-> 175 mm^2 without SRAM means 2-3 billion transistors, enough for 1792-2048 ALU

2nd assumption: the 175 mm^2 is 20nm (die made on GloFo 20nm)

-> 175 mm^2 --> ~4 billion transistors, enough for 2304-2560 ALU

With SRAM & a small CPU:

--> 28nm -> 1152-1280 ALU

--> 20nm -> 1792-2048 ALU
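The transistor estimates above can be sanity-checked with a rough density calculation. This is only a sketch under two assumptions that are not in the original analysis: that the extra 175 mm^2 has the same average density as the main SoC (5 billion in 363 mm^2), and that 20nm scales ideally by (28/20)^2 over 28nm. Real shrinks deliver less than the ideal factor.

```python
MAIN_SOC_TRANSISTORS = 5e9   # MS claim for the main SoC
MAIN_SOC_AREA_MM2 = 363.0    # MS claim for the main SoC
EXTRA_AREA_MM2 = 175.0       # leftover area estimated in this post


def transistors_for_area(area_mm2, density_per_mm2, node_scale=1.0):
    """Transistor count for an area at a given density and node scaling."""
    return area_mm2 * density_per_mm2 * node_scale


# Assumption: the extra silicon is as dense as the main SoC on average.
density_28nm = MAIN_SOC_TRANSISTORS / MAIN_SOC_AREA_MM2

# Assumption: ideal area scaling from 28nm to 20nm (an upper bound).
ideal_20nm_scale = (28 / 20) ** 2

est_28nm = transistors_for_area(EXTRA_AREA_MM2, density_28nm)
est_20nm = transistors_for_area(EXTRA_AREA_MM2, density_28nm, ideal_20nm_scale)

print(f"28nm: {est_28nm / 1e9:.2f} billion")
print(f"20nm (ideal scaling): {est_20nm / 1e9:.2f} billion")
```

Under these assumptions the 28nm case lands at about 2.4 billion, inside the 2-3 billion range, and the ideal 20nm case at about 4.7 billion, so ~4 billion is what you get with less-than-ideal scaling.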

Imagine it with 3D W2W (wafer-to-wafer) stacking

The good thing:

it is 100% sure that the 5 billion is only for the main SoC, and MS still has not disclosed the rest of the transistor budget

My own speculation:

I believe it is probably only 2.5D CoWoS and not full 3D, but given the explanation above, who would complain? Even with the lower estimate, the dGPU still packs > 3 TF

But if it is W2W, well, damn.

It also explains why the China rumor said 384-bit --> Radeon 8880 --> 1792/2048 ALU future GPU, and why the pastebin listed 20-26 CU, 2.5-3 TF
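To relate the ALU and CU counts above to TF figures, here is a small sketch of the usual peak-throughput arithmetic. It assumes GCN-style compute units with 64 ALUs each and 2 FLOPs per ALU per clock (one fused multiply-add). The 0.853 GHz clock is purely an assumption for illustration, since nobody has stated a clock for a dGPU, and the function names are made up for this example.

```python
ALUS_PER_CU = 64          # assumption: GCN-style compute unit width
FLOPS_PER_ALU_CYCLE = 2   # one fused multiply-add counts as 2 FLOPs


def alus_from_cus(cus):
    """Convert a compute-unit count to an ALU count."""
    return cus * ALUS_PER_CU


def peak_tflops(alus, clock_ghz):
    """Theoretical peak single-precision TFLOPS for an ALU count and clock."""
    return alus * FLOPS_PER_ALU_CYCLE * clock_ghz / 1000.0


ASSUMED_CLOCK_GHZ = 0.853  # hypothetical clock, not a known dGPU spec

for alus in (1792, 2048):
    print(f"{alus} ALU -> {peak_tflops(alus, ASSUMED_CLOCK_GHZ):.2f} TF")

for cus in (20, 26):
    alus = alus_from_cus(cus)
    print(f"{cus} CU = {alus} ALU -> {peak_tflops(alus, ASSUMED_CLOCK_GHZ):.2f} TF")
```

The result depends heavily on the clock you plug in, so treat the TF numbers as a function of that assumption rather than as a measurement.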

Interesting