nextgeneration

Member

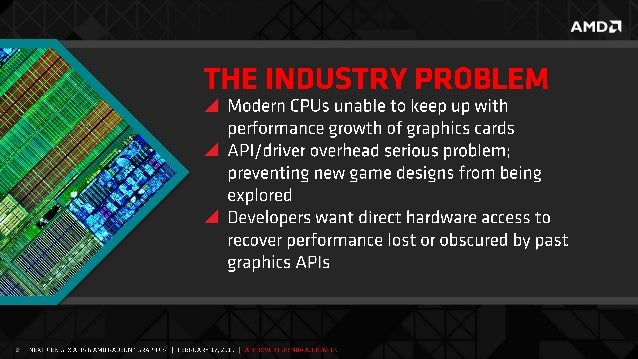

I've learned a lot about PCs and how they work over the past couple of years, but I must ask:

If I were to keep my i5 processor and get a Titan X, would it be a bad idea?

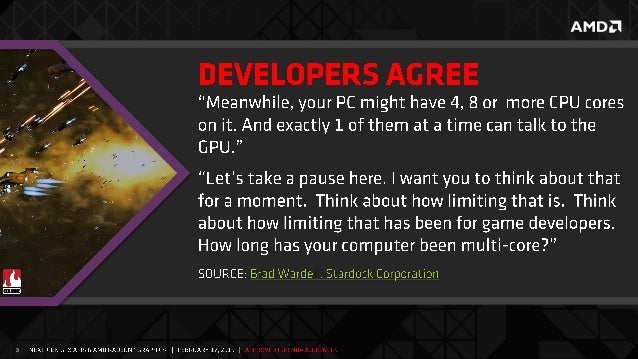

You definitely would not be using the Titan X to its full potential in that scenario. I went from an i7 975 Extreme to a 4770K, and with the same GPU (GTX 680), I saw significant gains in many games, all from just a CPU upgrade.