One of the things I like about NeoGAF is that new info doesn't have to be buried in megathreads thanks to Evilore's policy.

Anyway, Rösti posted this in the massive VGleaks thread, and since it has nothing to do with VGleaks and people might find it interesting, I'm giving it its own thread. Again, all credit and thanks go to Rösti.

http://appft1.uspto.gov/netacgi/nph-Parser?Sect1=PTO2&Sect2=HITOFF&p=1&u=%2Fnetahtml%2FPTO%2Fsearch-bool.html&r=2&f=G&l=50&co1=AND&d=PG01&s1=Microsoft.AS.&s2=Xbox&OS=AN/Microsoft+AND+Xbox&RS=AN/Microsoft+AND+Xbox

Rösti said: Another day, another patent. OK, I saw this while doing my daily patent search at USPTO (and OHIM). While I'm not 100% sure this relates to Durango, the description doesn't sound like Xbox 360 or the contemporary Kinect. But I'll post it here first instead of making a new thread, and let people more tech-savvy than me come up with a verdict. Most likely it's for the next generation Kinect, but it also (hopefully) describes Durango a bit. It's quite lengthy and includes many images, but I thought it would be better to include more rather than less.

United States Patent Application 20130027296

Kind Code A1

Klein; Christian ; et al. January 31, 2013

COMPOUND GESTURE-SPEECH COMMANDS

Rösti said: Abstract

A multimedia entertainment system combines both gestures and voice commands to provide an enhanced control scheme. A user's body position or motion may be recognized as a gesture, and may be used to provide context to recognize user generated sounds, such as speech input. Likewise, speech input may be recognized as a voice command, and may be used to provide context to recognize a body position or motion as a gesture. Weights may be assigned to the inputs to facilitate processing. When a gesture is recognized, a limited set of voice commands associated with the recognized gesture are loaded for use. Further, additional sets of voice commands may be structured in a hierarchical manner such that speaking a voice command from one set of voice commands leads to the system loading a next set of voice commands.

Inventors: Klein; Christian; (Duvall, WA) ; Vassigh; Ali M.; (Redmond, WA) ; Flaks; Jason S.; (Bellevue, WA) ; Larco; Vanessa; (Kirkland, WA) ; Soemo; Thomas M.; (Redmond, WA)

Assignee: MICROSOFT CORPORATION, Redmond, WA

Serial No.: 646692

Series Code: 13

Filed: October 6, 2012
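To make the hierarchical part of the abstract easier to picture, here is a minimal Python sketch of command sets where speaking one command loads the next set. This is only my illustration, not anything from the patent: the command names, the `COMMAND_TREE` layout and the `VoiceMenu` class are all made up, and plain string lookup stands in for real speech recognition.

```python
# Hypothetical command tree: each spoken command may unlock a next-level set.
# All command names are invented for illustration; none come from the patent.
COMMAND_TREE = {
    "play": {"movie": {}, "music": {"shuffle": {}, "next track": {}}},
    "share": {"friends": {}, "everyone": {}},
}


class VoiceMenu:
    """Tracks which set of voice commands is currently loaded."""

    def __init__(self, tree):
        self.root = tree
        self.active = tree  # the currently loaded command set

    def available(self):
        """List the commands in the loaded set, sorted for display."""
        return sorted(self.active)

    def speak(self, phrase):
        """Return True if the phrase is in the loaded set, then load the next set."""
        if phrase not in self.active:
            return False  # commands outside the loaded set are ignored
        next_set = self.active[phrase]
        # Assumption: a leaf command ends the interaction, so reset to the top level.
        self.active = next_set if next_set else self.root
        return True
```

With this sketch, saying "shuffle" at the top level is rejected, while saying "play" and then "music" loads the set that contains it.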

Rösti said: Claims

1. A method for controlling a computing system using voice commands, comprising: accessing multiple depth images from a depth sensor system; recognizing a gesture from the multiple depth images; in response to recognizing a gesture, choosing a subset of a set of sound commands based on the recognized gesture, the set of sound commands includes multiple subsets, each subset is associated with one or more gestures and sound command recognition data for the respective subset; receiving sound input; recognizing a sound command from the chosen subset based on the sound input; and performing an action in response to the recognized sound command.

11. The method of claim 1, wherein: the depth images include a two-dimensional pixel area of a captured scene, each pixel in the two-dimensional pixel area represents a depth value of one or more objects in the scene captured by the depth sensor system; the method further comprises displaying the chosen subset of sound commands in response to the recognizing the gesture; the sound input is received after displaying the chosen subset of sound commands; and the recognizing includes attempting to match the sound input to sound commands in the chosen subset and not to sound commands in other subsets of the set of sound commands.

12. A computing system, comprising: a monitor for displaying multimedia content; a depth sensor for capturing depth images; a microphone for capturing sounds; and a processor in communication with the depth sensor, the microphone and the monitor; the processor communicates with the monitor to display an object, the processor receives multiple depth images from the depth sensor and recognizes a gesture from the multiple depth images, the processor chooses a subset of a set of sound commands based on and in response to the recognized gesture, the set of sound commands includes multiple subsets, the processor loads sound command recognition data for the chosen subset of sound commands in response to the recognized gesture, the processor receives sound input from the microphone and recognizes a sound command from the chosen subset based on the sound input and the loaded sound command recognition data without searching all of the set of sound commands, processor performs an action in response to the recognized sound command.
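For anyone who prefers code to claim language, the flow in claims 1, 11 and 12 could be sketched roughly like this in Python. Everything named here is an assumption on my part: the gesture names, the command lists and the `CompoundCommandRecognizer` class are invented, gesture recognition from depth images is skipped entirely, and exact string matching stands in for the "sound command recognition data" the claims mention.

```python
from dataclasses import dataclass, field

# Hypothetical gesture names and command subsets, invented for illustration.
COMMAND_SUBSETS = {
    "point_at_object": ["play", "share", "delete"],
    "raise_hand": ["pause", "volume up", "volume down"],
}


@dataclass
class CompoundCommandRecognizer:
    subsets: dict
    loaded: list = field(default_factory=list)  # stand-in for loaded recognition data

    def on_gesture(self, gesture):
        """Load only the subset tied to the recognized gesture."""
        self.loaded = list(self.subsets.get(gesture, []))
        return self.loaded  # claim 11 also displays this subset to the user

    def on_sound(self, heard):
        """Match sound input against the loaded subset only, never the full set."""
        phrase = heard.strip().lower()
        return phrase if phrase in self.loaded else None
```

The point of the design, as I read the claims, is that the recognizer never searches the whole command set: "play" is not matched after a raised hand even though it exists in another subset.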

Rösti said: Detailed description:

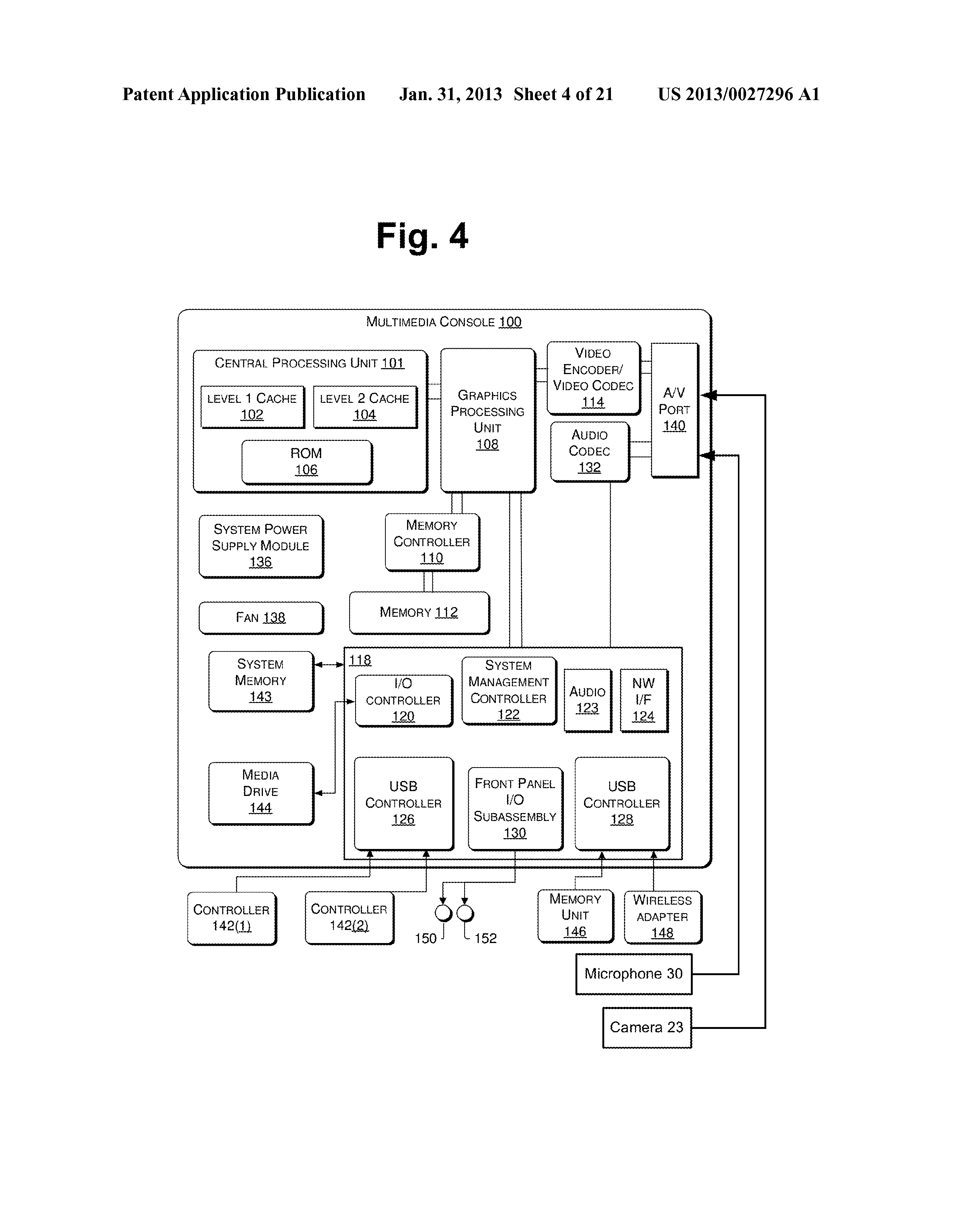

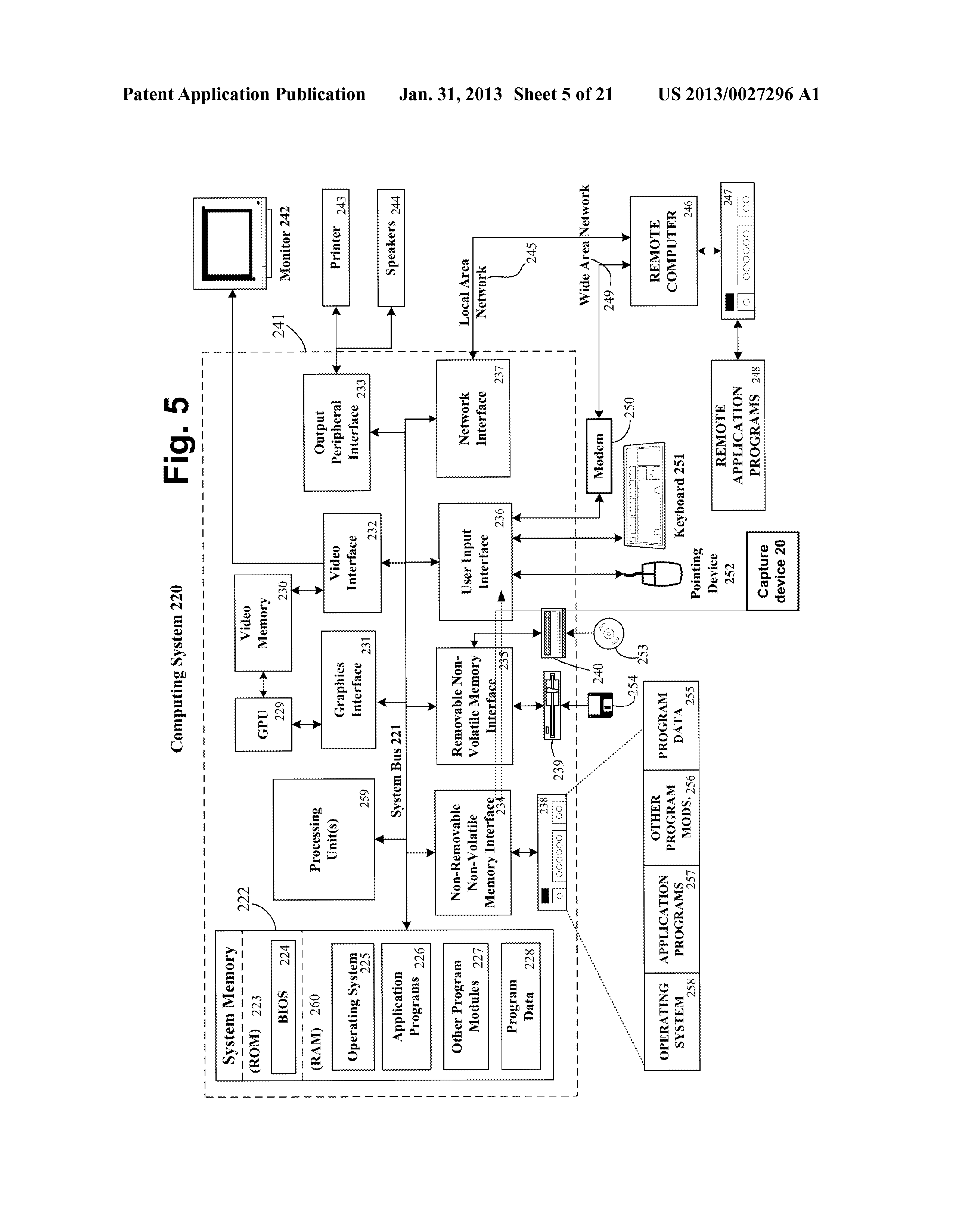

[0064] FIG. 4 illustrates one embodiment of the controller 12 shown in FIG. 1 implemented as a multimedia console 100, such as a gaming console. The multimedia console 100 has a central processing unit (CPU) 101 having a level 1 cache 102, a level 2 cache 104, and a flash ROM (Read Only Memory) 106. The level 1 cache 102 and a level 2 cache 104 temporarily store data and hence reduce the number of memory access cycles, thereby improving processing speed and throughput. The CPU 101 may be provided having more than one core, and thus, additional level 1 and level 2 caches 102 and 104. The flash ROM 106 may store executable code that is loaded during an initial phase of a boot process when the multimedia console 100 is powered on.

[0065] A graphics processing unit (GPU) 108 and a video encoder/video codec (coder/decoder) 114 form a video processing pipeline for high speed and high resolution graphics processing. Data is carried from the graphics processing unit 108 to the video encoder/video codec 114 via a bus. The video processing pipeline outputs data to an A/V (audio/video) port 140 for transmission to a television or other display. A memory controller 110 is connected to the GPU 108 to facilitate processor access to various types of memory 112, such as, but not limited to, a RAM (Random Access Memory).

[0066] The multimedia console 100 includes an I/O controller 120, a system management controller 122, an audio processing unit 123, a network interface controller 124, a first USB host controller 126, a second USB controller 128 and a front panel I/O subassembly 130 that are preferably implemented on a module 118. The USB controllers 126 and 128 serve as hosts for peripheral controllers 142(1)-142(2), a wireless adapter 148, and an external memory device 146 (e.g., flash memory, external CD/DVD ROM drive, removable media, etc.). The network interface 124 and/or wireless adapter 148 provide access to a network (e.g., the Internet, home network, etc.) and may be any of a wide variety of various wired or wireless adapter components including an Ethernet card, a modem, a Bluetooth module, a cable modem, and the like.

[0067] System memory 143 is provided to store application data that is loaded during the boot process. A media drive 144 is provided and may comprise a DVD/CD drive, Blu-Ray drive, hard disk drive, or other removable media drive, etc. The media drive 144 may be internal or external to the multimedia console 100. Application data may be accessed via the media drive 144 for execution, playback, etc. by the multimedia console 100. The media drive 144 is connected to the I/O controller 120 via a bus, such as a Serial ATA bus or other high speed connection (e.g., IEEE 1394).

[0068] The system management controller 122 provides a variety of service functions related to assuring availability of the multimedia console 100. The audio processing unit 123 and an audio codec 132 form a corresponding audio processing pipeline with high fidelity and stereo processing. Audio data is carried between the audio processing unit 123 and the audio codec 132 via a communication link. The audio processing pipeline outputs data to the A/V port 140 for reproduction by an external audio user or device having audio capabilities.

[0069] The front panel I/O subassembly 130 supports the functionality of the power button 150 and the eject button 152, as well as any LEDs (light emitting diodes) or other indicators exposed on the outer surface of the multimedia console 100. A system power supply module 136 provides power to the components of the multimedia console 100. A fan 138 cools the circuitry within the multimedia console 100.

[0070] The CPU 101, GPU 108, memory controller 110, and various other components within the multimedia console 100 are interconnected via one or more buses, including serial and parallel buses, a memory bus, a peripheral bus, and a processor or local bus using any of a variety of bus architectures. By way of example, such architectures can include a Peripheral Component Interconnects (PCI) bus, PCI-Express bus, etc.

[0071] When the multimedia console 100 is powered on, application data may be loaded from the system memory 143 into memory 112 and/or caches 102, 104 and executed on the CPU 101. The application may present a graphical user interface that provides a consistent user experience when navigating to different media types available on the multimedia console 100. In operation, applications and/or other media contained within the media drive 144 may be launched or played from the media drive 144 to provide additional functionalities to the multimedia console 100.

[0072] The multimedia console 100 may be operated as a standalone system by simply connecting the system to a television or other display. In this standalone mode, the multimedia console 100 allows one or more users to interact with the system, watch movies, or listen to music. However, with the integration of broadband connectivity made available through the network interface 124 or the wireless adapter 148, the multimedia console 100 may further be operated as a participant in a larger network community.

[0073] When the multimedia console 100 is powered ON, a set amount of hardware resources are reserved for system use by the multimedia console operating system. These resources may include a reservation of memory (e.g., 16 MB), CPU and GPU cycles (e.g., 5%), networking bandwidth (e.g., 8 kbs), etc. Because these resources are reserved at system boot time, the reserved resources do not exist from the application's view.

[0074] In particular, the memory reservation preferably is large enough to contain the launch kernel, concurrent system applications and drivers. The CPU reservation is preferably constant such that if the reserved CPU usage is not used by the system applications, an idle thread will consume any unused cycles.

[0075] With regard to the GPU reservation, lightweight messages generated by the system applications (e.g., pop ups) are displayed by using a GPU interrupt to schedule code to render popup into an overlay. The amount of memory required for an overlay depends on the overlay area size and the overlay preferably scales with screen resolution. Where a full user interface is used by the concurrent system application, it is preferable to use a resolution independent of application resolution. A scaler may be used to set this resolution such that the need to change frequency and cause a TV resynch is eliminated.

[0076] After the multimedia console 100 boots and system resources are reserved, concurrent system applications execute to provide system functionalities. The system functionalities are encapsulated in a set of system applications that execute within the reserved system resources described above. The operating system kernel identifies threads that are system application threads versus gaming application threads. The system applications are preferably scheduled to run on the CPU 101 at predetermined times and intervals in order to provide a consistent system resource view to the application. The scheduling is to minimize cache disruption for the gaming application running on the console.

[0077] When a concurrent system application requires audio, audio processing is scheduled asynchronously to the gaming application due to time sensitivity. A multimedia console application manager (described below) controls the gaming application audio level (e.g., mute, attenuate) when system applications are active.

[0078] Input devices (e.g., controllers 142(1) and 142(2)) are shared by gaming applications and system applications. The input devices are not reserved resources, but are to be switched between system applications and the gaming application such that each will have a focus of the device. The application manager preferably controls the switching of input stream, without the gaming application's knowledge, and a driver maintains state information regarding focus switches. For example, the cameras 26, 28 and capture device 20 may define additional input devices for the console 100 via USB controller 126 or other interface.

The bolded segments are what I find most interesting here, especially the audio processing unit (which I assume is not the same thing as the Xbox 360's hardware-accelerated audio decompression). 0073 is bolded just for reference, since it's a rather obvious thing for most (all?) operating systems.
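To put numbers on 0073, here is a small Python sketch of what "the reserved resources do not exist from the application's view" works out to. The reservation figures are the examples quoted in the patent text (16 MB, 5%), and I'm reading the 5% as applying to both CPU and GPU; the `Resources` type, the `application_view` function and the 512 MB console in the usage note are purely hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    memory_mb: int
    cpu_percent: float
    gpu_percent: float


# Example reservation figures quoted in [0073]; the bandwidth figure is left out.
RESERVED = Resources(memory_mb=16, cpu_percent=5.0, gpu_percent=5.0)


def application_view(physical, reserved=RESERVED):
    """Return what a game can see once the system's share is carved out at boot."""
    return Resources(
        memory_mb=physical.memory_mb - reserved.memory_mb,
        cpu_percent=physical.cpu_percent - reserved.cpu_percent,
        gpu_percent=physical.gpu_percent - reserved.gpu_percent,
    )
```

For a made-up console with 512 MB of RAM, `application_view(Resources(512, 100.0, 100.0))` gives the game 496 MB and 95% of CPU and GPU time.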

And lastly, the images, with description first. Note that many of these are just flowcharts of Kinect operations, but hardware is described here as well; note, for example, the appearance of the camera.

Rösti said: BRIEF DESCRIPTION OF THE DRAWINGS

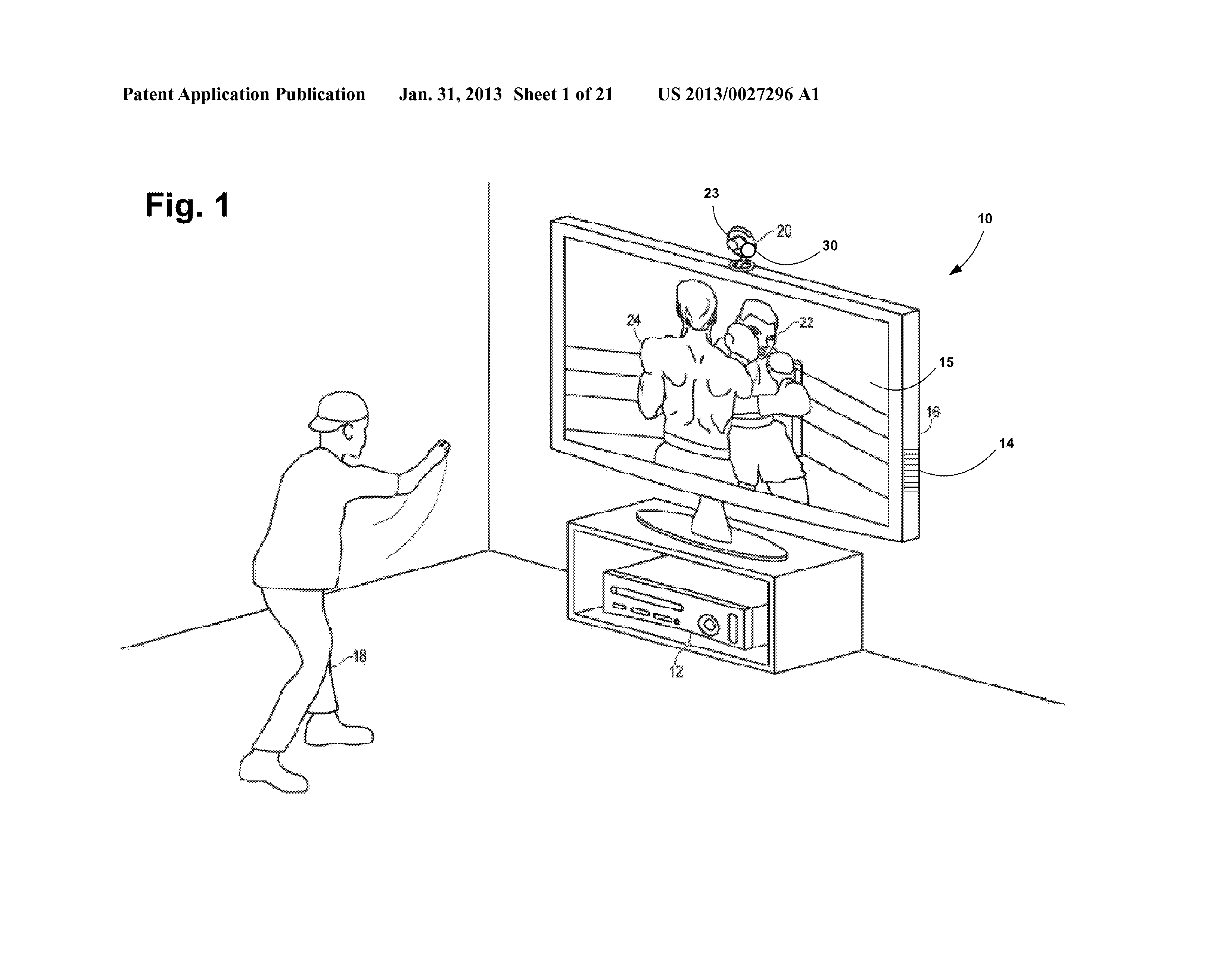

[0009] FIG. 1 illustrates a user in an exemplary multimedia environment having a capture device for capturing and tracking user body positions and movements and receiving user sound commands.

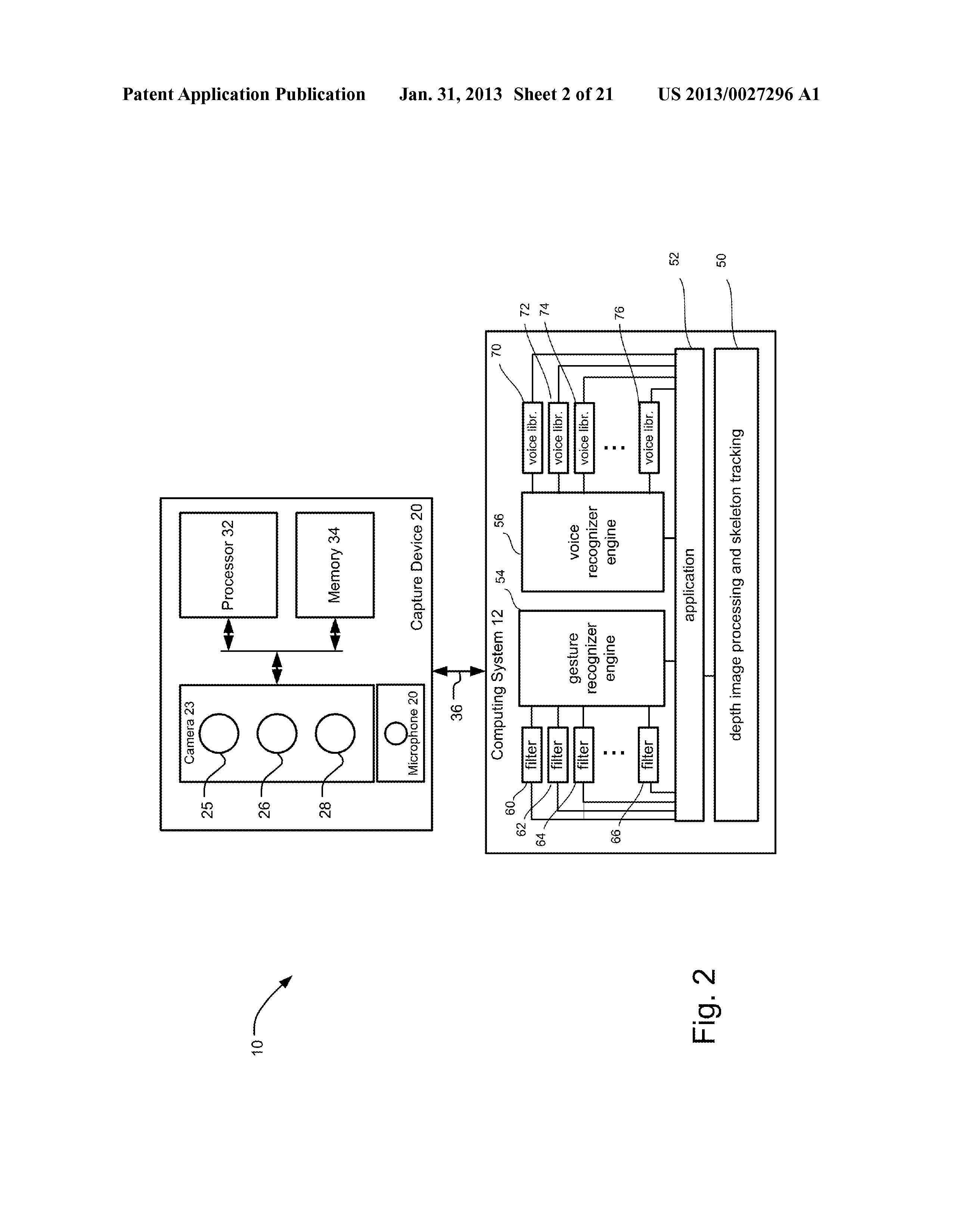

[0010] FIG. 2 is a block diagram illustrating one embodiment of a capture device coupled to a computing device.

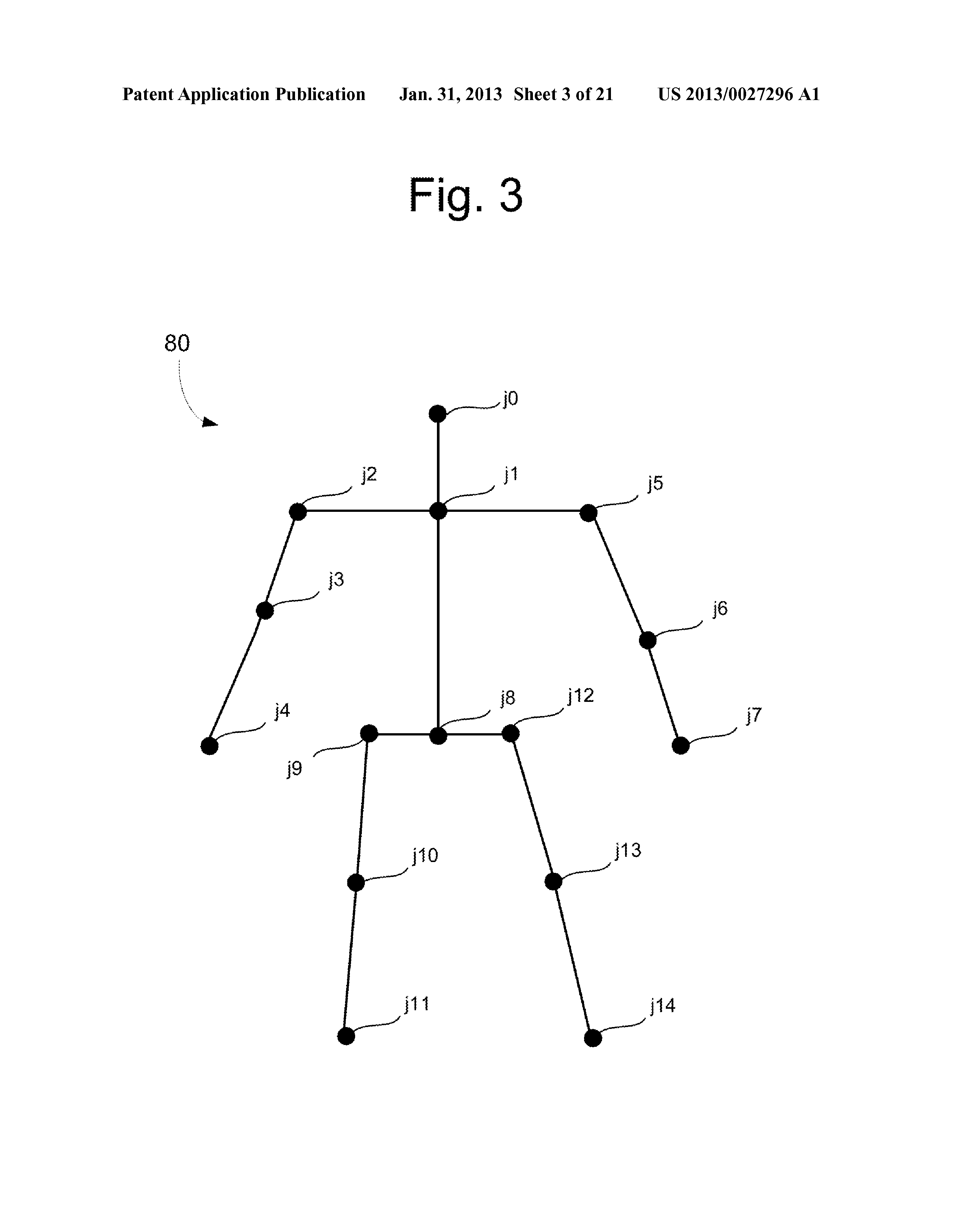

[0011] FIG. 3 is a schematic representation of a skeleton being tracked.

[0012] FIG. 4 is a block diagram illustrating one embodiment of a computing system for processing data received from a capture device.

[0013] FIG. 5 is a block diagram illustrating another embodiment of a computing system for processing data received from a capture device.

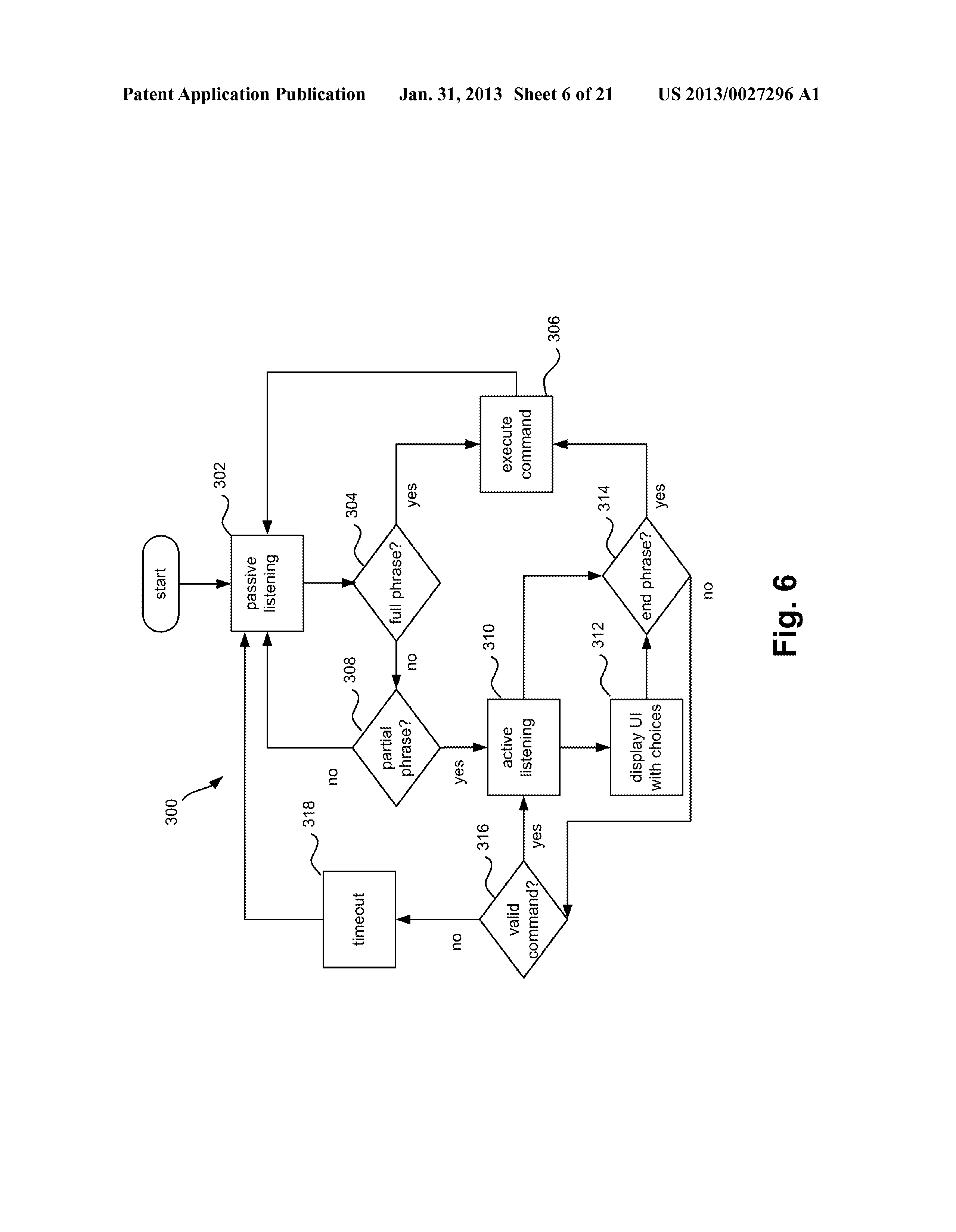

[0014] FIG. 6 is a flow chart describing one embodiment of a process for user interaction with a computing system using voice commands.

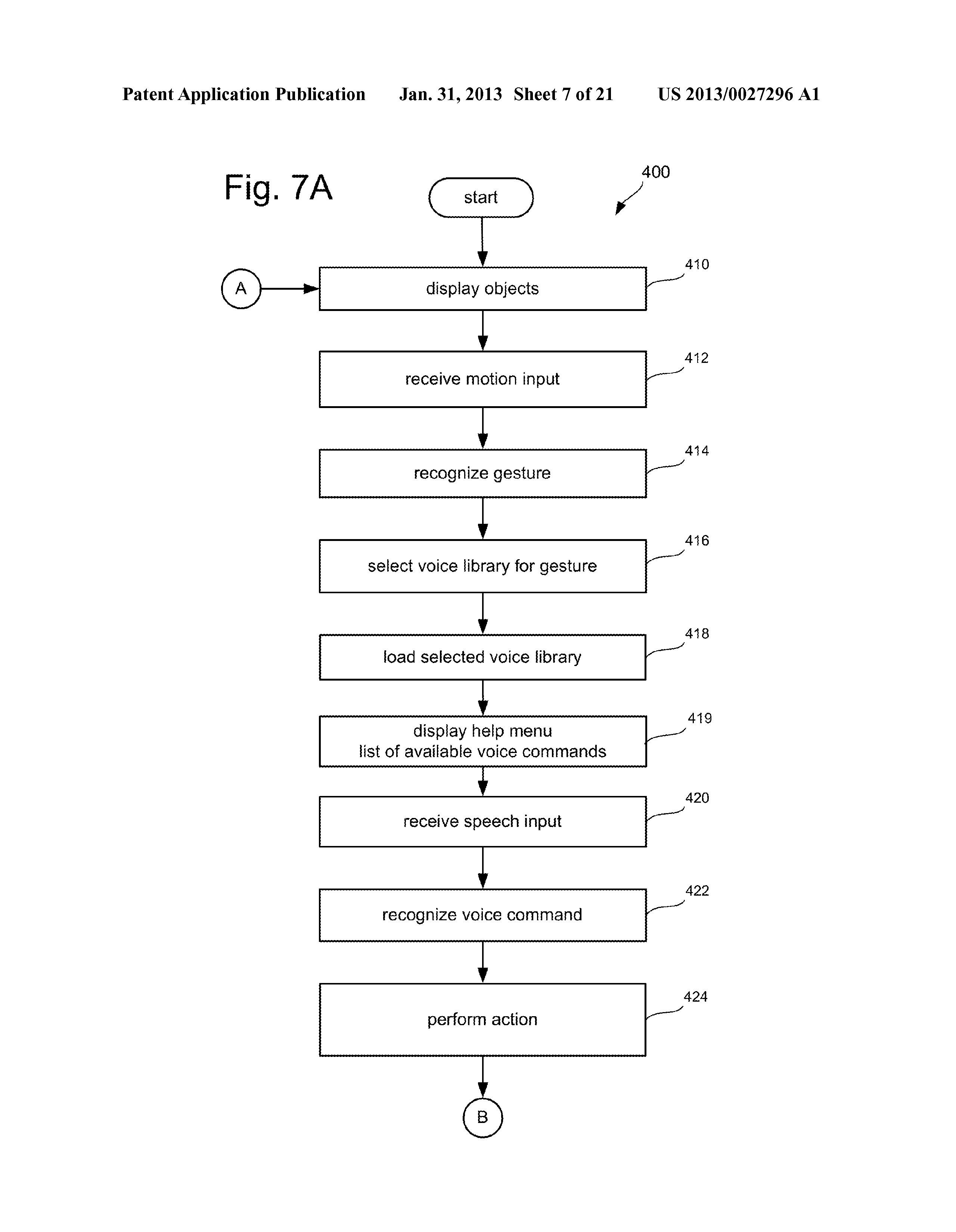

[0015] FIG. 7A is a flow chart describing one embodiment of a process for user interaction with a computing system using hand gestures and voice commands.

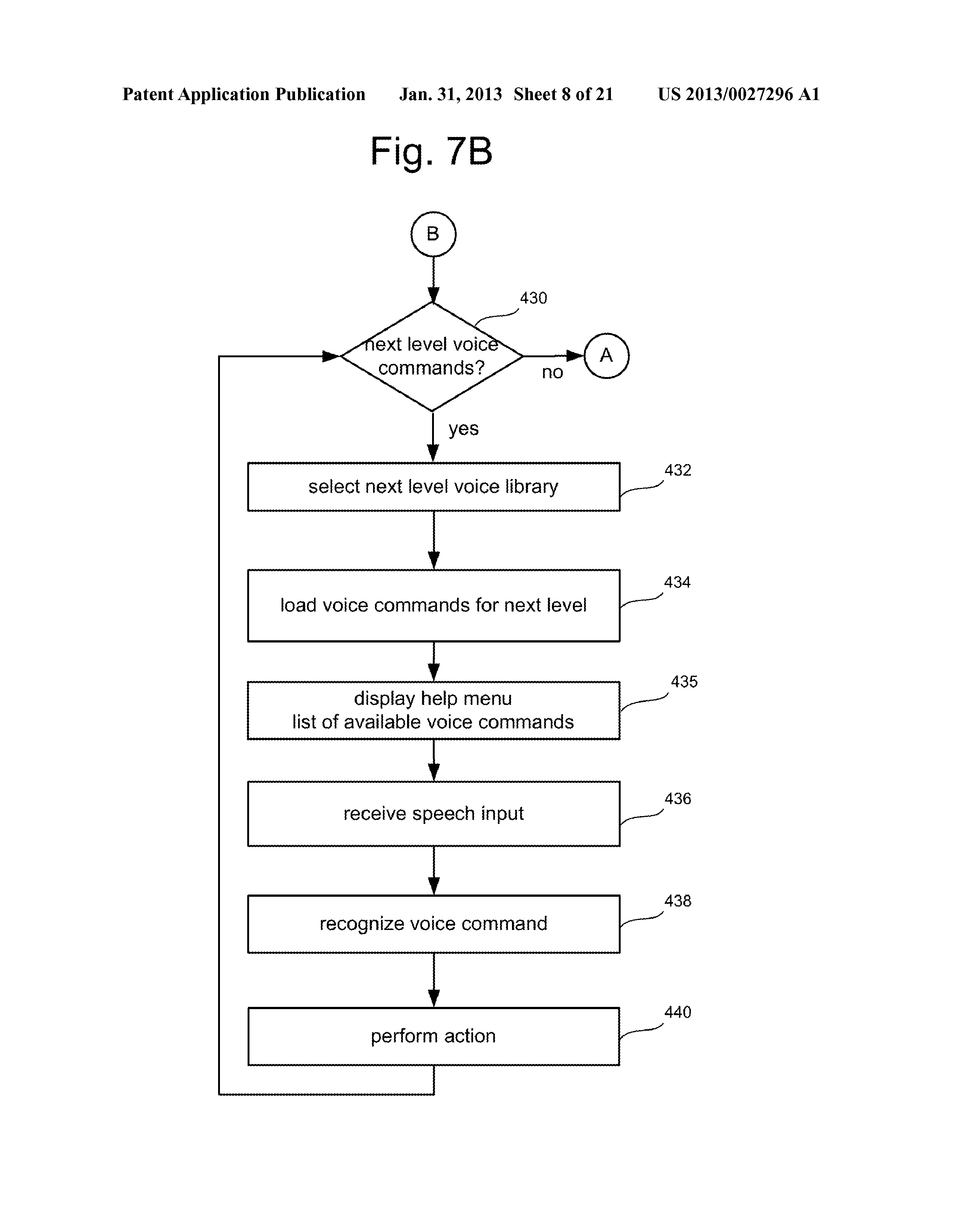

[0016] FIG. 7B is a flow chart describing further steps in addition to those shown in FIG. 7A for user interaction with a computing system using hand gestures and voice commands.

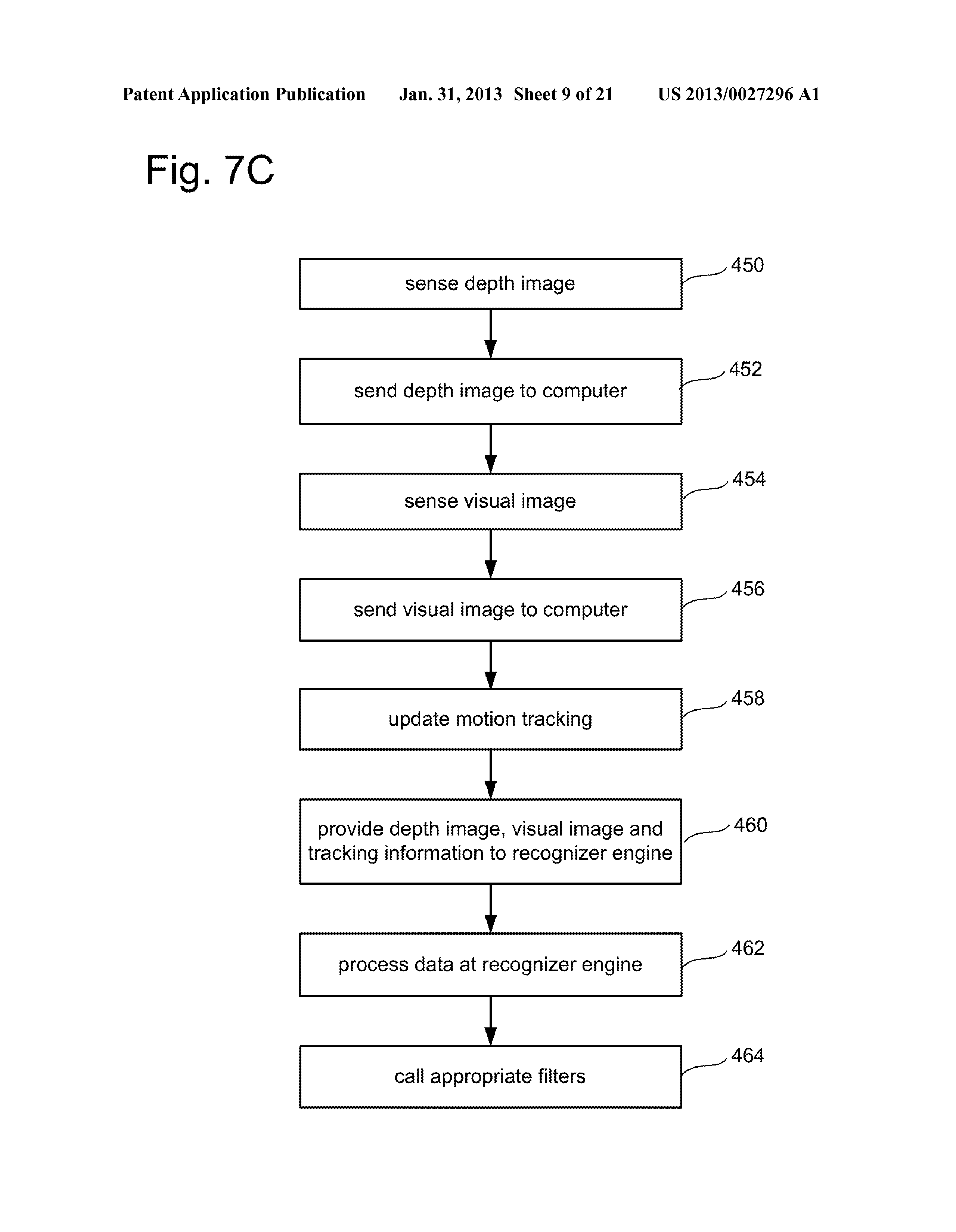

[0017] FIGS. 7C-7D are flow charts describing additional details for recognizing hand gestures in the process shown in FIG. 7A.

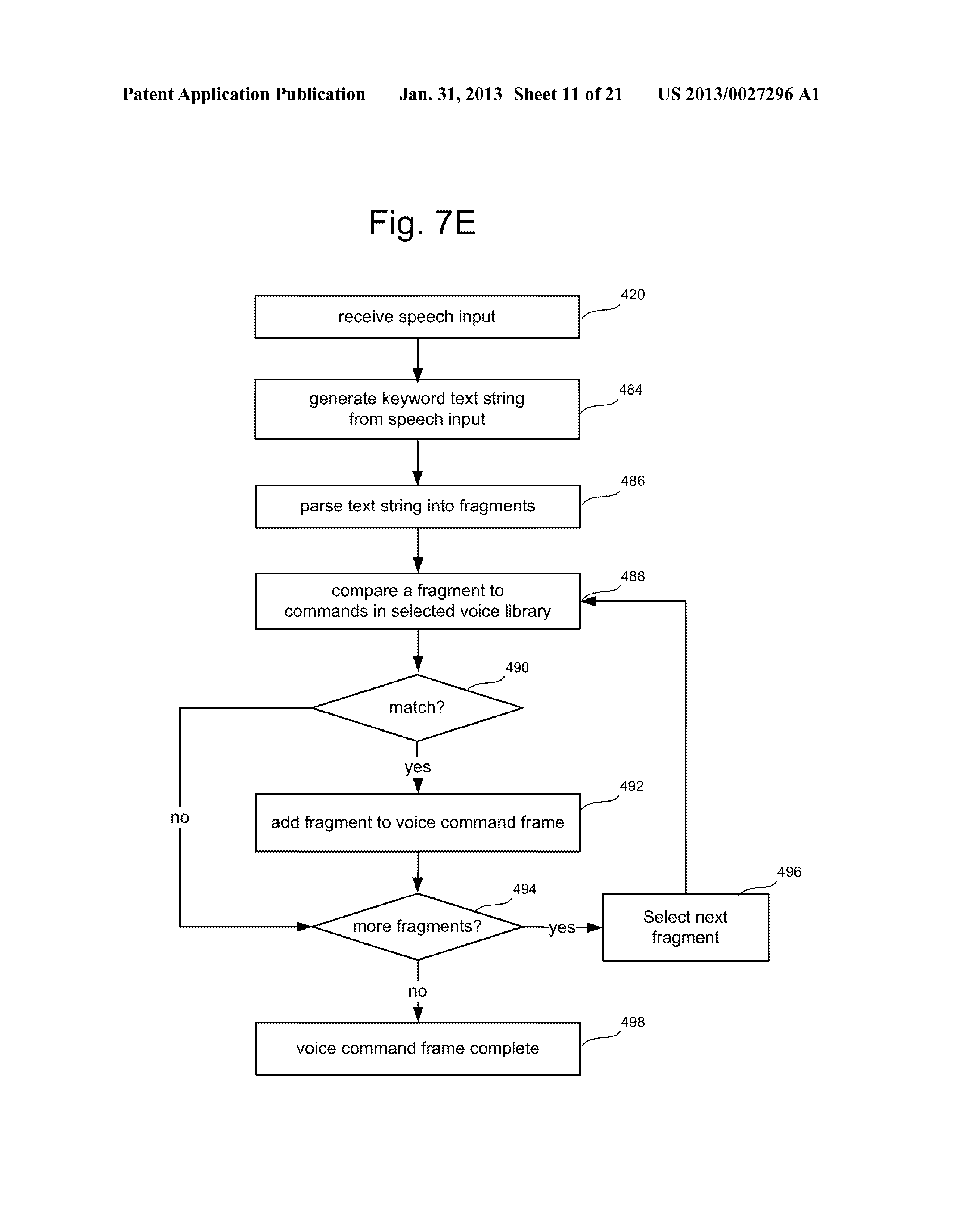

[0018] FIG. 7E is a flow chart describing additional details for recognizing voice commands in the process shown in FIG. 7A.

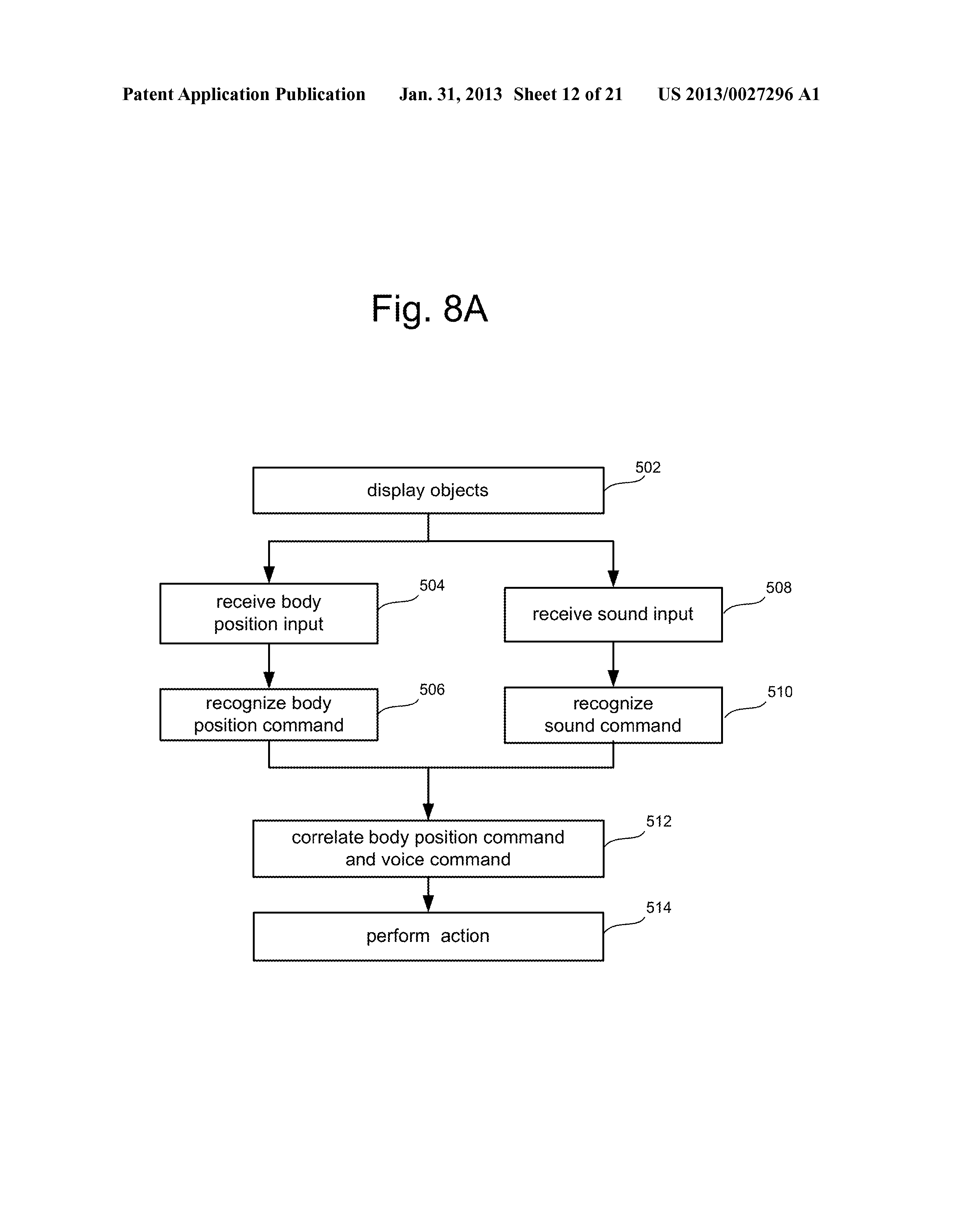

[0019] FIG. 8A is a flow chart describing an alternative embodiment of a process for user interaction with a computing system using hand gestures and voice commands.

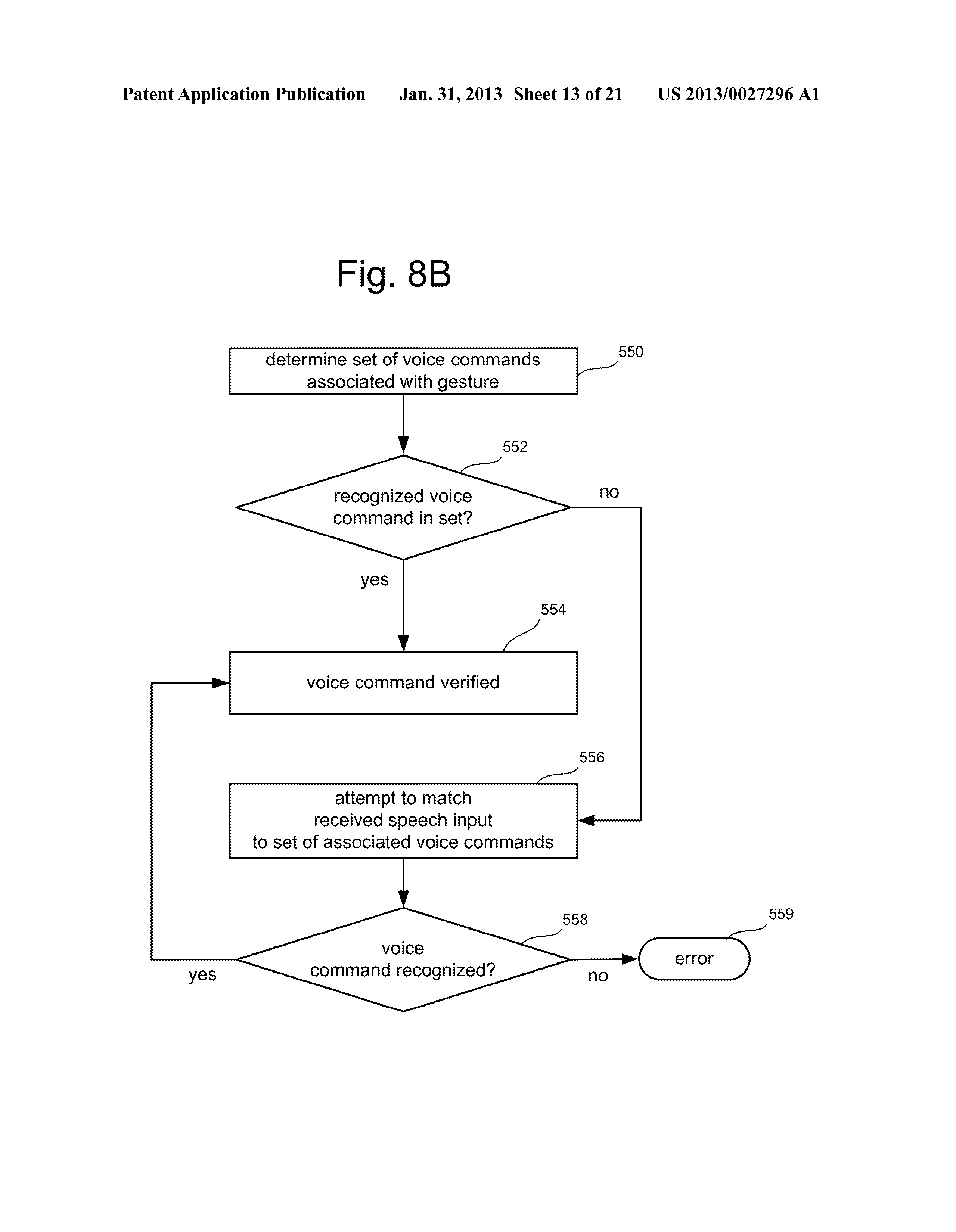

[0020] FIG. 8B is a flow chart describing one option for correlating a gesture with a voice command in accord with FIG. 8A.

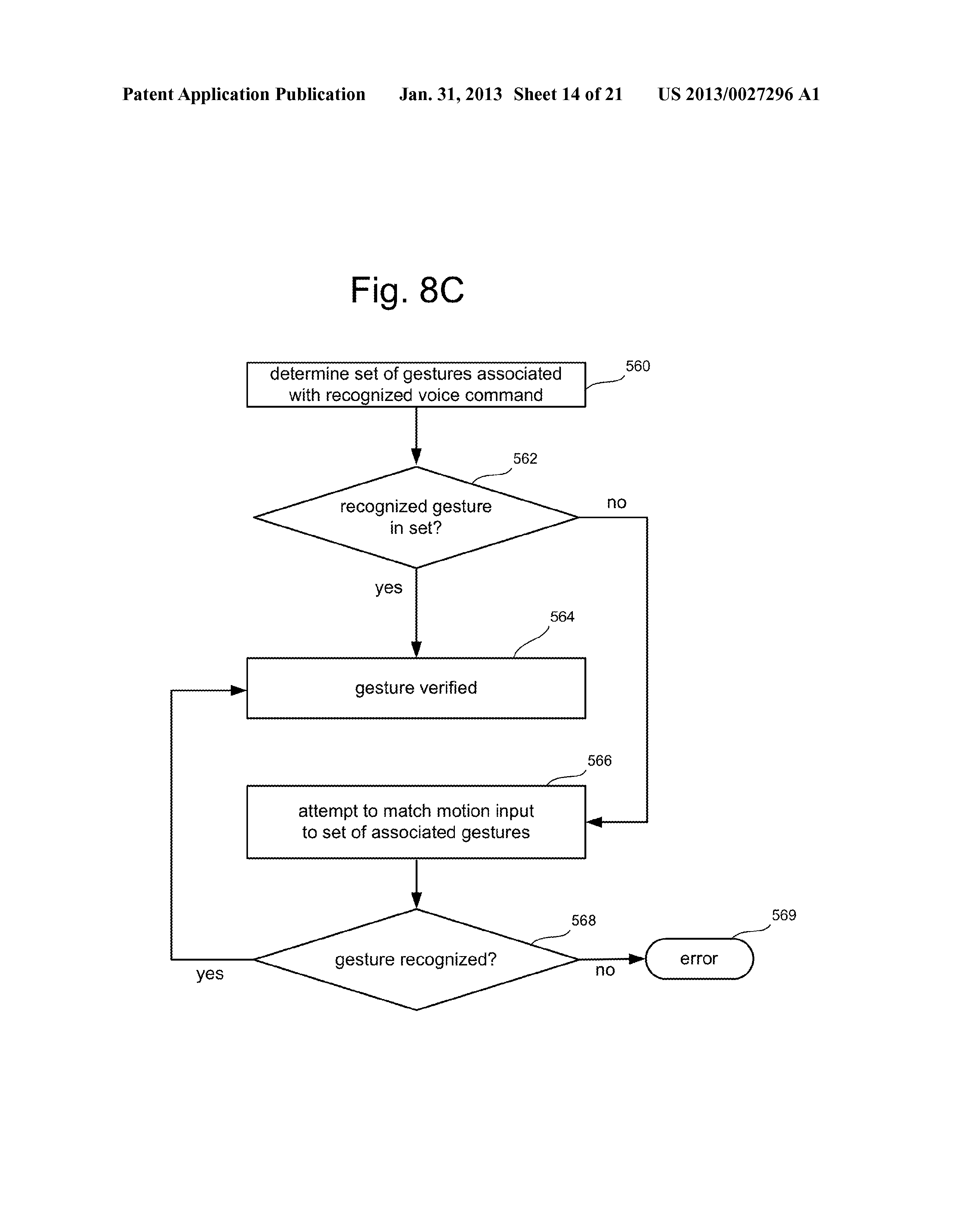

[0021] FIG. 8C is a flow chart describing another option for correlating a gesture with a voice command in accord with FIG. 8A.

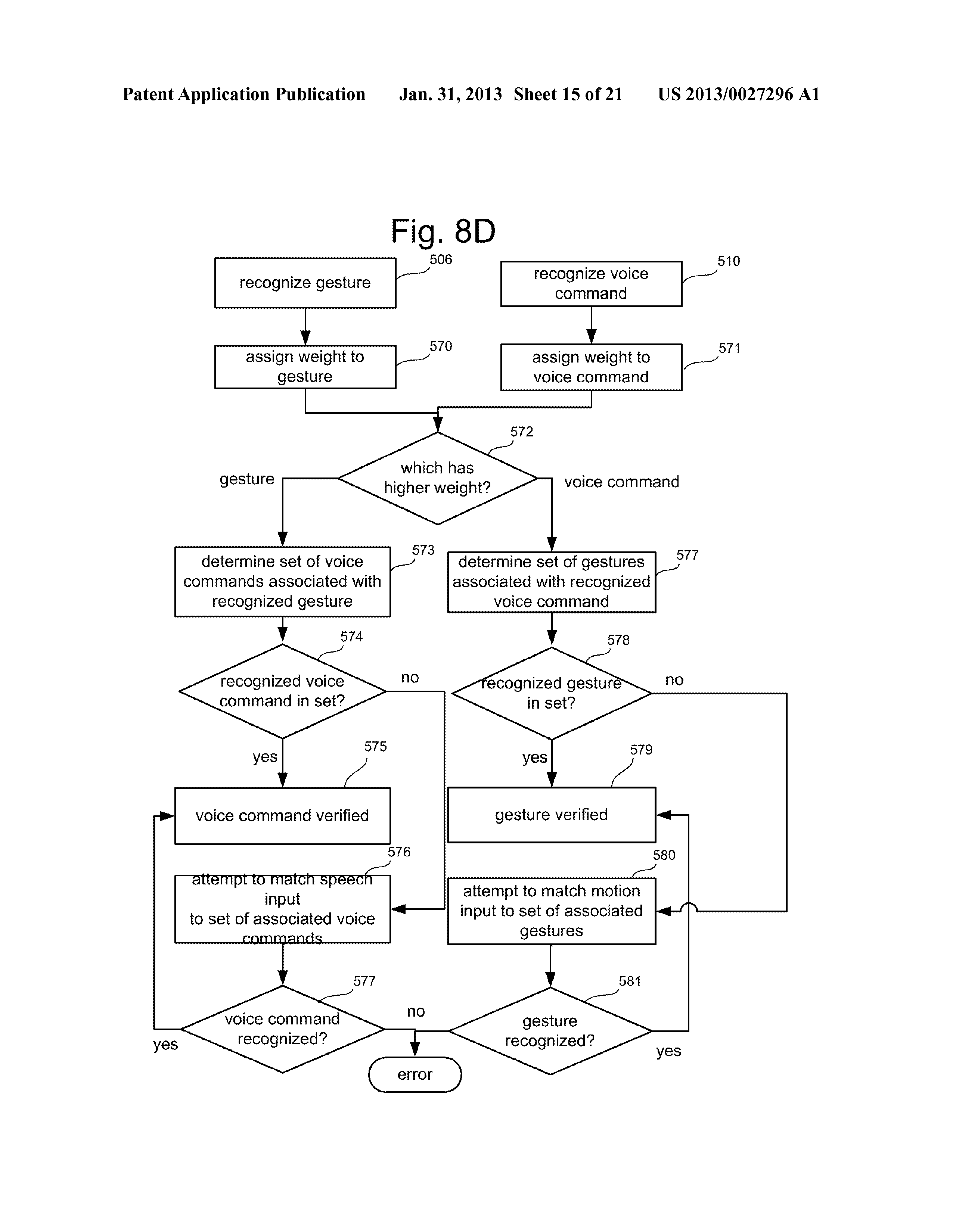

[0022] FIG. 8D is a flow chart describing another option for correlating a gesture with a voice command in accord with FIG. 8A.

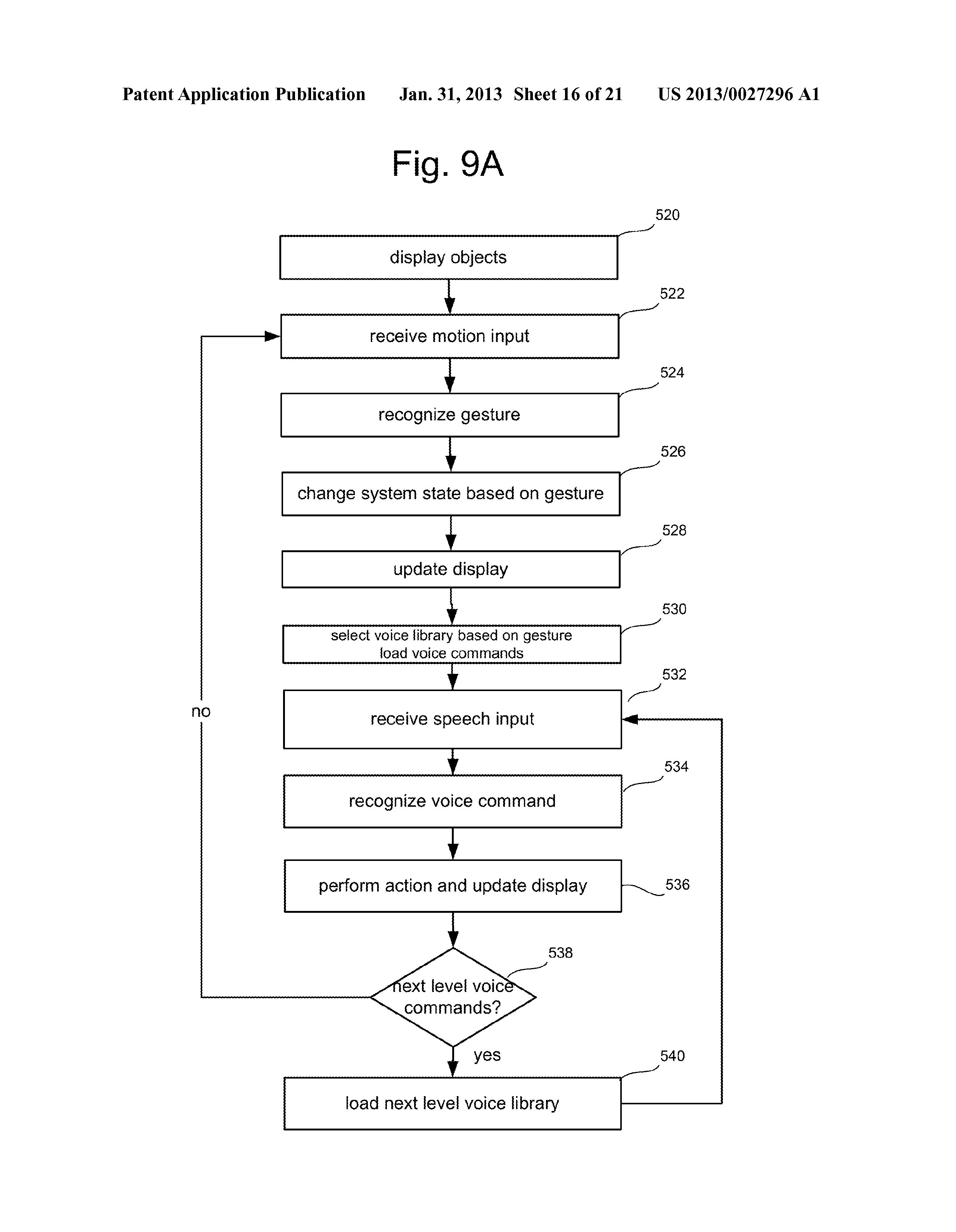

[0023] FIG. 9A is a flow chart describing an alternative embodiment of a process for user interaction with a computing system using hand gestures and voice commands.

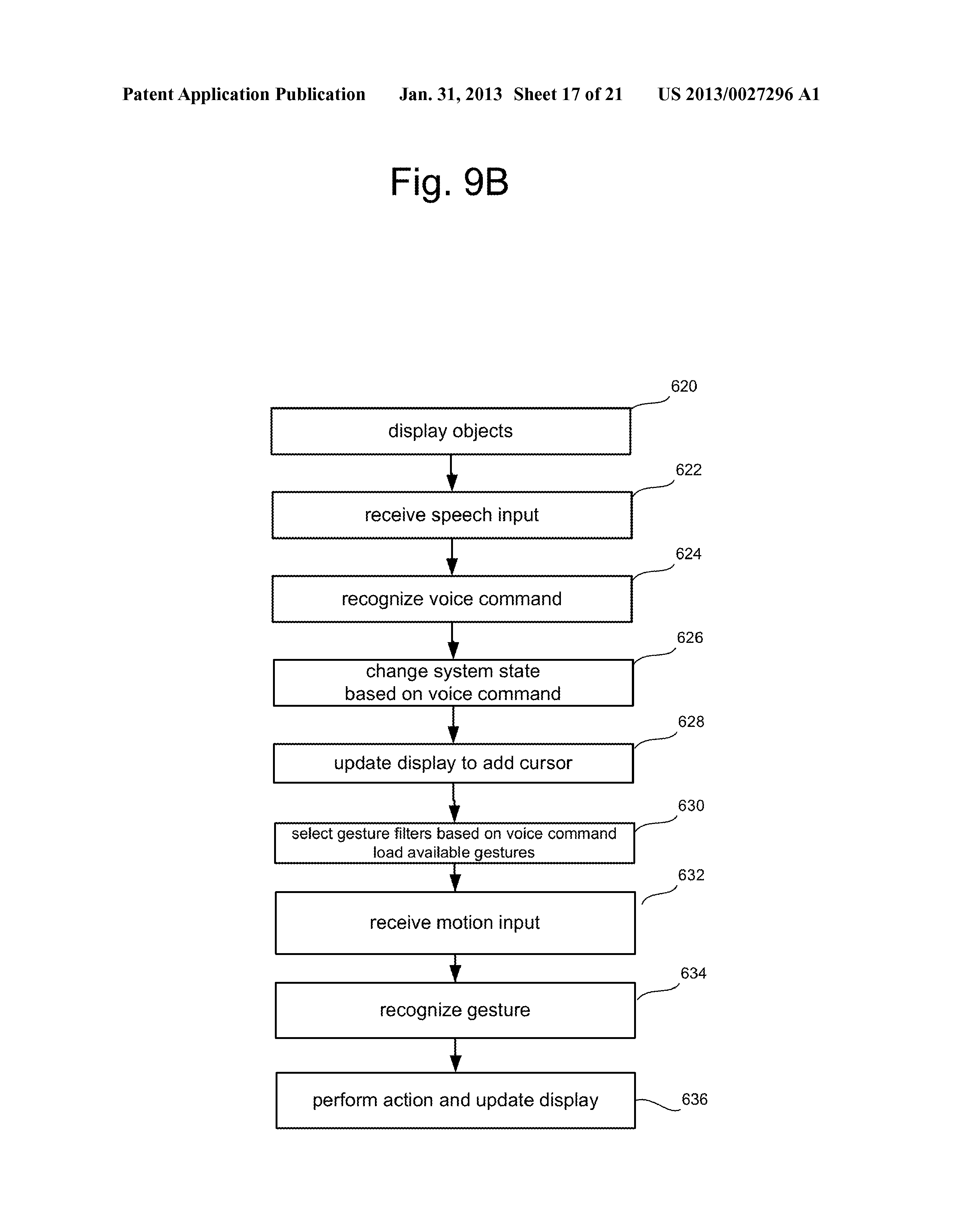

[0024] FIG. 9B is a flow chart describing an alternative embodiment of a process for user interaction with a computing system using hand gestures and voice commands.

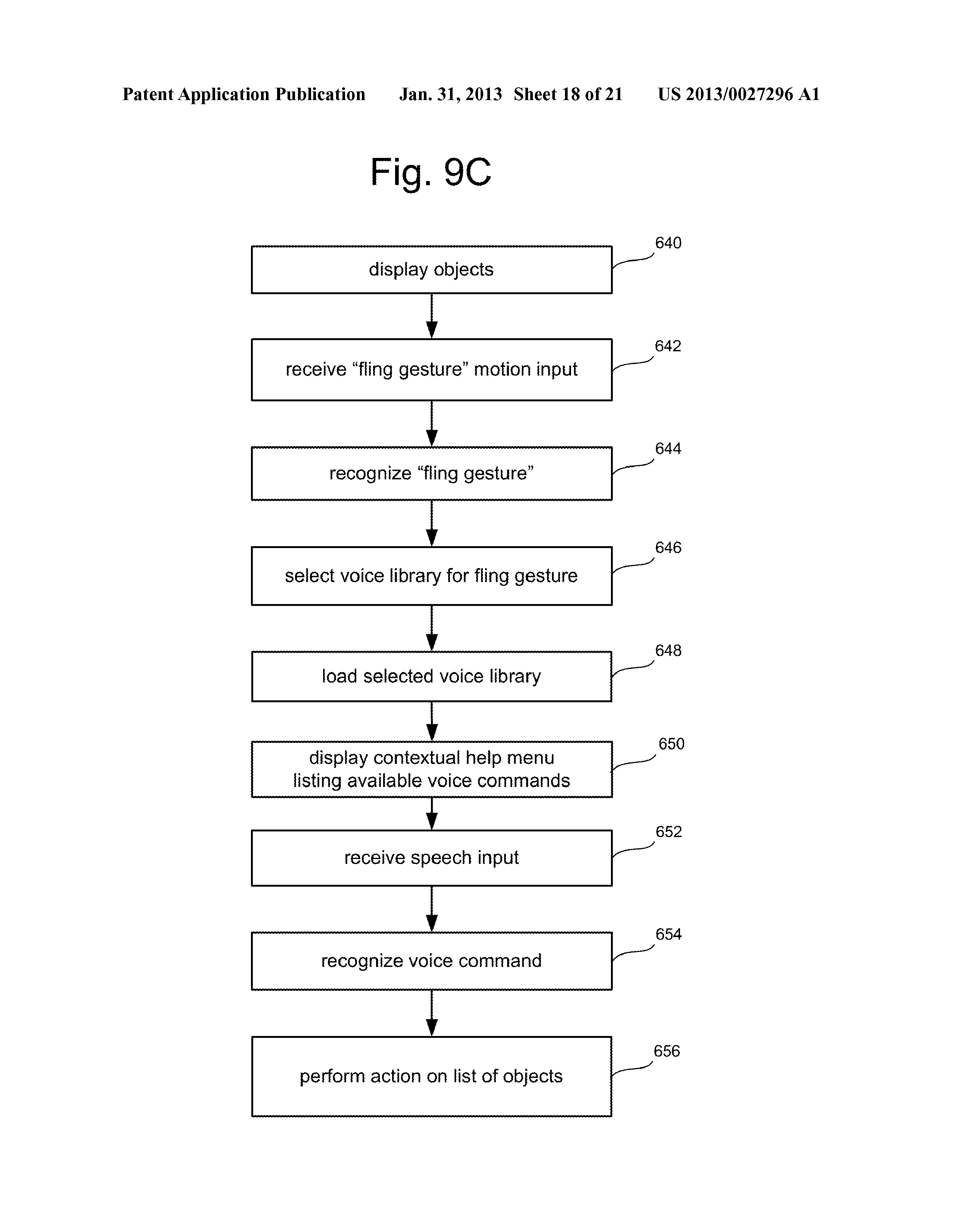

[0025] FIG. 9C is a flow chart describing one embodiment of a process for user interaction with a computing system using a specific hand gesture and contextual voice commands.

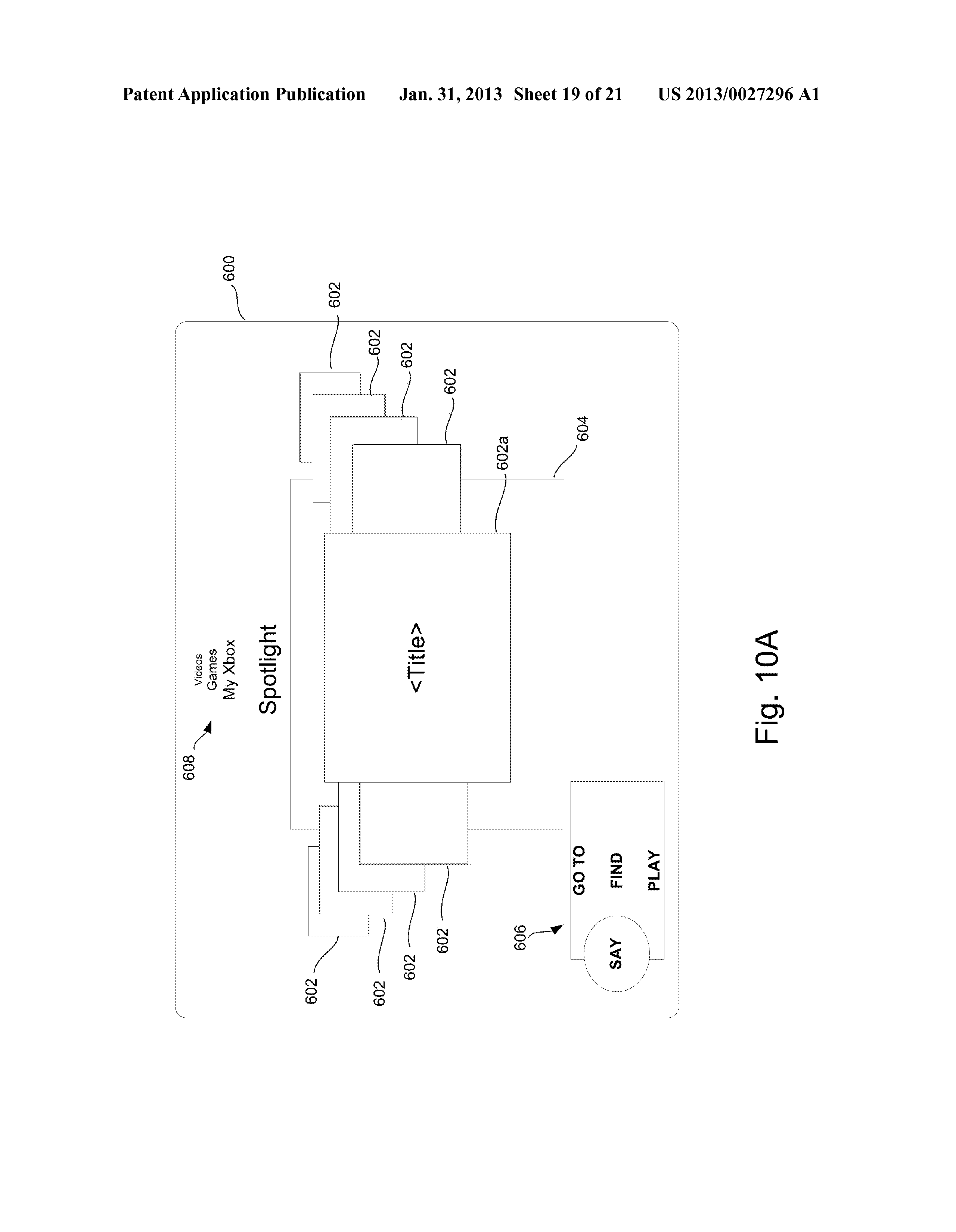

[0026] FIG. 10A is an illustration of a first level user interface implementing the flow chart of FIG. 7A.

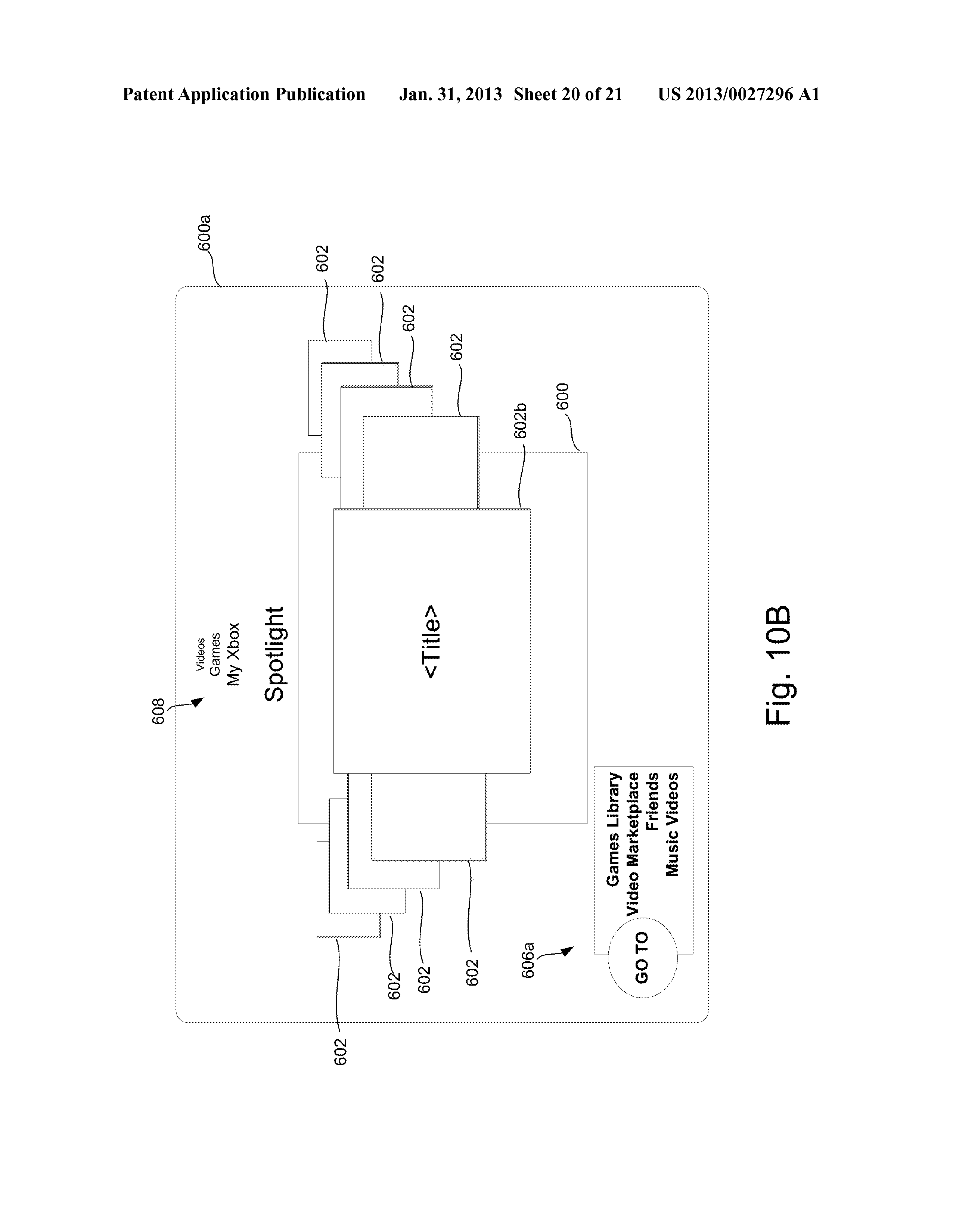

[0027] FIG. 10B is an illustration of a second level user interface implementing the flow chart of FIG. 7B.

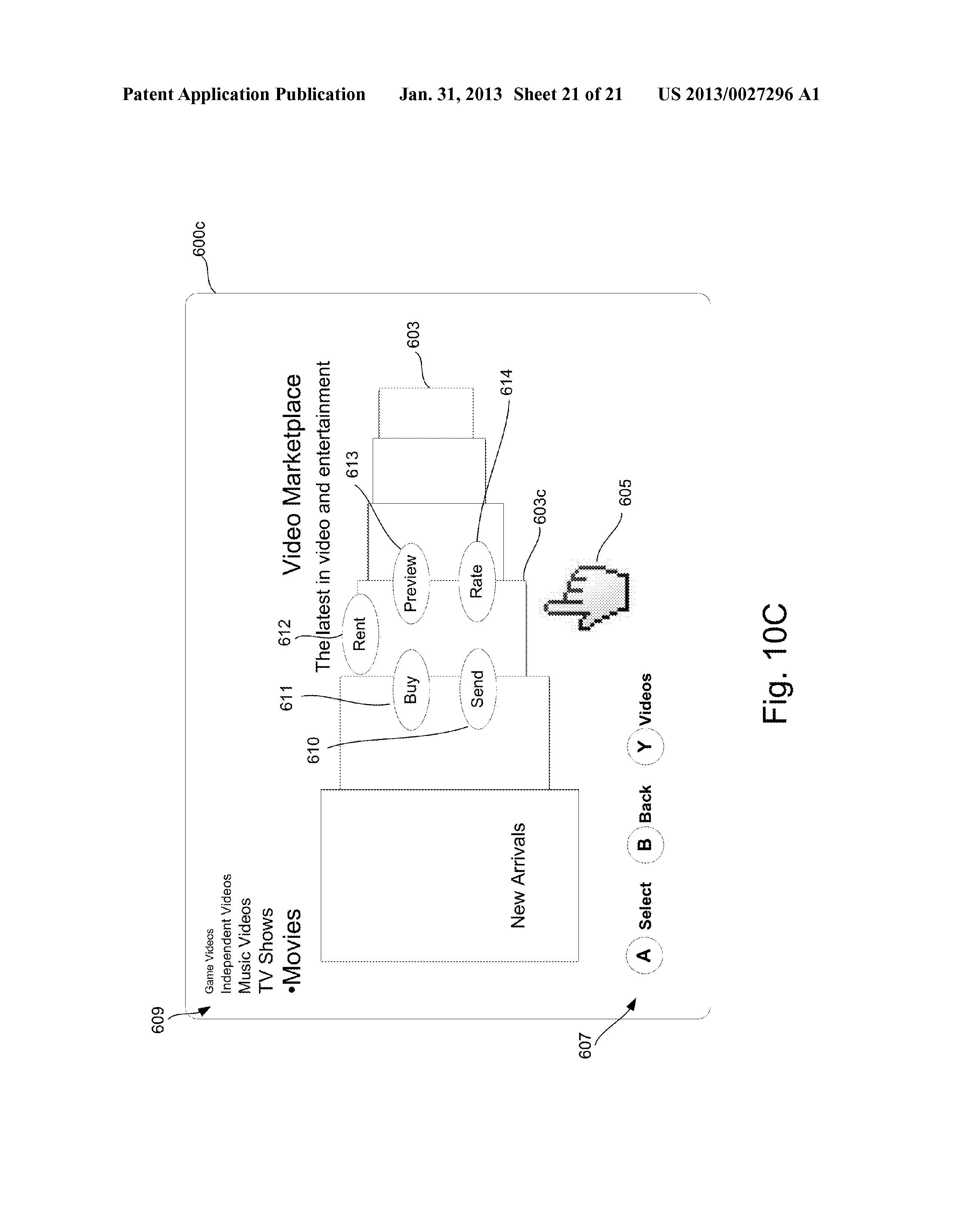

[0028] FIG. 10C is an illustration of a third level user interface.
