http://www.eurogamer.net/articles/digitalfoundry-2016-amd-reveals-polaris-next-gen-graphics-hardware

https://youtu.be/5g3eQejGJ_A

http://videocardz.com/58031/amd-announces-polaris-architecture-gcn-4-0

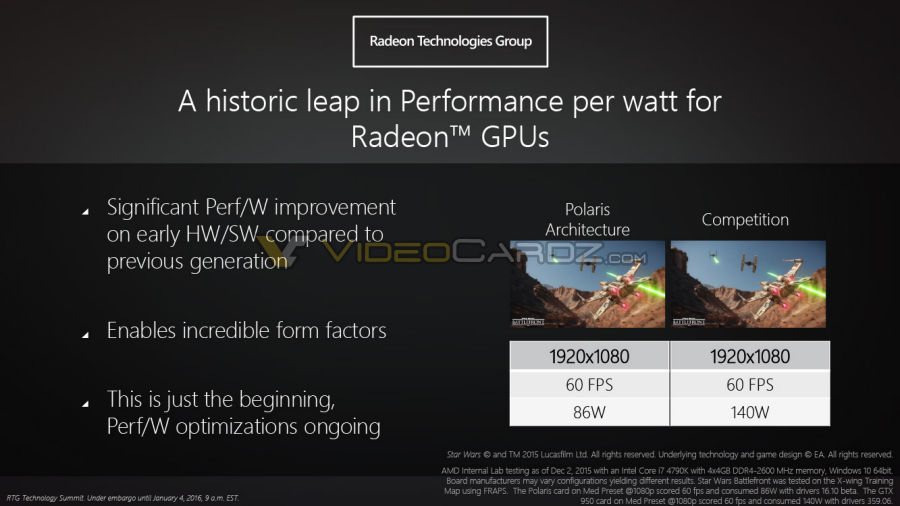

AMD today revealed its new fourth-generation GCN architecture, dubbed Polaris, offering a substantial leap in performance per watt. Comparing an unannounced GPU product against Nvidia's GTX 950 running Star Wars Battlefront at medium settings at 1080p60, AMD says that its new architecture requires 84W vs Nvidia's 140W, which works out to around 60 per cent of the power, or a reduction of roughly 40 per cent.
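A quick sketch of the arithmetic behind AMD's 84W vs 140W comparison. It assumes, as the 1080p60 setting implies, that both systems hold the same 60fps, so the power ratio doubles as a performance-per-watt ratio; the variable names are purely illustrative.

```python
# Power figures quoted for the Star Wars Battlefront demo (1080p, medium, 60fps).
polaris_watts = 84
gtx950_watts = 140

# Share of the GTX 950 figure that the Polaris system draws.
ratio = polaris_watts / gtx950_watts
# Fractional saving relative to the GTX 950 figure.
reduction = 1 - ratio
# Assumption: both systems deliver the same 60fps, so perf-per-watt scales
# inversely with power draw.
efficiency_gain = gtx950_watts / polaris_watts

print(f"Polaris draws {ratio:.0%} of the GTX 950 figure")    # 60%
print(f"Power reduction: {reduction:.0%}")                    # 40%
print(f"Perf per watt at locked 60fps: {efficiency_gain:.2f}x")  # 1.67x
```

Put another way, 84W is 60 per cent of 140W, so the saving is 40 per cent rather than 61 per cent.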

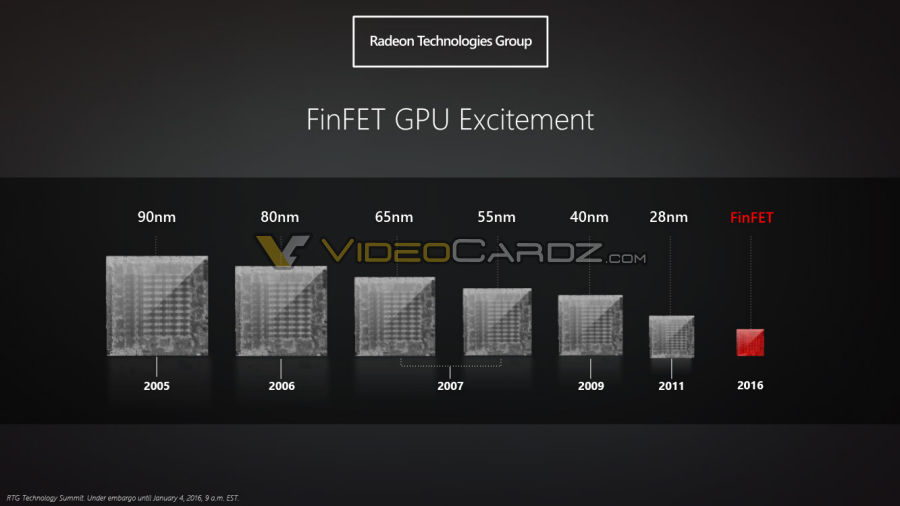

Since 2011, both companies have used 28nm production. The 'nm' stands for nanometres (billionths of a metre), although modern node names work more as a generational label than as a literal measurement of any single transistor feature. While the process has been refined since then, it's fair to say that both companies had hit a brick wall in getting more out of the existing technology. This year, moving to 14nm and 16nm 'FinFET' processes is finally possible, with the planar transistors of the old process giving way to smaller, '3D' fin-shaped transistors in the new production technology.

So what does that mean for gamers? The bottom line is simple: GPUs with performance comparable to the current generation can be smaller and more power efficient, as AMD is attempting to demonstrate with its Polaris vs GTX 950 Battlefront comparison. More to the point, it also means that larger chips with the same power consumption as existing GPUs can pack in far more transistors, and therefore much more processing power.

We were sent this information well ahead of time, and AMD isn't giving anything specific away with regard to its plans for Polaris in terms of actual Radeon products, but more details may emerge as the Consumer Electronics Show (CES) progresses in Las Vegas. We've embedded the complete presentation AMD supplied below:

https://www.youtube.com/watch?v=5g3eQejGJ_A

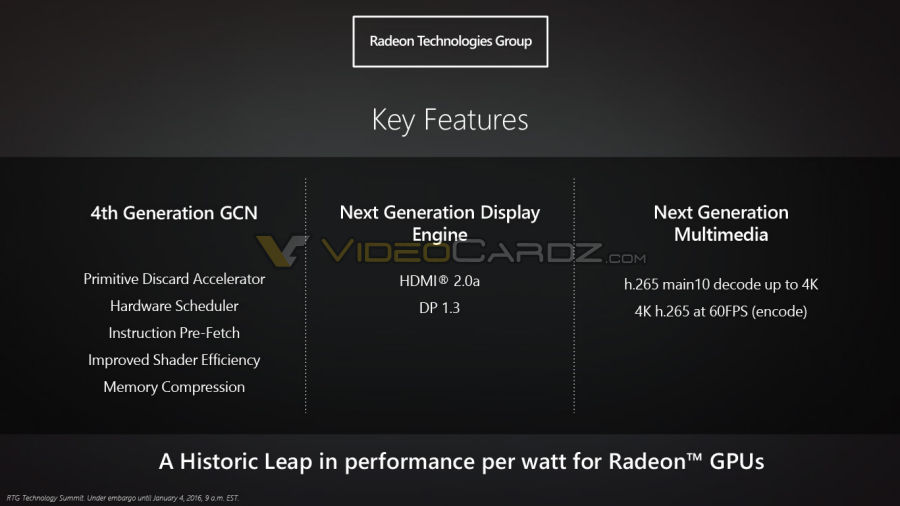

So what do we know? The fourth generation of the GCN architecture features extensive improvements, including a primitive discard accelerator, hardware scheduler, instruction pre-fetch, improved shader efficiency and better memory compression. AMD catches up with Nvidia with support for HDMI 2.0a, plus there's DisplayPort 1.3 compatibility. Media functions are bolstered with support for the HEVC codec: we're promised real-time onboard encoding at 4K60. We expect decode to match that spec too, as the Fury products already support it.
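To put 'real-time 4K60' in perspective, here is a rough sketch of the raw pixel throughput an encoder must keep up with. It assumes '4K' means the 3840x2160 UHD resolution used by consumer displays (AMD's material doesn't specify), and it counts only raw pixels, not the actual HEVC encoding workload.

```python
# Assumption: "4K" = 3840x2160 UHD; 60 frames per second in real time.
width, height, fps = 3840, 2160, 60

pixels_per_frame = width * height            # 8,294,400
pixels_per_second = pixels_per_frame * fps   # 497,664,000
frame_budget_ms = 1000 / fps                 # time available per frame

# Compare with the 1080p60 target used in the Battlefront demo.
ratio_vs_1080p60 = pixels_per_second / (1920 * 1080 * fps)

print(f"{pixels_per_frame:,} pixels per frame")
print(f"{pixels_per_second:,} pixels per second")
print(f"{frame_budget_ms:.2f} ms per frame")     # 16.67 ms
print(f"{ratio_vs_1080p60:.1f}x the pixels of 1080p60")  # 4.0x
```

In other words, a 4K60 encoder has about 16.7ms per frame to process four times as many pixels as a 1080p60 stream.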

But it's the massively improved power efficiency that is compelling here: AMD reckons that Polaris is going to mean big things for gaming notebooks, small form factor desktops and full-on graphics cards with less onerous power requirements (and fewer power connectors). AMD has specifically targeted 'console calibre' performance for 'thin and light' laptops, which is great news bearing in mind that PS4 and Xbox One define the baseline for most modern triple-A titles. The ability to have that kind of performance in smaller form factor notebooks can only be a good thing.

Polaris GPUs are planned for release in Q2 this year, meaning that the earliest we'll see them is April. We expect Nvidia's rival tech, codenamed Pascal, to arrive in the same time window. We're particularly interested in seeing the higher-end products from both firms, which should be paired with HBM memory technology, but the recent reveal of higher-bandwidth GDDR5X also gives AMD and Nvidia new VRAM options for their next-gen graphics technology. On top of that, we should see more games using DirectX 12, whose lower CPU overhead should bring higher levels of performance: exactly what we need with high-end virtual reality arriving in the same timeframe.

http://videocardz.com/58031/amd-announces-polaris-architecture-gcn-4-0

AMD Polaris vs NVIDIA Pascal

Polaris is the new codename for AMD's 14nm FinFET architecture, which will be introduced with new graphics cards later this year. From what I've heard, AMD is planning to launch the first Radeon 400-series cards in the summer. Even though the launch is still months away, the company decided to share more details about its future portfolio to tease gamers (and probably investors).

To give you some perspective, this is how Polaris follows AMD's 28nm architectures:

2011 — 28nm GCN 1.0 — Tahiti / Cape Verde

2013 — 28nm GCN 2.0 — Hawaii / Bonaire

2015 — 28nm GCN 3.0 — Fiji / Tonga

2016 — 14nm FinFET Polaris (GCN 4.0)

Over the last 10 years, fabrication processes have shrunk significantly (in 2005 it was 90nm). A smaller node generally means higher power efficiency, and therefore more performance for the same power, or the same performance at lower power consumption. Unfortunately the slides are not very detailed; in fact, they don't include any numbers, so I can't share more details as of yet.

The GPU design was modified to include new logical blocks: a new Command Processor, Geometry Processor, Multimedia Cores and Display Engine, plus an upgraded L2 Cache and Memory Controller.

Last but not least, the new architecture uses 4th-generation Graphics Core Next Compute Units (aka GCN 4.0).

AMD Polaris will compete against NVIDIA's Pascal architecture. Both are believed to use High Bandwidth Memory 2 (HBM2).