moniker

Both the fixed foveated rendering and multi-GPU performance increases seem kind of disappointing?

Lots of cool stuff there as well:

"Great Management of Technical Leads" (Mike Acton / Insomniac Games)

Optimizing the Graphics Pipeline with Compute (Graham Wihlidal / DICE)

Object Space Lighting: Following Film Rendering Two Decades Later in Real Time (Dan Baker / Oxide Games)

All wonderful presentations. Oxide Games' renderer is pretty darn unique.

Rather disappointing gains from async compute again (2% PS4, 5% XBO, 12% Fury X, if I'm not mistaken). On the other hand, the gains from compute-based culling are extreme. GCN's geometry pipeline needs to improve.

They're definitely unique in shading everything into a 4Kx4K buffer even when the user is running at 1080p. 512-bit GDDR5 and HBM cards will probably shine there 8)
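To put the 4Kx4K figure in perspective, here is a quick back-of-the-envelope sketch. It assumes the whole object-space buffer is shaded every frame and uses a hypothetical 8 bytes per texel (e.g. an RGBA16F format); Oxide's actual formats and update rates may well differ.

```python
# Back-of-the-envelope comparison of an object-space shading buffer vs. screen pixels.
# Assumptions (hypothetical): the full buffer is shaded every frame, 8 bytes per texel.


def texel_ratio(buf_w: int, buf_h: int, screen_w: int, screen_h: int) -> float:
    """How many shading texels exist per screen pixel."""
    return (buf_w * buf_h) / (screen_w * screen_h)


def buffer_mib(buf_w: int, buf_h: int, bytes_per_texel: int) -> float:
    """Size of one full write of the shading buffer, in MiB."""
    return buf_w * buf_h * bytes_per_texel / (1024 ** 2)


if __name__ == "__main__":
    ratio = texel_ratio(4096, 4096, 1920, 1080)
    size = buffer_mib(4096, 4096, 8)
    print(f"{ratio:.2f} texels per 1080p pixel, {size:.0f} MiB per full buffer write")
```

At roughly 8 texels per 1080p pixel, memory traffic becomes a plausible limiter, which is the intuition behind expecting wide-bus cards to do well here.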

30 to 35% increase by adding a 2nd GPU, or am I interpreting it wrong?

Looks like it. I had hoped that VR would benefit more from multi-GPU setups. 35% is disappointing.
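One way to read the 35% figure: with two GPUs the ideal is 2x, so 1.35x is roughly two-thirds scaling efficiency. The sketch below does that arithmetic; the 90 Hz target (the refresh rate of the current Rift and Vive headsets) and the use of Amdahl's law as a toy model are illustrative assumptions, not numbers from the talk.

```python
# Rough multi-GPU scaling arithmetic for the reported ~35% gain.
# Assumptions (hypothetical): 2 GPUs, 90 Hz target, Amdahl's law as a toy model.


def scaling_efficiency(speedup: float, gpus: int) -> float:
    """Fraction of ideal linear scaling actually achieved."""
    return speedup / gpus


def required_single_gpu_fps(target_fps: float, speedup: float) -> float:
    """Frame rate one GPU must already reach for the multi-GPU setup to hit target_fps."""
    return target_fps / speedup


def amdahl_parallel_fraction(speedup: float, gpus: int) -> float:
    """Invert Amdahl's law S = 1 / ((1 - p) + p / n) to get the parallel fraction p."""
    return (1.0 - 1.0 / speedup) / (1.0 - 1.0 / gpus)


if __name__ == "__main__":
    s = 1.35
    print(f"efficiency: {scaling_efficiency(s, 2):.1%}")
    print(f"single-GPU fps needed for 90 Hz: {required_single_gpu_fps(90, s):.1f}")
    print(f"implied parallel fraction: {amdahl_parallel_fraction(s, 2):.2f}")
```

Under this toy model, a 1.35x gain implies only about half of the frame's work is effectively split across the two GPUs.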

It's just one use case for async compute. It's probably much easier to saturate the smaller number of CUs on the consoles, as opposed to the massive arrays on higher-end PC parts.
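To make the "fewer CUs are easier to saturate" point concrete, the sketch below computes the upper bound on resident threads per GPU, using GCN's limits of 4 SIMDs per CU, up to 10 wavefronts per SIMD and 64 threads per wavefront, with the commonly cited CU counts (PS4 18, XBO 12, Fury X 64). Real occupancy is usually lower because of register and LDS usage.

```python
# Upper bound on concurrently resident threads on GCN GPUs.
SIMDS_PER_CU = 4
MAX_WAVES_PER_SIMD = 10
THREADS_PER_WAVE = 64

CU_COUNTS = {"PS4": 18, "XBO": 12, "Fury X": 64}


def max_threads_in_flight(cus: int) -> int:
    """Threads needed to fill every wavefront slot on `cus` compute units."""
    return cus * SIMDS_PER_CU * MAX_WAVES_PER_SIMD * THREADS_PER_WAVE


if __name__ == "__main__":
    for name, cus in CU_COUNTS.items():
        print(f"{name}: {max_threads_in_flight(cus)} threads")
```

The gap (163,840 slots on Fury X vs. 46,080 on PS4) is one way to see why graphics work alone may leave more slots idle on the big PC part, and thus more room for async compute to help there.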

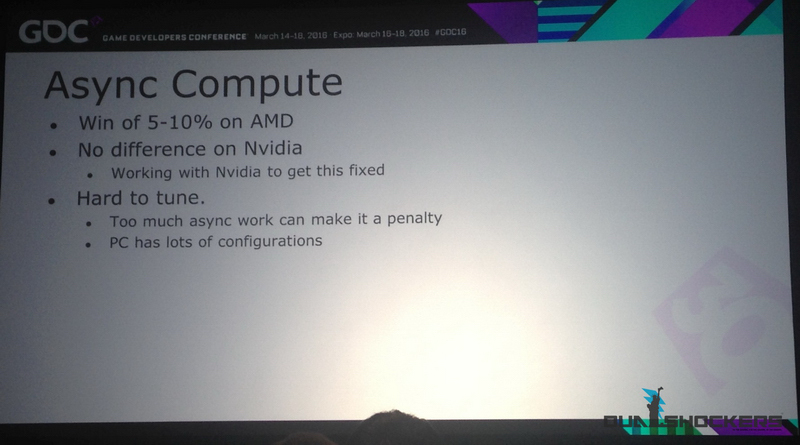

Yeah, well, it's one case, but it's not the first one that gives that ~+10% performance result on PC GCN cards.

It's also highly questionable whether such compute culling optimizations will actually help anything but GCN; it's pretty clear that consoles are the main target of these compute tricks, which stem from the issues with the GCN geometry pipeline. I also kind of wonder whether the primitive discard unit shown for GCN4 will do something like this in hardware for Polaris.
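For readers unfamiliar with compute-based culling, the core per-triangle tests are simple. The sketch below shows three commonly used ones (zero-area, backface and small-primitive culling) on screen-space vertices. It is a generic illustration, not the code from Wihlidal's talk, and it assumes counter-clockwise front faces, pixel centers at half-integer coordinates, and no top-left fill rule.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]  # screen-space position in pixels


def signed_area_x2(a: Vec2, b: Vec2, c: Vec2) -> float:
    """Twice the signed triangle area; positive for counter-clockwise winding."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (c[0] - a[0]) * (b[1] - a[1])


def _covers_pixel_center(lo: float, hi: float) -> bool:
    """True if the interval [lo, hi] contains some pixel center k + 0.5."""
    return math.ceil(lo - 0.5) + 0.5 <= hi


def should_cull(a: Vec2, b: Vec2, c: Vec2) -> bool:
    """Conservative cull test: True only if the triangle can't produce visible samples."""
    if signed_area_x2(a, b, c) <= 0.0:  # degenerate (zero area) or clockwise, i.e. back-facing
        return True
    xs, ys = (a[0], b[0], c[0]), (a[1], b[1], c[1])
    # Small-primitive test: if the bounding box contains no pixel center on
    # either axis, the triangle cannot cover any pixel center at all.
    return not (_covers_pixel_center(min(xs), max(xs))
                and _covers_pixel_center(min(ys), max(ys)))
```

In a real implementation these tests run in a compute shader over index buffers and write a compacted index list, so the fixed-function geometry pipeline only sees surviving triangles.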

Most development optimizations are going to target AMD going forward; it's going to be an uphill battle for NVIDIA. 10 to 15% here and there is nothing to scoff at, especially considering it's extra performance at no cost to the user. Good developers have pretty much solved GCN's geometry weakness. It's not even an issue outside of NVIDIA's over-tessellated GameWorks libraries.

What I do find interesting is that the XBO and PS4 times are so close together, even though on XBO this data comes from the rather slow DDR3 RAM.
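For context on "rather slow DDR3", peak theoretical bandwidth is just transfer rate times bus width in bytes. The sketch uses the commonly cited figures (XBO: DDR3-2133 on a 256-bit bus; PS4: GDDR5 at 5500 MT/s on a 256-bit bus) and ignores the XBO's 32 MB ESRAM, which XBO titles typically use to keep bandwidth-heavy render targets off DDR3.

```python
# Peak theoretical memory bandwidth = transfers per second x bytes per transfer.


def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s (decimal) for a data rate in MT/s and a bus width in bits."""
    return transfer_rate_mts * (bus_width_bits / 8) / 1000


if __name__ == "__main__":
    xbo_ddr3 = peak_bandwidth_gbs(2133, 256)
    ps4_gddr5 = peak_bandwidth_gbs(5500, 256)
    print(f"XBO DDR3: {xbo_ddr3:.1f} GB/s, PS4 GDDR5: {ps4_gddr5:.1f} GB/s, "
          f"ratio {ps4_gddr5 / xbo_ddr3:.2f}x")
```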

Global Illumination in 'Tom Clancy's The Division'

Nikolay Stefanov | Technical Lead, Ubisoft Massive

Location: Room 2016, West Hall

Date: Friday, March 18

Time: 1:30pm - 2:30pm

Format: Session

Track: Programming, Visual Arts

Pass Type: All Access Pass, Main Conference Pass

The session will describe the dynamic global illumination system that Ubisoft Massive created for "Tom Clancy's The Division". Our implementation is based on radiance transfer probes and allows real-time bounce lighting from completely dynamic light sources, both on consoles and PC. During production, the system gives our lighting artists instant feedback and makes quick iterations possible.

The talk will cover in-depth technical details of the system and how it integrates into our physically-based rendering pipeline. A number of solutions to common problems will be presented, such as how to handle probe bleeding in indoor areas. The session will also discuss performance and memory optimization for consoles.

Takeaway

Attendees will gain an understanding of the rendering techniques behind precomputed radiance transfer. We will also share what production issues we encountered and how we solved them - for example, moving the offline calculations to the GPU and managing the precomputed data size.

Intended Audience

The session is aimed at intermediate to advanced graphics programmers and tech artists. It will also be of interest to lighting artists who are interested in improving their workflow. Knowledge of key rendering techniques such as deferred shading and 3D volume mapping will be required.
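The abstract doesn't spell out the data layout, but the general idea behind precomputed transfer with dynamic lights can be sketched: the expensive light-transport weights are baked offline, and only a cheap relight-and-gather runs each frame. In the sketch below, the surfel representation, the single directional light, the lack of shadowing and the grayscale radiance are all simplifying assumptions; this is not Massive's implementation.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Surfel:
    """A small surface patch that can bounce light (hypothetical representation)."""
    normal: Vec3   # unit normal
    albedo: float  # grayscale diffuse reflectance


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def relight_surfels(surfels: Sequence[Surfel], to_light: Vec3, intensity: float) -> List[float]:
    """Runtime step 1: direct diffuse lighting per surfel (no shadowing in this sketch)."""
    return [s.albedo * max(0.0, dot(s.normal, to_light)) * intensity for s in surfels]


def gather_probe(transfer: Sequence[float], surfel_radiance: Sequence[float]) -> float:
    """Runtime step 2: bounce light at a probe = baked weights dotted with surfel radiance."""
    return sum(w * r for w, r in zip(transfer, surfel_radiance))


if __name__ == "__main__":
    surfels = [Surfel((0.0, 0.0, 1.0), 0.5), Surfel((1.0, 0.0, 0.0), 1.0)]
    probe_weights = [0.25, 0.75]  # hypothetical values that would be baked offline
    for light_dir in [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)]:
        radiance = relight_surfels(surfels, light_dir, intensity=2.0)
        print(light_dir, gather_probe(probe_weights, radiance))
```

Because the gather is linear in surfel radiance, moving or recoloring a light only reruns the two cheap runtime steps while the baked weights stay untouched, which is what makes fully dynamic light sources affordable in this kind of scheme.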

Console engines are obviously optimized for low memory bandwidth. An engine optimized for the XBO's DDR3 limitations won't benefit from the PS4's 2x bandwidth, as it simply won't need it. This is where the lowest common denominator kicks in, and where PS4-exclusive titles have an opportunity to shine by comparison.
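The "won't benefit from extra bandwidth" argument can be illustrated with the simple roofline model: attainable throughput is the lower of peak ALU throughput and bandwidth times arithmetic intensity (FLOPs per byte fetched). The sketch uses commonly cited figures (XBO: 12 CUs at 853 MHz; PS4: 18 CUs at 800 MHz; 128 FP32 FLOPs per CU per clock) with the DDR3/GDDR5 peak bandwidths, ignores ESRAM and caches, and picks two hypothetical intensities.

```python
# Simple roofline model: performance is capped by either ALU peak or memory bandwidth.


def peak_gflops(cus: int, clock_mhz: float) -> float:
    """GCN peak FP32: 64 lanes x 2 FLOPs (fused multiply-add) per CU per clock."""
    return cus * 64 * 2 * clock_mhz / 1000


def attainable_gflops(peak: float, bandwidth_gbs: float, intensity: float) -> float:
    """Roofline: min(compute roof, bandwidth roof) for a kernel's FLOPs per byte."""
    return min(peak, bandwidth_gbs * intensity)


XBO = (peak_gflops(12, 853), 68.256)  # (GFLOPS, GB/s), DDR3 only, ESRAM ignored
PS4 = (peak_gflops(18, 800), 176.0)

if __name__ == "__main__":
    for intensity in (5.0, 20.0):  # hypothetical FLOPs per byte
        xbo = attainable_gflops(*XBO, intensity)
        ps4 = attainable_gflops(*PS4, intensity)
        print(f"{intensity:>4} FLOP/B: XBO {xbo:.0f}, PS4 {ps4:.0f} GFLOPS, "
              f"PS4/XBO {ps4 / xbo:.2f}x")
```

Once a workload sits above the XBO's ridge point (about 19 FLOPs per byte in this model), the PS4's extra bandwidth goes unused and only its ~1.4x ALU advantage shows up; at low intensity the full ~2.6x bandwidth ratio would matter instead.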

Interesting.

His opinion on this? Sure.

5-10% again.

You have a different one?

You think PC doesn't suffer from these optimizations? Take a look at how the Fury X is doing against the 980 Ti. Optimizing for low bandwidth is the first thing you should do on console hardware; CUs and everything else come after that or as a result of it, allowing you to load the hardware with math while the memory fetch is running.

"Console engines are optimized for low memory bandwidth obviously."

To me it is not obvious, as they started their slides with the CU specs and not with the memory specs. To me it is

a) not obvious that the engine is optimized for low bandwidth and

b) since that hypothesis is wrong, it doesn't follow that first-party titles would make better use of it.

Especially when you look at what the PC is able to do. Wouldn't the PC version also suffer because of the XBO? Or do you think they make separate console and PC versions?

As shown in their opening slides, the biggest difference is GPU performance, and the results are shown at the end. Concluding that the difference isn't that big because they "gimped" the PS4 version of the engine sounds strange and is not supported by the slides.

That's why I think it is not "obvious".

I have to say I was expecting much bigger gains from async compute, bearing in mind the hubbub about this feature.

Temporal Reprojection Anti-Aliasing in Inside (Lasse J F Pedersen / PLAYDEAD)

This was also great. I like how he went into the sample pattern and even a way to deal with the resolve/dissolve artifact problem.
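Two of the ingredients mentioned are easy to sketch: a low-discrepancy subpixel jitter sequence, and constraining the reprojected history to the current frame's local neighborhood. The sketch uses a Halton(2,3) sequence and a min/max clamp on a scalar value; both are common TAA choices, but the talk's exact sample pattern and history handling may differ, and a real resolve works per color channel.

```python
from typing import List, Sequence, Tuple


def halton(index: int, base: int) -> float:
    """Radical inverse of a 1-based index in the given base, in [0, 1)."""
    result, f, i = 0.0, 1.0, index
    while i > 0:
        f /= base
        result += f * (i % base)
        i //= base
    return result


def jitter_offsets(count: int) -> List[Tuple[float, float]]:
    """Subpixel camera jitter in [-0.5, 0.5) pixels from a Halton(2, 3) sequence."""
    return [(halton(i, 2) - 0.5, halton(i, 3) - 0.5) for i in range(1, count + 1)]


def resolve(current: float, history: float, neighborhood: Sequence[float],
            feedback: float = 0.9) -> float:
    """Clamp stale history to the current frame's neighborhood samples, then blend."""
    clamped = min(max(history, min(neighborhood)), max(neighborhood))
    return feedback * clamped + (1.0 - feedback) * current
```

For example, a history value of 5.0 against a neighborhood spanning 0.1 to 0.4 is pulled back to 0.4 before blending, which suppresses ghosting when the reprojected sample no longer matches the scene.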

I think if you are already pushing the GPU hard in a lot of places to produce a frame, it is hard to get a lot out of it as there is just too much "pressure".

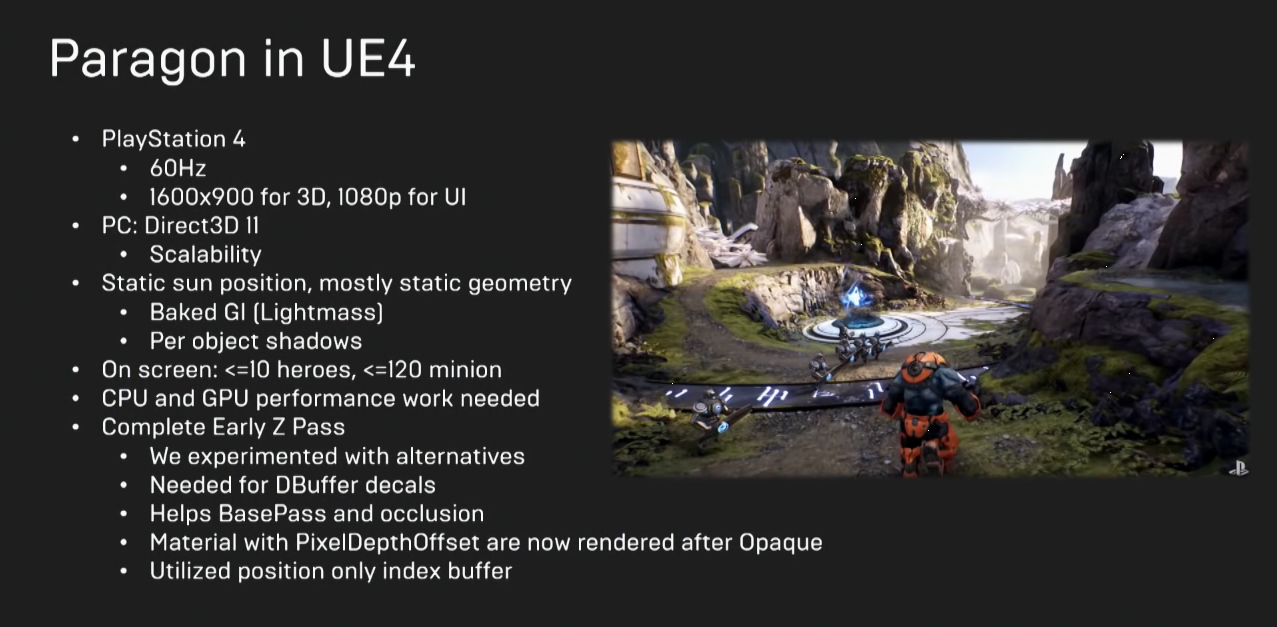

So Paragon is sub-1080p on PS4?

Fixed foveated rendering just renders the center of each eye's view at higher quality and doesn't change depending on where your eyes are actually looking, right?

Do you spend the majority of your time looking at the center of the screen and using your head to look around? If so, that seems like a decent way to reduce the resources required.
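To see why a fixed scheme can still be worthwhile, here is the shading-cost arithmetic for a hypothetical split: a center region covering some share of each eye's image at full resolution, and the periphery rendered at a reduced per-axis scale. The numbers in the example are illustrative, not taken from the talk.

```python
def shaded_fraction(center_area: float, periphery_scale: float) -> float:
    """Fraction of full-resolution pixels still shaded.

    center_area: share of the image rendered at full resolution (0..1).
    periphery_scale: per-axis resolution scale of the rest (0.5 = half width and half height).
    """
    return center_area + (1.0 - center_area) * periphery_scale ** 2


if __name__ == "__main__":
    # Hypothetical: central 50% x 50% window (25% of the area) at full res, periphery at half res.
    f = shaded_fraction(0.25, 0.5)
    print(f"shaded: {f:.1%}, saved: {1 - f:.1%}")
```

With these made-up parameters, more than half of the pixel shading work disappears without any eye tracking.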

Do the higher versions need some more processing time?

Could anyone download the Remedy paper from the OT correctly? After downloading it, I just end up with a broken file with all the slides empty.

Works fine here. Something must be interfering with the download on your connection.
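If a download comes out with empty slides, one quick sanity check is whether the file is an intact archive, since .pptx files are ZIP packages (Office Open XML). The sketch below uses only Python's standard zipfile module; checking for ppt/presentation.xml relies on the conventional part name, which is an assumption rather than a guarantee of the format.

```python
import io
import zipfile
from typing import BinaryIO, Union


def looks_like_valid_pptx(source: Union[str, bytes, BinaryIO]) -> bool:
    """Heuristic integrity check for a downloaded .pptx (path, raw bytes or binary file)."""
    if isinstance(source, (bytes, bytearray)):
        source = io.BytesIO(source)
    if not zipfile.is_zipfile(source):
        return False  # truncated, or not a ZIP at all (e.g. an HTML error page)
    try:
        with zipfile.ZipFile(source) as zf:
            if zf.testzip() is not None:
                return False  # at least one member fails its CRC check
            return "ppt/presentation.xml" in zf.namelist()
    except zipfile.BadZipFile:
        return False
```

Usage: `looks_like_valid_pptx("slides.pptx")`, where the file name is a placeholder. A False result points at the download rather than at the locally installed PowerPoint.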

Thanks. I opened it with PowerPoint Online from OneDrive and it finally opened correctly; for some reason my installed PowerPoint does not like the file.

Regarding the presentation, I was expecting much more information, especially after all the recent news about the game's rendering internals. It's pretty disappointing, as it is just a small DX12 walkthrough.