Why would they not want to reuse GLSL? Actually curious, I can't think of any reason why they'd want to throw it out.

Well yeah, that's why it is the first thing which came to my mind which could be re-used without too many changes from standard OpenGL. Still, they could probably cut out a lot of cruft even there.

glNext GDC session presented by Valve, Frostbite, Unity, Epic, Oxide

- Thread starter ashecitism

ashecitism

Member

Huh. Looks like GDC pulled that event down from their site. Link in the OP takes me to a "Session Not Found" page.

Edit: the talk's been changed to "Presented by Khronos" - http://schedule.gdconf.com/session/glnext-the-future-of-high-performance-graphics-presented-by-khronos

Yeah, I guess that makes sense, haha.

alexandros

Banned

I like Anderson's title: Technical Fellow

How come Swedes are so prominent in graphics programming? Isn't the "main guy" at Naughty Dog Swedish as well?

Historical Amiga roots

ashecitism

Member

Aspyr dev on reddit chimed in

I hope, given the new name, they've broken 100% from OpenGL history and resolved the issues above. However, that means new drivers for every card. The IHVs won't do that, they'll only create drivers for newer cards. That means it'll be OpenGL for old cards and glNext for new and developers will have to decide if the market will bear the extra work required for two APIs.

I think no matter what, I'll be working in OpenGL for the next five years.

ashecitism

Member

lol they changed it back to 'presented by Valve'

make up your mind already, I don't want to replace the links all the time

stan423321

Member

I think any kind of backwards compatibility would go against what this is supposed to be. Maybe you could reuse most of the shader language.

An idea I just had (so not really reflected on the viability of it or anything) of something that would be incredibly interesting IMHO is an open source / free software "Classic OpenGL" layer implemented on top of glNext. Basically, take the huge software complexity and myriad of functions of full legacy OpenGL support and put it into a single, well-tested framework which runs on every low-level glNext driver provided by each vendor.

...wait, that's NOT how they/you implement such stuff?

I mean, GL2=>GL4 was usually just implemented incrementally, but something like, say, D3D7? You keep a separate implementation for that?

Excuse layman question, but is this going to be used by devs on Windows too? Or is everyone still going to use DX12? Is this mostly for SteamOS/Linux?

DX12 and DX11 will still have the advantage of running out-of-the-box, while getting the latest OpenGL support requires installing drivers. That's why Chrome and Firefox actually use Direct3D under the hood for WebGL support: it ensures maximum compatibility.

However, the problem isn't as bad as it was in the XP and Windows 7 days, since starting with Windows 8, Windows automatically downloads the latest WHQL-certified drivers, including the auxiliary software (Catalyst Control Center, NVidia Control Panel, 3D Vision software and so on).

Piston Hyundai

Member

sneak preview

valve: "fffffffffffffffffffff..."

frostbite, unity devs, etc, actually talk about glNext

valve: "ffffffFFFFFUCK Microsoft"

curtains

stan423321 said: but something like, say, D3D7? You keep a separate implementation for that?

D3D was never backwards compatible with previous versions, not sure what you mean here?

ashecitism

Member

So it's happening? I thought we were still in the conceptual stage of this entire thing being made, happy to be wrong.

Yeah, as I said whenever I looked at it, Khronos was looking for input from devs on what they want and all, then sometime last year AMD offered them Mantle, then last month they put up this survey to help name it. The Valve devs who are presenting are working on SteamOS and vogl, so this explains the slow updates.

...wait, that's NOT how they/you implement such stuff?

What do you mean? Currently, there is no cross-platform low-level API, so there's nothing to build a cross-vendor free software high-level OpenGL (backwards) compatibility layer upon.

I wouldn't be surprised if some individual companies implement their drivers a bit like that, but that is obviously not information anyone outside them is privy to, and it's not the same thing (and doesn't have the same usefulness) as an open source cross-vendor implementation.
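To make the "Classic OpenGL layer on top of a low-level API" idea a bit more concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: `LowLevelDevice`, `ClassicGLLayer`, and the command names are hypothetical and have nothing to do with any real glNext interface, which had not been published at the time of this thread. It only shows the shape of the idea: one shared, vendor-neutral layer that accepts immediate-mode style calls and translates them into command buffers for whatever low-level driver sits underneath.

```python
from dataclasses import dataclass, field


@dataclass
class LowLevelDevice:
    """Stand-in for a vendor-supplied low-level driver (hypothetical API)."""
    submitted: list = field(default_factory=list)

    def submit(self, command_buffer):
        # A real driver would hand this to the GPU; here we only record it.
        self.submitted.append(list(command_buffer))


class ClassicGLLayer:
    """Vendor-neutral shim exposing immediate-mode style calls (hypothetical)."""
    TRIANGLES = "triangles"

    def __init__(self, device):
        self.device = device
        self._mode = None
        self._vertices = []

    def begin(self, mode):
        if self._mode is not None:
            raise RuntimeError("begin() called twice without end()")
        self._mode = mode
        self._vertices = []

    def vertex3f(self, x, y, z):
        if self._mode is None:
            raise RuntimeError("vertex3f() outside begin()/end()")
        self._vertices.append((x, y, z))

    def end(self):
        if self._mode is None:
            raise RuntimeError("end() without begin()")
        # Translate the legacy-style call sequence into one explicit batch.
        commands = [
            ("upload_vertices", tuple(self._vertices)),
            ("draw", self._mode, len(self._vertices)),
        ]
        self.device.submit(commands)
        self._mode = None


def draw_triangle(gl):
    """Legacy-style client code that never touches the low-level device."""
    gl.begin(ClassicGLLayer.TRIANGLES)
    gl.vertex3f(0.0, 0.0, 0.0)
    gl.vertex3f(1.0, 0.0, 0.0)
    gl.vertex3f(0.0, 1.0, 0.0)
    gl.end()
```

The point of the design is that `draw_triangle` would run unchanged on any vendor's `LowLevelDevice`, so the legacy behaviour only has to be implemented and tested once.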

How come Swedes are so prominent in graphics programming? Isn't the "main guy" at Naughty Dog Swedish as well?

Wouldn't say just graphics, but tech & gamedev in general. This goes into it a little bit (the video):

https://sweden.se/culture-traditions/booming-battlefield/

Oh and Pål-Kristian Engstad at Naughty Dog is Norwegian, but close enough and he's a great guy

agiantenemycrab

Member

I think any kind of backwards compatibility would go against what this is supposed to be. Maybe you could reuse most of the shader language.

An idea I just had (so not really reflected on the viability of it or anything) of something that would be incredibly interesting IMHO is an open source / free software "Classic OpenGL" layer implemented on top of glNext. Basically, take the huge software complexity and myriad of functions of full legacy OpenGL support and put it into a single, well-tested framework which runs on every low-level glNext driver provided by each vendor.

I recall an engineer from Valve stating at SteamDevDays that they were lobbying for a standard shader IR for glNext, and that the vendors were coming around to the idea. OpenCL has SPIR, which is a superset of LLVM IR, giving it the nice property of being easily convertible to a relatively efficient bitcode. Given that the GPU vendors are already using the LLVM toolchain and are acquainted with it for their CL implementations (Intel/NVIDIA/AMD all use it for CL), I think it's possible that glNext may not have an explicit high-level shading language but just an IR, which many higher-level languages could target, and which is vendor and device neutral.

Total speculation though. Super interested in seeing what the vendors have come up with. GL has had a good run, but it's just got bigger and more complex as time has gone by. A fresh start, designed for modern (highly parallel) hardware, has been needed for a long time. I hope this is a complete revolution instead of just an incremental upgrade like Longs Peak.

The talk abstract makes it sound as though some of the vendors already have glNext implemented, given that they will be giving demos of it. So I hope these drivers (and API documentation) are released to the public after the talk. Pretty please NVIDIA!
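As a toy illustration of the "just an IR, which many higher-level languages could target" idea from the post above: the sketch below is not SPIR or LLVM IR, and every name in it (`lower_postfix`, `lower_prefix`, `run_ir`, the instruction tuples) is made up for the example. It only shows two different front-end syntaxes lowering to the same instruction list, which a single back end (standing in for a vendor's compiler) then consumes.

```python
# Maps surface-syntax operators to IR opcodes (hypothetical toy IR).
OPS = {"+": "add", "*": "mul"}


def lower_postfix(source):
    """Frontend A: postfix text such as 'a b * c +'."""
    return [(OPS[t],) if t in OPS else ("load", t) for t in source.split()]


def lower_prefix(source):
    """Frontend B: prefix text such as '(+ (* a b) c)'."""
    tokens = source.replace("(", " ( ").replace(")", " ) ").split()
    pos = 0

    def parse():
        nonlocal pos
        token = tokens[pos]
        pos += 1
        if token != "(":
            return [("load", token)]
        op = tokens[pos]
        pos += 1
        left = parse()
        right = parse()
        pos += 1  # skip the closing ')'
        return left + right + [(OPS[op],)]

    return parse()


def run_ir(ir, inputs):
    """Backend: a trivial stack interpreter standing in for a vendor compiler."""
    stack = []
    for instr in ir:
        if instr[0] == "load":
            stack.append(inputs[instr[1]])
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right if instr[0] == "add" else left * right)
    return stack.pop()
```

Both front ends produce identical IR for the same expression, so the back end never needs to know which language the shader author wrote in. That is the property the post is hoping for, shrunk down to two operators.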

It's not even the API itself that needs a revamp. The OpenGL driver model is the dinosaur that is in dire need of a replacement.

It severely undermines OpenGL's cross platform potential due to the large amount of QA and tweaking needed to get around inconsistencies between different driver implementations (see Dolphin's OGL driver report).

The new driver model introduced with DirectX 10 is a good template. Instead of having to implement the whole D3D API directly in the driver, GPU vendors had to supply only the kernel driver, which consumed mostly low level stuff like resource management. The user mode driver was developed and provided by Microsoft. This made it much easier for vendors to make highly compliant drivers. With OpenGL, GPU vendors have to implement everything, even the shader compiler.

I recall an engineer from Valve stating at SteamDevDays that they were lobbying for a standard shader IR for glNext, and that the vendors were coming around to the idea. OpenCL has SPIR, which is a superset of LLVM IR, giving it the nice property of being easily convertible to a relatively efficient bitcode. Given that the GPU vendors are already using the LLVM toolchain and are acquainted with it for their CL implementations (Intel/NVIDIA/AMD all use it for CL), I think it's possible that glNext may not have an explicit high-level shading language but just an IR, which many higher-level languages could target, and which is vendor and device neutral.

This would indeed be good. AFAIK OGL requires shaders to be compiled at runtime (still?), so having binary shaders or a compact IR would be a good thing. However, I'm not sure that Nvidia would be the one to do SPIR as they have been utterly lukewarm on OpenCL 2.0; I think it is AMD that is pushing SPIR as part of their HUMA initiatives.

Yeah, well... Apple gonna Apple.

Even when they are relatively snappy on the uptake, their OpenGL implementations leave a lot to be desired in terms of performance. They do pander to the workstation users such that even the consumer GPUs are certified for working with things like Maya etc; their drivers favor stability over anything else.

As someone that does a lot of OpenGL development on a Mac Pro (2013), I can assure you that "stability" isn't something that Apple's drivers are good at. I can think of a half dozen ways to trigger a GPU reset or hard-lock the entire machine with code (sequences of GL function calls and/or shaders) that works fine on other platforms. Same story for their OpenCL runtime; simple things like "a loop calling a function using a vec4 constant pulled from an array" will crash the GPU in a way that requires a hard reset to recover.

How come Swedes are so prominent in graphics programming? Isn't the "main guy" at Naughty Dog Swedish as well?

Demoscene, graphics and games related hackery was big in northern countries in the 90s. Finland has Remedy, Housemarque and Bugbear, for example, which were all born out of it.

As someone that does a lot of OpenGL development on a Mac Pro (2013), I can assure you that "stability" isn't something that Apple's drivers are good at. I can think of a half dozen ways to trigger a GPU reset or hard-lock the entire machine with code (sequences of GL function calls and/or shaders) that works fine on other platforms. Same story for their OpenCL runtime; simple things like "a loop calling a function using a vec4 constant pulled from an array" will crash the GPU in a way that requires a hard reset to recover.

Stability and Certification from CAD and graphics packages is what they are aiming for, whether they achieve it for other things is something else...

I'm totally with you on the OpenCL runtime, it is really bad. The terrible OpenCL implementation is so laughable considering Apple was the company that was pushing it into Khronos.

blu

Wants the largest console games publisher to avoid Nintendo's platforms.

As someone that does a lot of OpenGL development on a Mac Pro (2013), I can assure you that "stability" isn't something that Apple's drivers are good at. I can think of a half dozen ways to trigger a GPU reset or hard-lock the entire machine with code (sequences of GL function calls and/or shaders) that works fine on other platforms. Same story for their OpenCL runtime; simple things like "a loop calling a function using a vec4 constant pulled from an array" will crash the GPU in a way that requires a hard reset to recover.

Cosigned. And why I left osx behind. They have a myriad of great implementations of great technologies, but ogl is not one of them.

It severely undermines OpenGL's cross platform potential due to the large amount of QA and tweaking needed to get around inconsistencies between different driver implementations (see Dolphin's OGL driver report).

I think what OpenGL really needs is a public repository of unit tests. Like 20000 of them.

ashecitism

Member

People on reddit already in fanfic mode. Battlefront? L4D3?...HL3? Please.

Every.single.time.

agiantenemycrab

Member

People on reddit already in fanfic mode. Battlefront? L4D3?...HL3? Please.

Every.single.time.

To be fair, if I was Valve and I wanted to showcase glNext, demoing Source 2 with a glNext rendering backend would be a pretty good way to get people excited about both. So I think there is a decent chance we will see something Source 2 related at GDC. And Valve would probably use a new game to demo it as well. Showing DOTA 2 running on Source 2 would be kinda underwhelming. Again, entirely speculation with a little bit (ok, a lot) of wishful thinking.

NumberThree

Member

People on reddit already in fanfic mode. Battlefront? L4D3?...HL3? Please.

Every.single.time.

Half-Front Tournament 34, a collaboration by Valve, Epic and DICE. Made in Unity.

agiantenemycrab

Member

I know it's only slightly related, but don't devs also use OpenGL to code for PS4?

The Sony ICE Team provides libgnm for interacting with the PS4 GPU. It is similar in spirit to what AMD is trying to provide with Mantle. Much less abstract than GL. This is easier for Sony to do given they control the hardware and the OS. Sony did provide a GL-like interface for the PS3 called PlaystationGL though.

The Sony ICE Team provides libgnm for interacting with the PS4 GPU. It is similar in spirit to what AMD is trying to provide with Mantle. Much less abstract than GL. This is easier for Sony to do given they control the hardware and the OS. Sony did provide a GL-like interface for the PS3 called PlaystationGL though.

Thanks for clearing that up for me. Nevertheless I'm still excited to hear the news. Especially since Valve is among the presenters.

Cosigned. And why I left osx behind. They have a myriad of great implementations of great technologies, but ogl is not one of them.

yep. And besides all of the correctness problems, the performance of GL on OS X is terrible. I can't run CS:GO at full settings on this "high-end graphics workstation". Doom 3 will dip into the 40s in scenes with a lot of fog running at 2560x1440, and that's a game from 2004.

We'll definitely post the slides after the talk!

excellent!

ashecitism

Member

To be fair, if I was Valve and I wanted to showcase glNext, demoing Source 2 with a glNext rendering backend would be a pretty good way to get people excited about both. So I think there is a decent chance we will see something Source 2 related at GDC. And Valve would probably use a new game to demo it as well. Showing DOTA 2 running on Source 2 would be kinda underwhelming. Again, entirely speculation with a little bit (ok, a lot) of wishful thinking.

So does this mean we're finally getting Source 2 SDK? I'm one of those nutters that prefers Source over UnrealEngine to work in. Though to be fair Source 2 will probably be some kind of DOTA engine I have no interest in now.

The only Valve related talks announced so far are this, VR rendering and physics (something which they have every year or so). There's no indication Source 2 is going to be a thing there. Also, for Valve GDC is mainly going to be about Steam Machines. The moment they show Source 2 with a new game it's going to detract attention from that. I doubt they want that. Which also goes for this talk. It's a joint presentation about glNext and not unannounced Valve stuff. If they do show stuff it might be Dota 2 Source 2, but I doubt it. What I'd expect is Oxide showing a Nitrous tech demo with Star Swarm or something like that, and Epic, Unity, Frostbite showing engine stuff as well.

If Valve do show Source 2 proper this GDC that'd be great, but I'm not getting my hopes up.

ashecitism

Member

Valve employee on reddit:

http://www.reddit.com/r/linux_gamin...pengl_successor_to_be_unveiled_at_gdc/co9ilpe

I'm one of the presenters. We are planning on having recordings, they just haven't been sorted out with GDC quite yet. Hopefully should be fixed in a couple of days.

What I'd expect is Oxide showing a Nitrous tech demo with Star Swarm or something like that

I hope this happens. Can't wait for games to come out built with this engine...

vasametropolis

Member

Correct me if I'm wrong, and even if I'm right it might be a lot of work initially, but if you can create a layer that sits on top of glNext as proposed by Durante and others (as a means of supporting OpenGL games), then couldn't you create another layer to handle D3D as well, similarly to what Valve has done with D3D -> GL? Except this time high -> low level, and perhaps a lot more robust.

I think everyone would agree that they'd like to see as many existing games as possible running on SteamOS, etc. It's been said already that high -> high level is not ideal... but would this be the best way to get existing games ported in literally no time?

Edit: I guess from a DX perspective you also have to think about sound, input, and whatever else... I'm not super savvy in this area, but maybe it would be possible to have a translation from that to SDL as well that isn't completely awful.

Edit 2: I was reading the Aspyr guy's comments in the reddit post and it seems more or less true that this is completely feasible... although he says he might be out of a job if it happens LOL.

I never saw this particular slide set before, but now after going through it I'm inordinately hyped for glNext. This can only end in disappointment

A lot of relevant people seem to feel strongly about it:

ashecitism

Member

haha, they mention St. John's and Geldreich's blog posts.

ScepticMatt

Member

Valve employee on reddit:

The comment was a response to "vault recordings", which aren't publicly available for a year.

McDonald didn't specify which type of recording it was, though.

Just in time for next-gen consoles it seems.

Consoles don't use OpenGL. Not sure if they'll support glNext, even after the Unity image above.

ashecitism

Member

It's a sponsored session and apparently recordings of those are often available immediately.

Robert Hallock is apparently claiming that Mantle/glNext/DX12 all possess or will possess the ability, if developers choose to implement it in their games, to fully combine multi-GPU memory.

Ultimately the point is that gamers believe that two 4GB cards can't possibly give you the 8GB of useful memory. That may have been true for the last 25 years of PC gaming, but that's not true with Mantle and it's not true with the low overhead APIs that follow in Mantle's footsteps.
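The arithmetic behind the "two 4GB cards" point can be sketched as follows. This is a back-of-the-envelope model, not anything from Mantle or DX12 documentation: the function names are hypothetical, and the 1 GB of data that both GPUs need is an assumed figure. With driver-managed alternate frame rendering, each GPU keeps its own full copy of the resources, so the usable pool is the size of the smallest card. With explicit developer control, only the data both GPUs actually need has to be duplicated.

```python
def usable_memory_mirrored(per_gpu_gb):
    """Every GPU mirrors all resources, so the smallest card is the limit."""
    return min(per_gpu_gb)


def usable_memory_split(per_gpu_gb, shared_gb):
    """Only shared_gb is duplicated on each GPU; the rest holds unique data."""
    if shared_gb > min(per_gpu_gb):
        raise ValueError("shared data does not fit on the smallest GPU")
    return shared_gb + sum(capacity - shared_gb for capacity in per_gpu_gb)


cards = [4, 4]  # two 4 GB cards, as in the quote above
mirrored = usable_memory_mirrored(cards)
split = usable_memory_split(cards, shared_gb=1)  # assumed 1 GB needed by both
```

Under these assumptions the mirrored setup gives 4 GB of distinct data and the explicit split gives 7 GB. The full 8 GB is only reached when nothing at all has to be duplicated, which is why the developer effort mentioned later in the thread matters.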

alexandros

Banned

Now that would be fantastic.

Just in time for next-gen consoles it seems.

?

#1 consoles already have their own low level APIs

#2 this OpenGL version is coming very late, much later than next-gen consoles. "Just in time" would be last year or even late 2013.

Robert Hallock is apparently claiming that Mantle/glNext/DX12 all possess or will possess the ability, if developers choose to implement it in their games, to fully combine multi-GPU memory.

Sure, but that would actually require developers to manually support multi-gpu configurations. I don't know how many will bother with that.

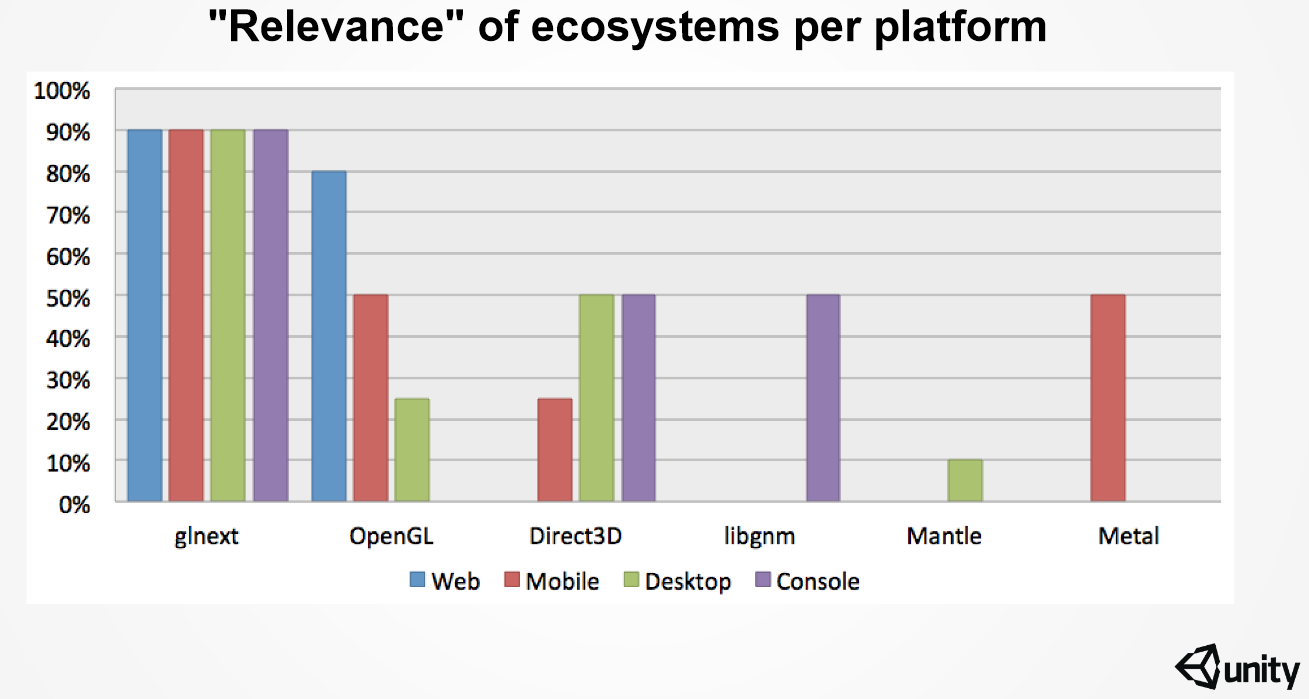

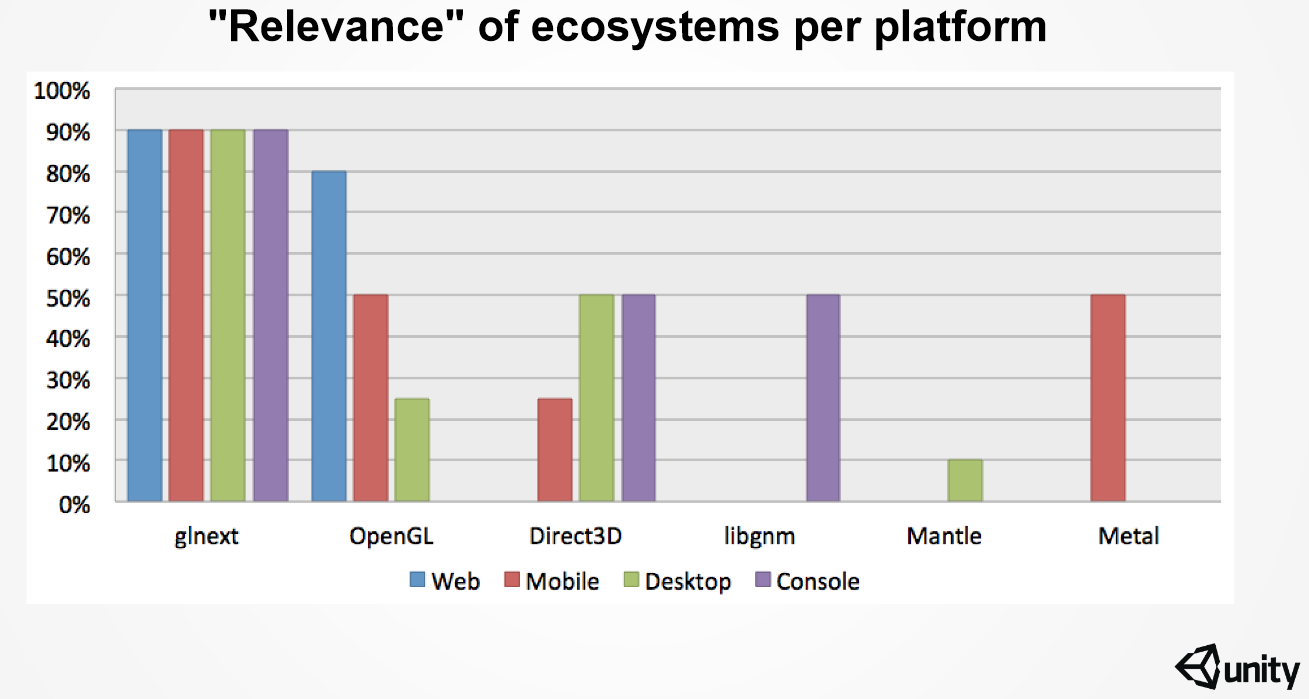

(If you look at the "relevance" chart above, you can see an entry for "multi-GPU" which is like 1/2 the size of the Mantle one)

Dictator93

Member

Sure, but that would actually require developers to manually support multi-gpu configurations. I don't know how many will bother with that.

(If you look at the "relevance" chart above, you can see an entry for "multi-GPU" which is like 1/2 the size of the Mantle one)

One cool thing about that type of multi-GPU is that it would, on a certain level, allow games to deliver future scaling graphics options whilst testing well in house. IMO, still would be cool.

Nvidia made mention in a PCper interview recently that stuff like SFR will see a return for certain games under DX12. Too bad by that point in time I will no longer have SLI...

One cool thing about that type of multi-GPU is that it would, on a certain level, allow games to deliver future scaling graphics options whilst testing well in house. IMO, still would be cool.

I completely agree that it would be cool, I just don't think many developers will bother with it.

The best you can hope for IMHO is that the widely used engines (UE4, Unity) implement solid support, and that individual games don't break it with their customizations.

DX12 and DX11 will still have the advantage of running out-of-the-box, while getting the latest OpenGL support requires installing drivers. That's why Chrome and Firefox actually use Direct3D under the hood for WebGL support: it ensures maximum compatibility.

However, the problem isn't as bad as it was in the XP and Windows 7 days, since starting with Windows 8, Windows automatically downloads the latest WHQL-certified drivers, including the auxiliary software (Catalyst Control Center, NVidia Control Panel, 3D Vision software and so on).

Actually, last I heard the versions of drivers supplied by Windows Update usually had OpenGL stripped from them. Not sure if that's still true.

Actually, last I heard the versions of drivers supplied by Windows Update usually had OpenGL stripped from them. Not sure if that's still true.

That was back in the Windows 7 days, when only the barebones driver DLL was supplied by Microsoft in order to enable Aero and it rarely got updated (if ever). Windows 8.1 actually installs the full thing, bloatware included. It even shows up in the add/remove programs list, AFAIK. I don't think they remove OpenGL from those, but I haven't tested it.

At least that was my experience with a NVidia GPU. I saw the "3D Vision Photo Viewer" icon show up automatically on the desktop just a couple minutes after a fresh install.