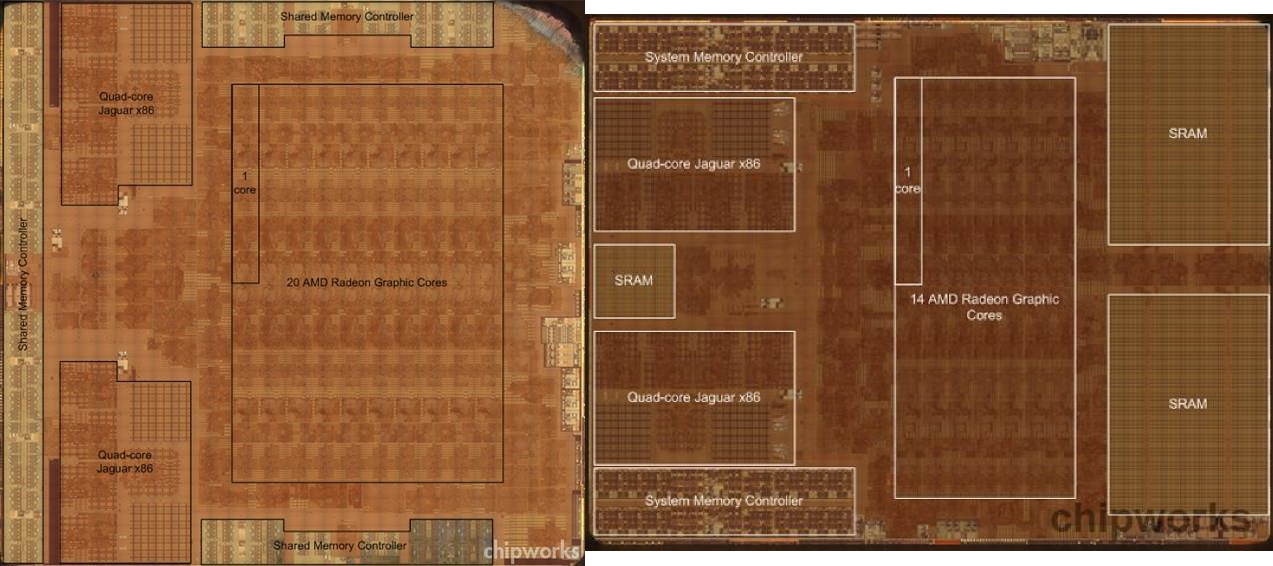

What exactly am I looking at here?

Something like this?

Insane to think about it.

But in this case it's combined with the GPU and other subsystems. But yeah, you can see the die architecture exposed in your picture; what we have is just a better head-on scan of it.

I've decapped CPUs and was sometimes able to see this stuff; it was so cool. SIM cards are a good way to see it, since the chip's architecture is easy to make out (break apart the plastic, go for the tiny square in the middle, peel off some glue, and you can already see it).

Better shots of that Cell

You can see the memory blocks on that too: the square-ish stuff in each SPE.