To ensure that Direct3D 12 could support the widest possible range of hardware without compromises that might limit the longevity of the new API, Microsoft and its partners decided to divide support for the new resource-binding model into three tiers.

Each tier is a superset of its predecessor: tier 1 hardware is subject to the strictest constraints on the resource-binding model, tier 3 has no limitations, and tier 2 sits in between with an intermediate level of restrictions.

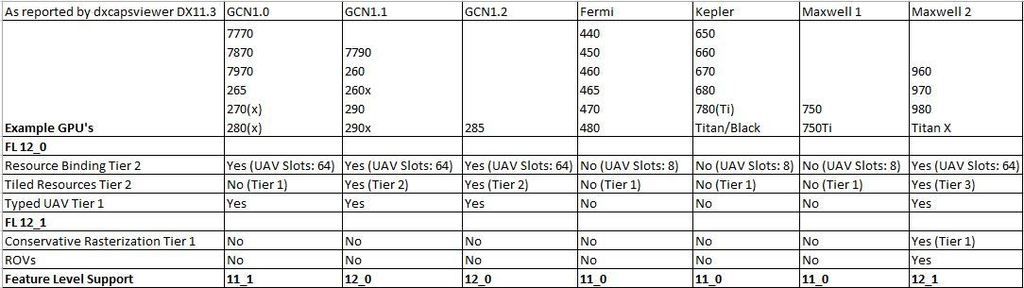
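Because each tier is a superset of the one before it, checking whether a device is good enough for a given rendering path comes down to an ordered comparison. The following is a minimal sketch of that idea, not an excerpt from any SDK: `BindingTier`, `meetsTier` and `describeTier` are hypothetical names introduced here so the example compiles without the Windows headers. In a real application the tier would presumably be read from the `ResourceBindingTier` field of `D3D12_FEATURE_DATA_D3D12_OPTIONS`, filled in by `ID3D12Device::CheckFeatureSupport`.

```cpp
#include <string>

// Hypothetical stand-in for D3D12_RESOURCE_BINDING_TIER, so this sketch
// builds without the Windows SDK. The numeric values mirror the tier numbers.
enum class BindingTier { Tier1 = 1, Tier2 = 2, Tier3 = 3 };

// Every tier is a superset of the previous one, so "does the device satisfy
// the tier I need?" reduces to an ordered comparison of the two values.
constexpr bool meetsTier(BindingTier supported, BindingTier required) {
    return static_cast<int>(supported) >= static_cast<int>(required);
}

// Short human-readable summary, following the description in the text above.
inline std::string describeTier(BindingTier tier) {
    switch (tier) {
        case BindingTier::Tier1: return "tier 1: strictest limits";
        case BindingTier::Tier2: return "tier 2: intermediate limits";
        case BindingTier::Tier3: return "tier 3: no hardware limits";
    }
    return "unknown tier";
}
```

With this helper, an engine that needs at least tier 2 would call `meetsTier(deviceTier, BindingTier::Tier2)` once at start-up and fall back to a more conservative binding layout when the result is `false`.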

For hardware currently on the market, the resource-binding tiers are as follows:

Tier 1: Intel Haswell and Broadwell, NVIDIA Fermi

Tier 2: NVIDIA Kepler, Maxwell 1.0 and Maxwell 2.0

Tier 3: AMD GCN 1.0, GCN 1.1 and GCN 1.2

As far as resource binding is concerned, only AMD GPUs currently come without hardware limitations, something that some sources have erroneously described as "full support".