copelandmaster

Y'all know that AMD is not the way to go if you do anything other than game on your card, right?

For instance, Blender Cycles and Octane Render (and the Octane for Blender plugin) don't support AMD officially. That super sucks and puts people like me out of camp red entirely, value be damned.

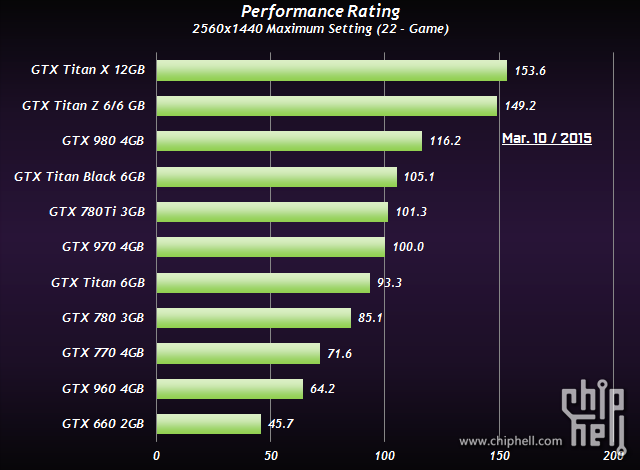

12 GB GPUs are what the VFX industry has been waiting for. The X is like an M6000, except way cheaper. That's a pretty good value if you're a VFX hobbyist.