dr. apocalipsis


eDRAM is only available on Intel's highest-performance iGPU (GT3e), yet we are comparing it to Richland/Trinity, which are mid-range parts. Like I said, Kaveri, which is more competitive with the i7-4x50HQ, should wipe the floor with it when it comes to GPU performance.

Nope, they are caused by the lack of frame pacing/metering. Kepler has frame metering built into the silicon, which is why micro-stuttering isn't a big issue with Nvidia's cards, and AMD has already acknowledged the issue. In fact, we should get a driver this month that fixes the micro-stuttering, which, again, is a multi-GPU problem, and I don't know why you keep bringing it up.
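To illustrate what "frame pacing" means in the post above, here is a minimal software sketch, assuming a made-up two-GPU alternate-frame-rendering (AFR) trace in which frames finish in pairs. It is a generic illustration, not Nvidia's hardware frame metering or AMD's driver fix: it only delays each present so frames reach the screen at even intervals, which removes the jitter while leaving the average frame rate roughly unchanged. The helper names `intervals` and `pace` are hypothetical.

```python
from statistics import mean, pstdev


def intervals(times):
    """Gaps (ms) between consecutive timestamps."""
    return [b - a for a, b in zip(times, times[1:])]


def pace(completion_times, target_interval):
    """Delay each present so it lands at least target_interval ms after the previous one.

    A frame is never shown before it finishes rendering, so presents can only move later.
    """
    presents = []
    for t in completion_times:
        if presents:
            t = max(t, presents[-1] + target_interval)
        presents.append(t)
    return presents


# Hypothetical two-GPU AFR trace: frames finish in pairs 5 ms apart,
# one pair every 33.3 ms, so the average rate looks like ~60 FPS.
raw = []
for i in range(8):
    start = i * 33.3
    raw += [start, start + 5.0]

paced = pace(raw, target_interval=33.3 / 2)

for name, times in (("raw", raw), ("paced", paced)):
    gaps = intervals(times)
    print(f"{name:>5}: mean gap {mean(gaps):5.2f} ms, jitter (std dev) {pstdev(gaps):5.2f} ms")
```

The raw trace alternates 5 ms and 28.3 ms gaps (the classic micro-stutter pattern), while the paced trace shows nearly constant gaps. The trade-off is that some frames are held back, which adds a little latency.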

Once again, you seem to gloss over the reason why embedded RAM was incorporated.

This is information coming straight from Intel. iGPUs can't run solely off DDR3 RAM because they would be bandwidth-starved. This isn't even up for discussion; it's a fact. eDRAM is implemented to help with the bandwidth issues; the low latency is just a side effect of it being on die.
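The bandwidth argument comes down to simple arithmetic: peak bandwidth is the transfer rate multiplied by the bus width in bytes. The sketch below assumes two illustrative configurations that are not taken from the thread: a typical dual-channel DDR3-1600 system (which an iGPU shares with the CPU) and a hypothetical mid-range discrete card with 256-bit GDDR5 at 6000 MT/s. The function name `peak_bandwidth_gbs` is made up for this example.

```python
def peak_bandwidth_gbs(mega_transfers_per_s, bus_width_bits):
    """Theoretical peak bandwidth in GB/s (decimal): transfer rate x bytes per transfer."""
    bytes_per_transfer = bus_width_bits // 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


# Assumed configurations, for illustration only.
ddr3_dual_channel = peak_bandwidth_gbs(1600, 128)  # DDR3-1600, 2 x 64-bit channels
gddr5_card = peak_bandwidth_gbs(6000, 256)         # hypothetical mid-range discrete card

print(f"dual-channel DDR3-1600: {ddr3_dual_channel:.1f} GB/s (shared with the CPU)")
print(f"256-bit GDDR5 @ 6000 MT/s: {gddr5_card:.1f} GB/s (dedicated to the GPU)")
```

These are theoretical peaks; real sustained bandwidth is lower, and in the iGPU case the CPU competes for the same pool.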

I'm done here. You sound like a car dealer, changing the subject every time I find a flaw in your argument.

Also, if it's proper 6T-SRAM, which the XB1's ESRAM is widely speculated to be, then you could be looking at a latency of roughly 10-15 cycles. It could be slightly higher; I really have no idea, but we are most likely in the right range even if my specific number is off. I know people hate this, but I'm going to post a comment from a game developer, and I don't care how offended people are by my attempt to use an actual game creator's comments to support what I'm saying.

That figure is pretty unrealistic. Latency should fall somewhere between L2 and main RAM, not be better than L2. Even triple what you suggest would be awesome for the One.

I am pretty sure that bandwidth is the only truly relevant reason why embedded memory exists in GPUs. For instance, you can fetch textures from main memory while concurrently drawing pixels to a render target in the eSRAM, without the two interfering. However, these scenarios are limited by the eSRAM's size. People mention many possible scenarios but forget that you can't implement them all at the same time. Without knowing the concrete numbers, I am pretty sure that the eSRAM is not big enough to serve as a texture cache and hold render targets at the same time. For instance, the G-buffers of Killzone Shadow Fall already take up 40 MB of RAM, and its back buffers take an additional 31 MB. That simply won't fit in the eSRAM, even without storing any textures in it.
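A quick size check shows why render targets fill 32 MB so fast (and the combined 40 + 31 MB quoted above is more than double that). The layout below is a hypothetical deferred-rendering G-buffer at 1080p, four 32-bit colour targets plus a 32-bit depth/stencil buffer; it is an assumption for illustration, not Killzone Shadow Fall's actual format. The target names and the `target_bytes` helper are made up.

```python
MIB = 1024 * 1024


def target_bytes(width, height, bytes_per_pixel):
    """Size in bytes of one uncompressed render target."""
    return width * height * bytes_per_pixel


# Hypothetical 1080p G-buffer layout: bytes per pixel for each target.
layout = {
    "albedo": 4,
    "normals": 4,
    "material": 4,
    "lighting": 4,
    "depth_stencil": 4,
}

total = sum(target_bytes(1920, 1080, bpp) for bpp in layout.values())
esram = 32 * MIB

print(f"G-buffer: {total / MIB:.1f} MiB vs eSRAM: {esram / MIB:.0f} MiB, fits: {total <= esram}")
```

Even this modest layout (about 7.9 MiB per 32-bit target at 1920x1080) overflows 32 MiB before any back buffer, shadow map, or texture is counted.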

Don't you think the eSRAM here is going to be used as a next-level cache?

As I see it, it won't be used to store buffers as on the PS2 or 360, but as a cache, and in that scenario 32 MB is more than enough for a 95% hit rate, according to Intel. People are forgetting that the DDR3 in the One runs at 2100 MHz, which is more than enough to avoid bottlenecking a mid-tier GPU like the One's APU and to handle buffers, especially with the extra logic included to move data.
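If the eSRAM really behaved as a cache (the poster's speculation, not an established fact about the One), a hit rate h would cut the traffic reaching DDR3 to a fraction (1 - h) of the demand, and the average access latency would be h * t_hit + (1 - h) * t_miss. The sketch below assumes the hit rate applies uniformly to bytes transferred; the demand figure and the latencies are hypothetical placeholders, and the function names are made up.

```python
def dram_traffic_gbs(demand_gbs, hit_rate):
    """Bandwidth that still has to come from DRAM when hit_rate of traffic hits the cache."""
    return demand_gbs * (1.0 - hit_rate)


def avg_latency(hit_latency, miss_latency, hit_rate):
    """Average access latency: h * t_hit + (1 - h) * t_miss."""
    return hit_rate * hit_latency + (1.0 - hit_rate) * miss_latency


HIT_RATE = 0.95          # the figure quoted above
demand = 100.0           # hypothetical GPU memory demand, GB/s
t_hit, t_miss = 40, 400  # hypothetical latencies in GPU cycles

print(f"DRAM traffic: {dram_traffic_gbs(demand, HIT_RATE):.1f} GB/s of {demand:.0f} GB/s")
print(f"average latency: {avg_latency(t_hit, t_miss, HIT_RATE):.1f} cycles")
```

With these placeholder numbers, only 5 GB/s of a 100 GB/s demand would reach DDR3, which is the effect the "more than enough" claim relies on. A lower real hit rate would scale DRAM traffic up proportionally.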

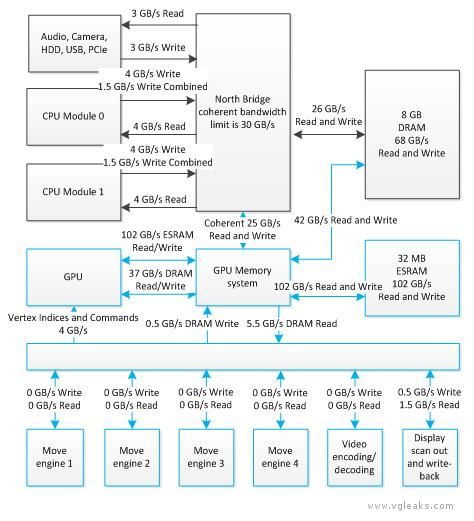

I'm looking at the One's diagrams, but they aren't very detailed. Can the CPU also use this eSRAM in a useful way? Having that pool of cache could lead to minor improvements in the CPU's IPC, starved as it is with 2 MB of L2.

I don't think they're on the same die though.

They aren't on the same die for fab convenience. That way, they can produce both the Iris Pro 5200 and the HD 4600 on the same line, then choose which to mount. And in the future, they can still fab the eDRAM on the older node.