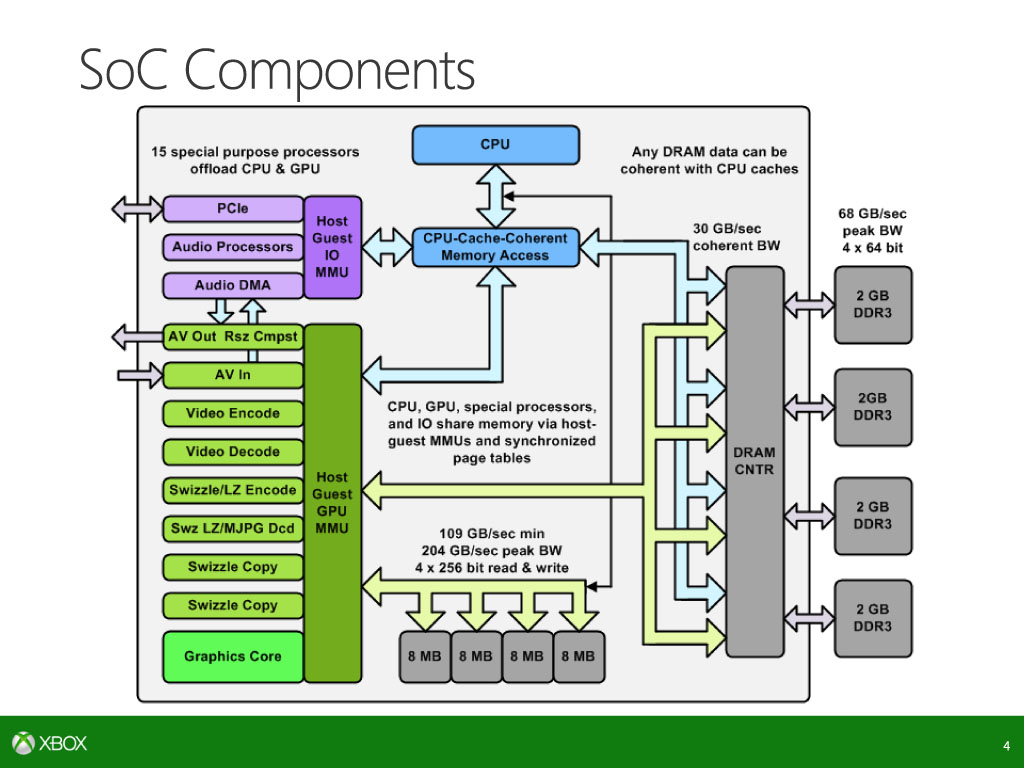

My guess? The Xbox One's ESRAM could be used somewhat like a cache, much like the EDRAM in Intel's new Haswell chips. Because it isn't a traditional cache but a general-purpose scratchpad, though, it more likely means that developers can choose to keep certain important pieces of data (data that might otherwise sit in the CPU's caches) in a much larger and reasonably similar pool of memory, such as the Xbox One's 32MB of ESRAM. That would cut down on cache misses, which otherwise force the CPU to fetch its data from slower main memory.
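To make the cache-versus-scratchpad distinction concrete, here is a toy Python model. It is not a description of how the Xbox One actually works. It assumes a hypothetical 4-slot memory managed two ways: an LRU cache, where the hardware decides what stays resident, and a scratchpad, where the developer explicitly pins data. The class names, capacity, and access trace are all made up for illustration.

```python
from collections import OrderedDict


class LRUCache:
    """Toy hardware-managed cache: the hardware decides what stays resident."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()
        self.misses = 0

    def access(self, addr):
        if addr in self.lines:
            self.lines.move_to_end(addr)  # hit: mark as most recently used
        else:
            self.misses += 1  # miss: fetch from (slow) main memory
            self.lines[addr] = True
            if len(self.lines) > self.capacity:
                self.lines.popitem(last=False)  # evict the least recently used line


class Scratchpad:
    """Toy software-managed scratchpad: the developer pins data explicitly."""

    def __init__(self, capacity, pinned):
        if len(pinned) > capacity:
            raise ValueError("pinned set does not fit in the scratchpad")
        self.pinned = set(pinned)
        self.slow_accesses = 0

    def access(self, addr):
        if addr not in self.pinned:
            self.slow_accesses += 1  # not placed in the scratchpad: go to main memory


def run(trace, memory):
    for addr in trace:
        memory.access(addr)


trace = list(range(6)) * 10  # hypothetical hot loop over 6 data items
lru = LRUCache(capacity=4)
sp = Scratchpad(capacity=4, pinned=[0, 1, 2, 3])
run(trace, lru)
run(trace, sp)
print(lru.misses, sp.slow_accesses)  # 60 20
```

With a hot loop slightly larger than the cache, LRU evicts each item just before it's needed again, so every access misses, while the pinned scratchpad keeps four of the six items resident no matter what. The flip side is that a real cache adapts automatically when the access pattern changes; with a software-managed pool, devs take that job on themselves.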

Between the two four-core Jaguar clusters, you're dealing with a total of:

- 512KB of L1 cache (divided evenly between the two clusters)
- 4MB of L2 cache (also divided evenly between the two clusters)

32MB of ESRAM looks like mighty attractive assistance for that CPU in cases where there's simply no space left in either of those caches for the relevant data.
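A quick back-of-the-envelope check of those totals, assuming the standard Jaguar layout of 32KB instruction + 32KB data L1 per core and 2MB of shared L2 per four-core cluster:

```python
KB = 1024
MB = 1024 * KB

clusters = 2
cores_per_cluster = 4
l1_per_core = 32 * KB + 32 * KB  # 32KB instruction + 32KB data L1 per Jaguar core
l2_per_cluster = 2 * MB          # L2 shared by the 4 cores of one cluster
esram = 32 * MB

total_l1 = l1_per_core * cores_per_cluster * clusters
total_l2 = l2_per_cluster * clusters

print(total_l1 // KB, "KB of L1")                    # 512 KB of L1
print(total_l2 // MB, "MB of L2")                    # 4 MB of L2
print(esram // total_l2, "x the total L2 capacity")  # 8 x the total L2 capacity
```

By that count the ESRAM is 8 times the combined L2 capacity, although capacity alone says nothing about latency, which is what would really matter for the CPU use speculated about here.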

http://www.realworldtech.com/intel-dram/

Maybe that's what that line implies. Perhaps MS and AMD did something similar to what Intel did with the EDRAM in Haswell, though it could be any number of things, really. For example, you know how the PS4 has that Onion+ bus that allows it to bypass the GPU's caches? Maybe this is similar, only in reverse: maybe it's the CPU's cache that's being bypassed, with data fed to the CPU straight from the ESRAM, which would again make it similar to what Intel did with Haswell. I think it's interesting that the line starts from above the CPU Cache Coherent Memory Access block. Maybe ESRAM data can be delivered directly into the CPU's registers.

A major mistake people make with my posts in general is assuming I care how much stronger the PS4 is, or how much weaker the Xbox One is. I've never particularly cared about that. Maybe I share a lot of the blame for not making it clearer, but my very first post on this forum says precisely that: I don't think it matters how the Xbox One compares or matches up to the PS4. What I think matters far more is that it's a big enough jump over the Xbox 360, and that Microsoft is giving developers enough power to make incredible next-generation games. When I say things like that, people say "Oh my god, listen to that MS PR!!!" "Get the shill!!" etc. When I post Dave Baumann's comment, it isn't to say "OMG, look, the Xbox One's graphics performance will be far and away better than a 7790, which means it could threaten the PS4." No, far from it. If, as Dave Baumann says, the Xbox One's graphics subsystem will far and away outstrip even a Radeon 7790 when devs make proper use of the ESRAM, then this largely applies to the PS4 as well, even though it isn't an EDRAM or ESRAM design. If the Xbox One, at around 1.2/1.3 TFLOPS, carries in Dave's view the real potential to outperform a 7790, then the PS4's GPU will likely far and away outstrip an even stronger AMD GPU when all is said and done.

The general point of many of my posts on this particular subject has not been to say that the Xbox One will be stronger than, or just as strong as, the PS4. No... I'm not trying to "close that gap," as some have accused me of doing. My intent has always been to point out that the Xbox One's graphics performance, despite flawed comparisons to the Radeon 7770, or even to a desktop Radeon 7790 (which looks better on paper in many respects), will not only likely surprise people but is likely to outperform both of those desktop cards in the long run.
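For reference, the "on paper" numbers come from the usual peak-throughput formula for AMD's GCN GPUs: compute units × 64 shader lanes × 2 FLOPs per fused multiply-add × clock speed. The CU counts and clocks below are the commonly reported specs (the Xbox One GPU was announced at 800 MHz and later raised to 853 MHz, hence "1.2/1.3"). This sketch only computes theoretical peaks. It captures nothing about ESRAM, bandwidth, or how well devs use the hardware, which is the whole point above.

```python
def peak_tflops(compute_units, clock_ghz):
    """Theoretical peak single-precision TFLOPS for a GCN GPU.

    Each compute unit has 64 shader lanes, and a fused multiply-add
    counts as 2 FLOPs per lane per clock.
    """
    lanes = compute_units * 64
    gflops = lanes * 2 * clock_ghz  # FLOPs per clock x GHz = GFLOPS
    return gflops / 1000            # GFLOPS -> TFLOPS


# (compute units, clock in GHz), as commonly reported
gpus = {
    "Xbox One (800 MHz)": (12, 0.800),
    "Xbox One (853 MHz)": (12, 0.853),
    "Radeon HD 7770": (10, 1.000),
    "Radeon HD 7790": (14, 1.000),
    "PS4": (18, 0.800),
}

for name, (cus, ghz) in gpus.items():
    print(f"{name}: {peak_tflops(cus, ghz):.2f} TFLOPS")
```

That works out to roughly 1.23/1.31 TFLOPS for the Xbox One, 1.28 for the 7770, 1.79 for the 7790, and 1.84 for the PS4, which is why the 7790 "looks better on paper."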

In conclusion: I don't care about the comparison between the PS4 and the Xbox One. You don't have to be a genius to see that the PS4 is the noticeably stronger machine. I just like to point out, and I think rightfully so, that the Xbox One is going to be a hell of a lot stronger as a gaming system than people are giving it credit for. It won't outclass the PS4, but it will sure as hell still surprise people with what it can do. That's why I bring up comments on Beyond3D from people who have developed games, or are currently developing them, when they talk about the potential of the Xbox One, and that's why I post Dave Baumann's comment on the Xbox One. And after talking to a friend in first-party development for Xbox One, and listening to what they have to say about the performance implications of low-latency ESRAM, among other things, I continue to believe that the Xbox One is a pretty damn powerful game console. After seeing what devs did with 10MB of EDRAM on the 360, I will not have anyone telling me that 32MB of even more versatile, low-latency ESRAM is suddenly "useless," or will hardly make a difference because it's too small.
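Whether 32MB is "too small" depends on what you put in it. As a rough sketch, assuming a 4-byte color target plus a 4-byte depth/stencil buffer per sample (a simplification; real engines often use more render targets), this is how much memory a basic framebuffer needs at a few settings:

```python
MB = 1024 * 1024


def framebuffer_bytes(width, height, bytes_per_pixel=4 + 4, msaa_samples=1):
    """Assumed layout: RGBA8 color (4 bytes) + D24S8 depth/stencil (4 bytes) per sample."""
    return width * height * bytes_per_pixel * msaa_samples


cases = [
    ("720p, no MSAA", 1280, 720, 1),
    ("720p, 4x MSAA", 1280, 720, 4),
    ("1080p, no MSAA", 1920, 1080, 1),
]

for label, w, h, samples in cases:
    size = framebuffer_bytes(w, h, msaa_samples=samples) / MB
    print(f"{label}: {size:.1f} MB  fits 10MB: {size <= 10}  fits 32MB: {size <= 32}")
```

Under those assumptions, a 720p framebuffer fits in the 360's 10MB only without MSAA, while a plain 1080p one needs roughly 16MB and fits comfortably in 32MB.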

The challenge others have is to not assume that, just because I'm hopeful and excited to see how the Xbox One performs, I'm somehow doubting the power level of the PS4. To use a DBZ reference, I know full well that the PS4 is Goku and the Xbox One is Vegeta. Super Saiyan Goku vs. Majin Vegeta represents the two systems in a multi-platform game. Super Saiyan 3 Goku is the PS4 in an exclusive.