-

Hey, guest user. Hope you're enjoying NeoGAF! Have you considered registering for an account? Come join us and add your take to the daily discourse.

You are using an out of date browser. It may not display this or other websites correctly.

You should upgrade or use an alternative browser.

You should upgrade or use an alternative browser.

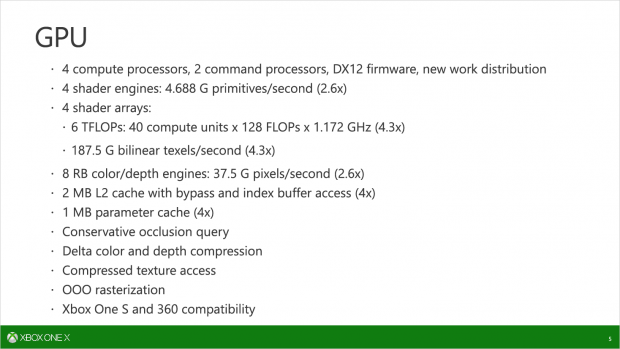

Full details of the Scorpio engine in Xbox one X from Hotchips conference 2017

- Thread starter Kayant

- Start date

LukasTaves

Member

I don't think that's true and it doesn't make sense either (due to unified shaders). 3TF for vertex shaders alone sounds like an overkill. Vertices don't need that much processing power.

There are 3TF available for all types of shaders. Still plenty enough for old, unpatched games.

ps: You also forgot to mention geometry shaders.

I agree it doesn't make much sense, but that's what the documentation said.

I don't recall the thread but it was something like that:

Xbox One BC with very old SDKs will have the full gpu exposed, but with that odd limit.

Xbox One BC with the (I think it was June 2017) SDK would have the full gpu exposed in a unified way.

Xbox One games with the October SDK will be able to target xbonex specifically.

It's like the PS4 & Xbox One CPU being made up of 2 Jaguar clusters but in this case PS4 Pro GPU is made up of 2 PS4 GPU size clusters, this is pretty much fact I'm not sure what the argument is at the moment but when I said this a year ago & said that I would guess that it would be 64 ROPs people didn't think it would be but right now everything points to it being 64 ROPs.Nah, it's ~52 NGCs glued together.

...There is zero reason to put two PS4 GPUs next to each other when you are building a single chip SoC system.

That's because they are counting the full render time, which includes vertex and other work that won't scale at all with resolution.

CB will be costlier than just rendering half the pixel load, because it has do to something extra (but even a simple software upscale would), but the extension of that is currently undisclosed.

Why wouldn't they use the full render time?

The ROPs not telling the full story wasn't aimed at you, I said it doesn't tell the full story as the post I quoted mentioned advertising it as an advantage, to which I responded with "it doesn't tell the whole story about how the GPU performs as there are other variables at play depending on the software".

Oh

LukasTaves

Member

Why wouldn't they use the full render time?

They should use the total render time, I'm just saying the total render time is not useful to draw comparison on how much CB saves on the stuff that is resolution dependent.

Edit: And it has been confirmed Pro's GPU have 64 ROPs? Seems like a utterly waste, considering how little the bandwidth increased.

rokkerkory

Member

I absolutely love threads like this even though I understand like 10% of it haha. But still learn a bit more each time. Thanks for the good dialogue folks.

dr_rus

Member

It's like the PS4 & Xbox One CPU being made up of 2 Jaguar clusters but in this case PS4 Pro GPU is made up of 2 PS4 GPU size clusters, this is pretty much fact I'm not sure what the argument is at the moment but when I said this a year ago & said that I would guess that it would be 64 ROPs people didn't think it would be but right now everything points to it being 64 ROPs.

There is no "PS4 GPU size cluster" (contrary to a 4 core Jaguar CPU module which is actually a thing) and the sole fact that Neo's SIMDs are different (FP16x2 support for example) is enough to make Neo's GPU a completely new piece of silicon, in no way related to what's present in PS4. ROPs in all versions of GCN architecture are decoupled from shader core anyway so it doesn't matter if there's 64 or 32 or 48 - this doesn't mean that Neo's GPU is "2 modified PS4 GPUs next to each other" either.

Sony's inability to effectively abstract PS4's h/w to avoid running weird h/w configurations like half GPU reservation on Neo for titles running in legacy mode is nothing more than a sign of PS4's APIs weaknesses and this is done via the (OS/driver/firmware level) software, not by putting "2 modified PS4 GPUs next to each other".

There is no "PS4 GPU size cluster" (contrary to a 4 core Jaguar CPU module which is actually a thing) and the sole fact that Neo's SIMDs are different (FP16x2 support for example) is enough to make Neo's GPU a completely new piece of silicon, in no way related to what's present in PS4. ROPs in all versions of GCN architecture are decoupled from shader core anyway so it doesn't matter if there's 64 or 32 or 48 - this doesn't mean that Neo's GPU is "2 modified PS4 GPUs next to each other" either.

Sony's inability to effectively abstract PS4's h/w to avoid running weird h/w configurations like half GPU reservation on Neo for titles running in legacy mode is nothing more than a sign of PS4's APIs weaknesses and this is done via the (OS/driver/firmware level) software, not by putting "2 modified PS4 GPUs next to each other".

How is it not a PS4 GPU size cluster when Cerny said that it's a mirror of it's self placed next to it? that's 2 18 CU clusters next to each other & why are you telling me about the difference in the GPU as if I'm saying that it's actually 2 PS4 GPUs in the PS4 Pro?

LordOfChaos

Member

There is no "PS4 GPU size cluster" (contrary to a 4 core Jaguar CPU module which is actually a thing) and the sole fact that Neo's SIMDs are different (FP16x2 support for example) is enough to make Neo's GPU a completely new piece of silicon, in no way related to what's present in PS4. ROPs in all versions of GCN architecture are decoupled from shader core anyway so it doesn't matter if there's 64 or 32 or 48 - this doesn't mean that Neo's GPU is "2 modified PS4 GPUs next to each other" either.

Sony's inability to effectively abstract PS4's h/w to avoid running weird h/w configurations like half GPU reservation on Neo for titles running in legacy mode is nothing more than a sign of PS4's APIs weaknesses and this is done via the (OS/driver/firmware level) software, not by putting "2 modified PS4 GPUs next to each other".

That was actually an early weakness of GCN, ROPs being tied to CU counts. This was addressed in later versions. It's possible the Pro plucked from the then-near-future feature release and decoupled them, just like they borrowed 8 ACEs from the future.

In fact I'd bet on that. If the Pro doubled ROP pixel throughput, I'd think they would have talked about it, just like they talked about shading performance, FP16, and memory bandwidth. The PS4 was already very overkill for 1080p with 32. Perhaps, likely, ROPs were never the limit to scaling up to double that.

Also 32 being overkill for 1080p also fits in with PSVR needing around double that throughput, same with what the PS4 Pro usually ends up rendering at pre-checkerboarding.

dr_rus

Member

Every GCN GPU has half of CUs placed as a mirror on another side of the chip. PS4 GPU is arranged in the exact same fashion, with 9+9 CUs on different sides. 18 CUs means nothing as these are different CUs.How is it not a PS4 GPU size cluster when Cerny said that it's a mirror of it's self placed next to it? that's 2 18 CU clusters next to each other & why are you telling me about the difference in the GPU as if I'm saying that it's actually 2 PS4 GPUs in the PS4 Pro?

GCN never had ROPs tied to CU counts. Tahiti (the first GCN GPU) had 32 CUs and 32 ROPs while Pitcairn (the second GCN GPU) had 20 CUs and 32 ROPs. Hawaii had 44 CUs and 64 ROPs.That was actually an early weakness of GCN, ROPs being tied to CU counts. This was addressed in later versions. It's possible the Pro plucked from the then-near-future feature release and decoupled them, just like they borrowed 8 ACEs from the future.

If you're thinking about changes made to GCN in Vega/GCN5 then I'm quite sure that ROPs/MCs used in Neo's GPU were in fact from Polaris and not GCN5 as these were made to work with HBM2 and not GDDR5 which is used in PS4Pro. It's also somewhat telling that they haven't mentioned ROVs and CR support in PS4Pro - both are ROP features and both are added in GCN5. Both would be pretty useless for a system designed to run PS4 games in higher resolution though.

It's rather unlikely that they are using more than 32 ROPs in Neo for a simple reason of peak memory bandwidth not being significantly higher than in PS4. With just 217GB/s of bandwidth putting more than 32 ROPs in the chip would likely be an overkill as the backend isn't in fact that much limited by the pixel output as it is limited by other things like memory bandwidth and the need to run long shaders over a course of several cycles.In fact I'd bet on that. If the Pro doubled ROP pixel throughput, I'd think they would have talked about it, just like they talked about shading performance, FP16, and memory bandwidth. The PS4 was already very overkill for 1080p with 32. Perhaps, likely, ROPs were never the limit to scaling up to double that.

Also 32 being overkill for 1080p also fits in with PSVR needing around double that throughput, same with what the PS4 Pro usually ends up rendering at pre-checkerboarding.

10% boost from Out of Order Rasterization?

This is from a web post about OOO Rasterization not about Xbox One X but it's a feature of Xbox One X

And another one

Out-of-Order Rasterization On RadeonSI Will Bring Better Performance In Some Games

This is from a web post about OOO Rasterization not about Xbox One X but it's a feature of Xbox One X

And another one

Out-of-Order Rasterization On RadeonSI Will Bring Better Performance In Some Games

AMD developer Nicolai Hähnle has published a set of patches today for adding out-of-order rasterization support to the RadeonSI Gallium3D driver. Long story short, this can boost the Linux gaming performance of GCN 1.2+ graphics cards when enabled.

Nicolai posted this patch series introducing the out-of-order rasterization support. This is being used right now for Volcanic Islands (GCN 1.2) and Vega (GFX9) discrete graphics cards (though support might be added to other GCN hardware too). It can be disabled via the R600_DEBUG=nooutoforder switch.

This out-of-order rasterization support is also wired in for toggling it and some attributes via DRIRC for per-game Linux profiling in order to enable/disable depending upon where this helps Linux games or otherwise causes issues.

Nicolai has posted an explanation of out-of-order rasterization on his blog for those interested in a technical explanation, " Out-of-order rasterization can give a very minor boost on multi-shader engine VI+ GPUs (meaning dGPUs, basically) in many games by default. In most games, you should be able to set radeonsi_assume_no_z_fights=true and radeonsi_commutative_blend_add=true to get an additional very minor boost. Those options aren't enabled by default because they can lead to incorrect results."

Once the patches land in Mesa Git (or while still in patch form, if I magically find extra time before then), I intend to try out the support to see their impact on popular Linux games.

nope. my bad.

OOO Rasterization it's something that was enabled last year for AMD GPU's with GCN 1.2 & higher that's after PS4 & Xbox One was released so this would be a new feature on console & I'm guessing it's always enable for Xbox One X so that's an extra boost over Xbox One that add to the boost of more GPU Flops.

Locuza

Member

That's a performance boost for GPUs with multiple Shader Engines in cases where you don't have to synchronise between them.

The PS4 and Xbox One only had 2 SEs, so the cost of waiting naturaly occurs less often there, than on 4 SEs.

The performance boost in comparison to the old consoles will likely be small if present at all.

The PS4 and Xbox One only had 2 SEs, so the cost of waiting naturaly occurs less often there, than on 4 SEs.

The performance boost in comparison to the old consoles will likely be small if present at all.