Looks like they doubled the number of ACEs compared with XB1... it is still half of PS4/Pro.

1 HWS (Polaris) = 2 old ACEs (GCN 1.1)

PS4/Pro has 8 compute processors.

XB1 has 2 compute processors.

XB1X has 4 compute processors.

That is what AMD calls an ACE.

If you add the graphics (or command) processor, you will have: 8x2, 2x2 and 4x2 respectively.

It is double the XB1 but half the PS4/Pro.

AFAIK, PS4 Pro Polaris GPU also has 4 ACEs and 2 HWS.

A hypervisor is nothing unique or bad. The PS4 uses a hypervisor as well.

Source?

I remember PS3 having a Hypervisor (GameOS/OtherOS), but Sony has never said anything about the PS4.

ps: Having a hypervisor is probably a good thing for BC.

285 GB/s just for the GPU is really good.

285GB/s just for the GPU or for the APU as a whole?

Does that mean that the CPU has 41GB/s of RAM bandwidth?
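For what it's worth, the 41GB/s figure is what you get by subtracting 285GB/s from the total peak bandwidth. A minimal sketch of that arithmetic, assuming the publicly quoted 384-bit GDDR5 bus at 6.8 Gbps per pin (those two numbers are not from this thread, and nothing here says the remainder is actually reserved for the CPU):

```python
# Peak bandwidth of a GDDR5 bus: bus width in bytes times the per-pin data rate.
BUS_WIDTH_BITS = 384    # assumption: publicly quoted Scorpio spec, not from this thread
PIN_RATE_GBPS = 6.8     # assumption: publicly quoted per-pin data rate
GPU_SHARE_GBS = 285.0   # the figure quoted in the thread


def peak_bandwidth_gbs(bus_width_bits, pin_rate_gbps):
    """Return the theoretical peak bandwidth in GB/s."""
    return bus_width_bits / 8 * pin_rate_gbps


total = peak_bandwidth_gbs(BUS_WIDTH_BITS, PIN_RATE_GBPS)
remainder = total - GPU_SHARE_GBS
print(f"total {total:.1f} GB/s, left over after the GPU share: {remainder:.1f} GB/s")
```

This only shows where a number like 41GB/s could come from. How the memory controller actually arbitrates between CPU and GPU is a separate question.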

I also wonder if there's any performance bottleneck when both CPU and GPU access RAM simultaneously (kinda like in the PS4).

We still haven't seen the PS4 Pro die but I expect it to be the same way with 2 GPU arrays next to each other just like this.

The fact that both companies had to disable half the GPU for BC indicates that they have something in common due to AMD/Polaris hardware.

And yes, that die schematic looks like a "butterfly" GPU.

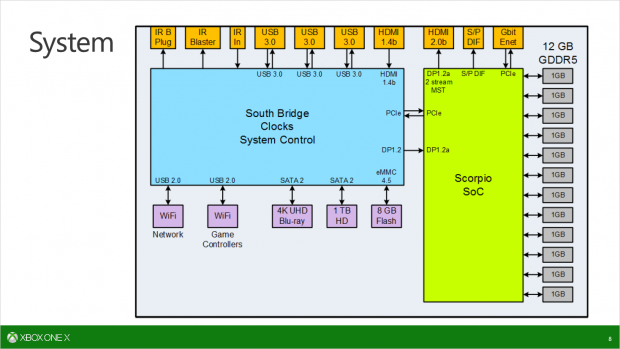

So Scorpio will only use a SATA2 interface for the HDD. BUT: SATA2 is completely fine - I expect most of the load-time savings to come from the CPU decompressing data.

Mechanical HDDs barely max out SATA1, let alone SATA2.
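To put rough numbers on that: SATA uses 8b/10b encoding, so every payload byte costs 10 bits on the wire. A quick sketch of the usable rate per SATA generation, compared against a drive speed. The 120 MB/s HDD figure is a hypothetical placeholder for a typical mechanical drive, not a measured Scorpio number:

```python
# SATA line rates in Gbit/s. With 8b/10b encoding, 10 line bits carry 1 payload byte.
LINE_RATE_GBPS = {"SATA1": 1.5, "SATA2": 3.0, "SATA3": 6.0}

HDD_SEQ_MBS = 120.0  # hypothetical sequential read rate of a mechanical HDD


def payload_mbs(line_rate_gbps):
    """Usable payload rate in MB/s after 8b/10b encoding overhead."""
    return line_rate_gbps * 1000 / 10


def effective_mbs(interface, drive_mbs=HDD_SEQ_MBS):
    """Transfer rate is limited by the slower of the interface and the drive."""
    return min(payload_mbs(LINE_RATE_GBPS[interface]), drive_mbs)


for name, rate in LINE_RATE_GBPS.items():
    print(f"{name}: link {payload_mbs(rate):.0f} MB/s, "
          f"with this HDD {effective_mbs(name):.0f} MB/s")
```

With a drive in that range, the result is the same on every SATA generation because the drive is the limit. Only something much faster (an SSD, say) would make the interface the bottleneck.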

(2) 3 Gbps vs. 5 Gbps (USB3) or 6 Gbps (SATA3). I think there is a difference. Why else would MS recommend using external HDDs for faster loading? But anyway, it is what it is. Nothing crucial, but nonetheless a missed opportunity imo.

Because external HDDs have denser platters and thus higher transfer rates. It's not a matter of interface (USB vs SATA).

Can you swap internal HDD in Scorpio?

If not, then SATA3 is a pretty useless feature that will only drive up costs. SATA3 will only give you an advantage if you can put an SSD in Scorpio.

This was a smart decision.

No, you can't, and SATA3 isn't that expensive either. Still useless for HDDs tho.