GTA V ran at 25 FPS avg. on 360/PS3, loved the hell out of it.

That is what I am talking about.

24 fps is totally playable for most game types.

I played through Crysis with an average of about 15 fps haha.

Loved every second.

Me too, bro. Whenever I get much over 24 fps, it's time to add more AA.

Isn't that an inaccurate comparison? If 1080p60 is your PC's limit, then wouldn't 4K drop the FPS to 15, not 30?
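For anyone who wants to check that arithmetic, here is a rough sketch. It assumes frame rate scales inversely with pixel count (a purely pixel-bound GPU), which is a simplification and won't hold exactly for real games. The function names are made up for illustration.

```python
# Rough estimate only: assumes FPS is inversely proportional to pixel count.
FHD = (1920, 1080)   # 1080p
UHD = (3840, 2160)   # 4K UHD


def pixels(width, height):
    """Total pixels in one frame."""
    return width * height


def estimated_fps(base_fps, base_res, target_res):
    """Scale a known frame rate to another resolution by pixel ratio."""
    return base_fps * pixels(*base_res) / pixels(*target_res)


# 4K has four times the pixels of 1080p, so 60 FPS becomes 15 under this model.
print(estimated_fps(60, FHD, UHD))  # 15.0
```

In practice the drop is often smaller than the pixel ratio suggests, since not all of the frame cost depends on resolution, and lowering settings changes the picture again.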

We should have a poll. Polls are all the rage.

Mods, can we get a poll?

The comparison is 1080p at max settings vs. 4K at lowered settings. HairWorks in particular is a huge drain on resources.

OP, have you considered something in between 1080p and 4K at 60fps?

Considering my TV's native resolution is 4K, I'd rather not pick something asymmetrical like 1440p. 1080p upscales perfectly to 4K. My desk monitor is 1440p, but I prefer playing games like Witcher on my couch.
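The "upscales perfectly" point comes down to the scale factor being a whole number. A quick sketch to check it (helper names are hypothetical, and whether a given TV's scaler actually does clean integer scaling is a separate question this doesn't answer):

```python
from fractions import Fraction

UHD = (3840, 2160)  # 4K UHD panel


def scale_factor(src, dst):
    """Exact per-axis scale factors from src resolution to dst resolution."""
    return Fraction(dst[0], src[0]), Fraction(dst[1], src[1])


def is_integer_upscale(src, dst):
    """True if both axes scale by the same whole number."""
    sx, sy = scale_factor(src, dst)
    return sx == sy and sx.denominator == 1


print(is_integer_upscale((1920, 1080), UHD))  # True  (exactly 2x per axis)
print(is_integer_upscale((2560, 1440), UHD))  # False (1.5x per axis)
```

At 2x, every 1080p pixel can map onto a 2x2 block of 4K pixels. At 1.5x, source pixels have to be blended across panel pixels.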

That is what I am talking about.

1080/60. I'll take a higher framerate over resolution any day. Gameplay > Visuals IMO.

1080@30FPS because I'm on a budget so I bought a 970.

If you said 4K 60fps or 1080 120/144fps, that would be a harder choice (most likely 4K/60), but as it stands now definitely 1080/60fps. Smooth frame-rate over great visuals.

1440p 60 FPS.

That's what I shoot for. (I have a 1440p screen and find it to be a tangible step up from 1080p without being overkill like 4K.)

If you can do 4K at 30 you can do that. Best of both worlds, in my opinion, especially if you're downsampling onto a 1080p screen.

60fps is a minimum requirement for gaming, the accepted standard for going on 40 years now. 4K resolution is not. Easy choice.

Keep in mind that temporal resolution is really important too; it's something too many gamers ignore. When you cut your framerate in half you are dramatically reducing resolution, just like going from 4K to 1080p does. You're not choosing resolution over framerate, as is popularly assumed; you're choosing one form of resolution over another. You want a balance, and for my money 1080p/60fps is going to get closer to that ideal for most situations than 4K/30fps will.

That starts to play a big part in driving games, or some of the faster-paced shooters. At 30fps you can kiss a lot of background detail goodbye.
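To put rough numbers on the spatial vs. temporal trade-off, here is a back-of-the-envelope sketch. It only counts pixels and frame intervals, which is my own simplification and not how perceived sharpness in motion is actually measured. The function names are hypothetical.

```python
def frame_time_ms(fps):
    """Time between frames in milliseconds."""
    return 1000.0 / fps


def pixels_per_second(width, height, fps):
    """Total pixels drawn per second at a given resolution and frame rate."""
    return width * height * fps


# Spatial samples per frame vs. temporal samples per second.
print(pixels_per_second(1920, 1080, 60))  # 124416000
print(pixels_per_second(3840, 2160, 30))  # 248832000
print(round(frame_time_ms(60), 2))        # 16.67
print(round(frame_time_ms(30), 2))        # 33.33
```

4K/30 pushes twice as many pixels per second, but each frame stays on screen twice as long, so anything moving jumps twice as far between updates. Which of those matters more depends on the game.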

What percentage of responses in this thread do you think have actually played a game in 4k/30 on a proper set up?

Care to explain? That's definitely not what my eyes see when running a game at 30 FPS in 4K.

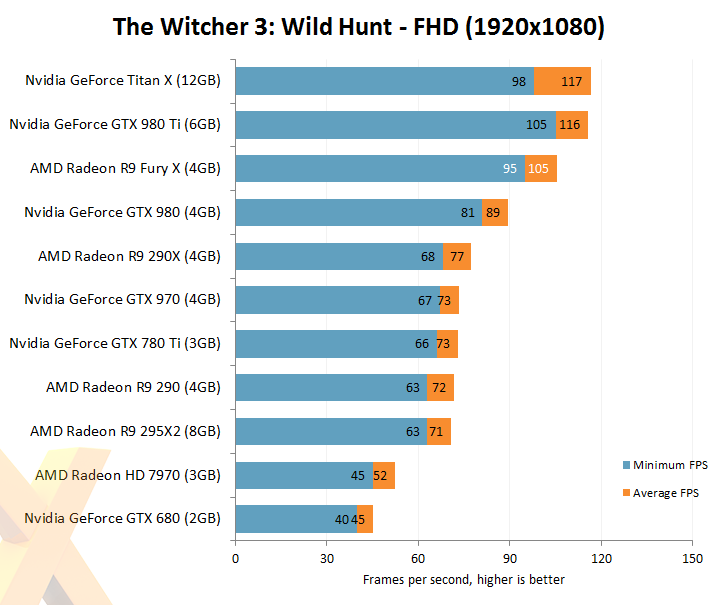
