How ignorant are these MS execs if they think they can run the same HD-DVD style fud-campaign at NeoGAF like they did at AVS forums? Pointless question since these were the same clowns behind HD-DVD.

The same FUD strategy will not fly here as it did there back then primarily due to the user base and the moderation of this forum compared to AVS who were totally unprepared for the FUD and astroturfing campaign that occurred with HD-DVD.

It's funny seeing Penello post here. The only people agreeing with him were already inclined to do so; others are finally seeing the thin coat of PR wash off his posts.

When someone of claimed authority makes a shit post this forum will eviscerate that post and sometimes that individual. Microsoft need an all-new social marketing strategy since the current FUD tactics have little to no chance of succeeding here. They hugely underestimate their audience. They have learned very little.

I wrote this in another thread:

zomgbbqftw said:

Fuck this guy. Seriously, threads on comments from Major Nelson, Albert Penello and such just need to be closed. It causes a whole load of shit and we're supposed to accept their comments because they work for MS and are in a position of knowledge. Well fuck all of them, either show the goods and documentation or shut the fuck up. Just like every other GAF member.

avaya said:

Penello is really handling GAF. They are going to realise very quickly that NeoGAF is not AVS Forums which they reduced to shit during the HD format wars from 2006-2008.

OMG this x1000! I was going to come in to basically post the same thing.

I was there too when I saw this playbook being run. The pointless Blu-ray vs. HD-DVD format war was in full swing, and there are eerie parallels to this console war (Blu-ray is a technically superior format, with 66% more capacity than HD-DVD. HD-DVD had some short term advantages in authoring software, minimum player spec, and cost of manufacture, but those are things that are again, only short term advantages and not necessarily something that a customer would notice, and all have been effectively nullified in the years since.)

MS sent Amir, a VP who I'm told convinced MS to go all-in on HD-DVD. So people were thrilled to see an executive from one side coming to talk to them, and understandably so, as he shared his insider's perspective. I don't have a problem with this, to be honest.

What I do have a problem with is when they come on and basically spread FUD (fear, uncertainty, doubt) and rumors about their competitor's product. I saw this happen over there - the one I remember most vividly was Amir telling everyone that (paraphrased) "dual layer BD-50 discs are science fiction. They can't be manufactured." And a LOT of people believed him, because here is an exec from Microsoft telling you so! Worse, there could be no response from the other side - it's all rumor and hearsay, and maybe no one is at liberty to disclose exactly how much BD-50 capacity they have publicly? IIRC a Sony Pictures guy started posting but he was on the content side so he couldn't really comment on the rumors. (But that guy played it straight - talked about new movies in the pipeline, etc. and from what I remember never got caught up talking crap about HD-DVD as what the hell would he know about HD-DVD anyway?)

Folks on the AVSForum bought Amir's BS right up until the point that the first BD-50 discs rolled out of the replication plants...and HD-DVD died and Amir took his retirement.

Don't get me wrong, I think it's cool to see executives coming in and posting. It's awesome when they share new information with us! But there should be some ground rules, I think. I don't want to see NeoGAF turn into format war-era AVSForums.

Want to talk about how amazing your product is? I don't have a problem with that, as long as you tell us who you are.

Want to clarify misconceptions about your product? Please, be my guest. I'm sure we would all love getting the straight scoop!

Want to spread rumors and innuendo about your competitor's product? IMHO this should be ban-worthy and we should not tolerate it.

To put a timeline on this obvious circus of deception.

Timeline:

Friday, June 28th: Leadbetter at Eurogamer published an article, exclusive to him alone, in which MS had found this amazing, never-before-known two-way ESRAM, which helps double the bandwidth.

http://www.eurogamer.net/articles/digitalfoundry-xbox-one-memory-better-in-production-hardware

Leadbetter describes his source as: "Well-placed development sources have told Digital Foundry"... well, now we know that this development source had to be MS themselves. So Leadbetter became a PR mouthpiece. As soon as the article hit GAF, questions sprang up about math that did not make any sense, as summarized by the post below from the thread:

Well,

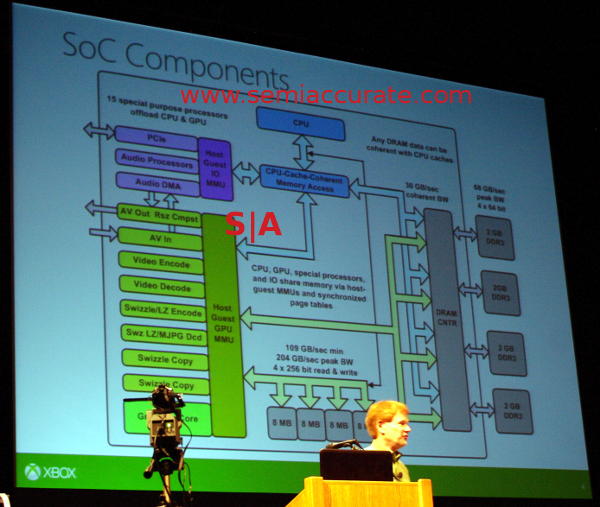

800 MHz x 128 bytes = 102 GB/s

According to the article the flux capacitor found by MS allows read and write at the same time (Intel, hire these guys) and so the theoretical max bandwidth should be 102 x 2 = 204

http://www.neogaf.com/forum/showthread.php?p=66898711#post66898711

Basic question: if it reads and writes at the same time, then the max should have been 204. Why 194? This was raised and debated, but there was no answer.
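For anyone who wants to check the arithmetic, here is a rough sketch of the on-paper calculation. It assumes a 128-byte-per-cycle ESRAM interface, which is the width needed for 800 MHz to come out at roughly 102 GB/s; the function name and the `directions` parameter are just made up for illustration and say nothing about how the hardware actually behaves.

```python
def peak_bandwidth_gb_s(clock_mhz: float, bytes_per_cycle: int, directions: int = 1) -> float:
    """Peak on-paper bandwidth in GB/s, using 1 GB = 10**9 bytes."""
    return clock_mhz * 1e6 * bytes_per_cycle * directions / 1e9


# Assumption: 128 bytes moved per clock cycle in each direction.
one_way = peak_bandwidth_gb_s(800, 128)
# Assumption: a full read and a full write on every cycle (the "doubled" claim).
two_way = peak_bandwidth_gb_s(800, 128, directions=2)

print(f"one-way peak: {one_way:.1f} GB/s")
print(f"two-way peak: {two_way:.1f} GB/s")
```

Under those assumptions a true simultaneous read/write should land at about 204, so any lower quoted peak implies the doubling is not available on every cycle.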

Then we had the upclock: MS increased the GPU clock. Albert then made a post here on GAF comparing it with the PS4:

We have more memory bandwidth. 176gb/sec is peak on paper for GDDR5. Our peak on paper is 272gb/sec. (68gb/sec DDR3 + 204gb/sec on ESRAM). ESRAM can do read/write cycles simultaneously so I see this number mis-quoted.

http://www.neogaf.com/forum/showthread.php?p=80951633&highlight=#post80951633

He then was asked:

by Freki

So why is the bidirectional bandwidth of your eSRAM 204GB/s although your one-directional bw is 109GB/s - shouldn't it be 218GB/s?

To which Albert responded:

Yes, it should be. And I was quickly corrected (both on the forum and from people at the office) for writing the wrong number.

So now he claims after talking to his "technical people" the number should be 218.

But here is the damn catch AGAIN...

The Hot Chips presentation, which was given after the GPU upclock and was actually presented by MS's own technical experts, contradicts that number: it states the number to be 204.
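One way to see why the numbers clash is to back out the clock each quoted figure implies. This is only a sketch: it assumes 128 bytes per cycle per direction (inferred from the 800 MHz and 102 GB/s figures quoted earlier, not from any MS document), and `implied_clock_mhz` is a made-up helper name.

```python
BYTES_PER_CYCLE = 128  # assumption: inferred from 800 MHz giving ~102 GB/s


def implied_clock_mhz(bandwidth_gb_s: float, directions: int) -> float:
    """Clock in MHz that would produce the quoted peak under the assumptions above."""
    return bandwidth_gb_s * 1e9 / (BYTES_PER_CYCLE * directions) / 1e6


# (label, quoted GB/s, number of directions counted in the figure)
quoted = [
    ("204 two-way", 204, 2),
    ("109 one-way", 109, 1),
    ("218 two-way", 218, 2),
]

for label, bandwidth, directions in quoted:
    print(f"{label}: implies about {implied_clock_mhz(bandwidth, directions):.1f} MHz")
```

Under these assumptions, 204 lines up with the old 800 MHz clock while 109 and 218 line up with a clock of roughly 850 MHz, so the 204 figure and the 109/218 figures cannot both describe the same clock.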

So seriously, this whole circus of misinformation has reached the point where they can't even keep up with their own deceptions and lies.

I really want to understand this double bandwidth mode. The way it was originally presented made it seem like a "trick" mode - something you could do, but there were severe limitations on using it as you're kind of abusing the hardware into doing something it wasn't originally designed to do. The fact that the bandwidth number does not double (I believe the slightly lower non-doubled number to be the correct number, it can't just be a typo that they have repeated over and over, including at HotChips) suggests that there are times where perhaps it is totally unsafe to write while reading or vice versa, and if you took into account those times, perhaps you could hit 204GB/s or whatever.

I wonder though if it is ever possible to even come close to 204GB/s. The original article says that they were able to get 133GB/sec doing alpha blending on FP16 render targets, but I wonder what limitations are imposed and how crazy the timing has to be to avoid memory corruption. Maybe you can do alpha blend but can only run a trivial shader? Could still be useful for certain things, but yeah, I really want to understand how it works...