LordOfChaos

Member

My favorite thing about Cell is that 15 years after its release and 6 years after its relevance ended, it can still create a flash of debate on command, lol.

Good to see this. So MS are working on this. Does this mean this is strictly their tech? So I’m hoping this is implemented in their next Xbox (and also hoping Sony have something similar for their PS5).

I wonder how demanding this is on the gpu.

I truly hope so because this looks like the perfect solution for BC.

It's Nvidia tech, but they used DirectML and demoed it using Forza. I expect even more tech like this to find its way into next-gen consoles and even the consoles on the market now.

It's a broadband engine, and it's even stamped as such on the PS3 chip. Cell isn't used solely as a CPU and can be found in electronics from vendors besides Sony.

I think that's what he meant.

Yeah, this is true as Sony was originally going to make a Cell powered GPU as well.

Cell was quite amazing in what it could accomplish. It makes me wonder if Sony's collaboration with AMD is meant to customise Navi with what they learned from Cell.

The results could be quite incredible if they went down that path.

Could you say more about the PS2's PS1 emulator? The PS2's CPU has always been compatible with the PS1.

What do you mean Cell isn’t a CPU? Of course it is. The below article highlights the differences found.

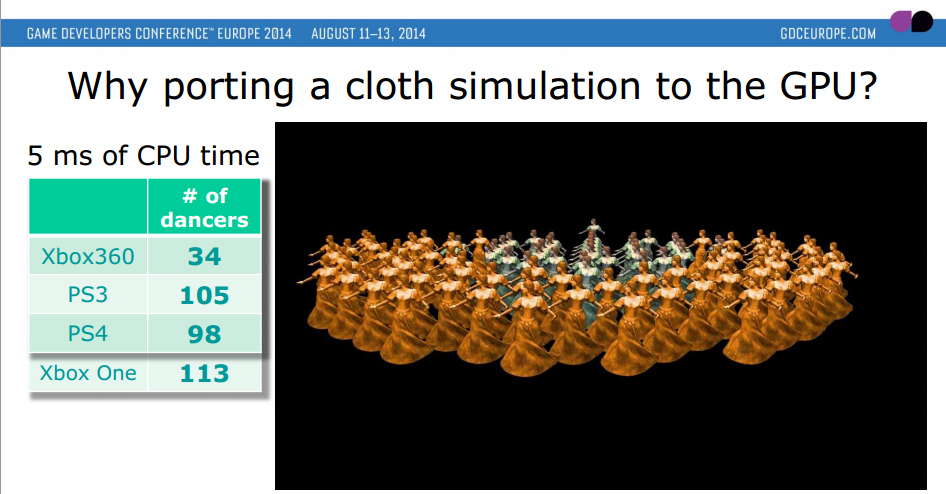

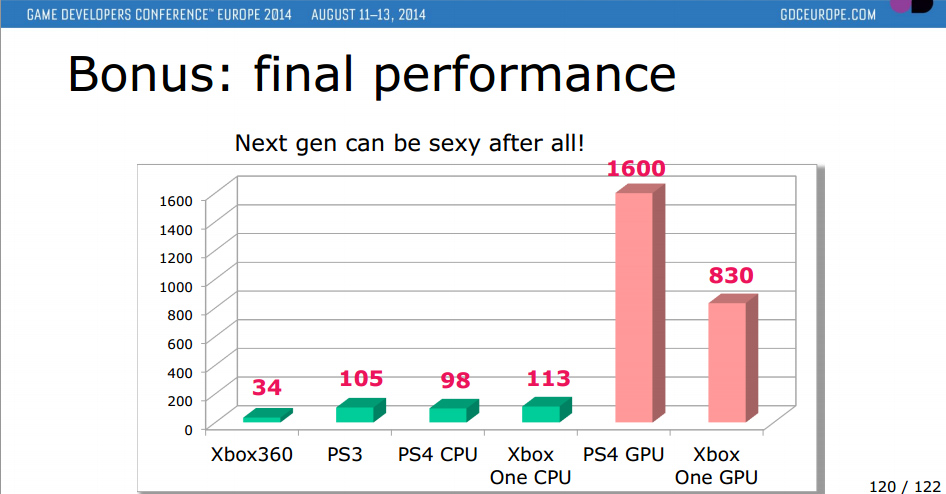

http://www.redgamingtech.com/ubisoft-gdc-presentation-of-ps4-x1-gpu-cpu-performance/

I'm sorry buddy, but you're badly misinformed (and you're not the only one).

As I said, Cell isn't even a CPU to begin with: http://vr-zone.com/articles/sony-cell-processor-really-tough-get-grips/63732.html

"The Cell processor was the precursor to today’s accelerated processing units, very much a heterogeneous architecture"

Comparing Jaguar (which is a CPU) to Cell SPEs is disingenuous to say the least... wanna compare it to PPE (which is actually a CPU) and tell us how it performs?

This is a fair comparison: http://media.redgamingtech.com/rgt-...s-xbox-one-vs-xbox-360-vs-ps3-cpu-and-gpu.jpg

Oh, I remember people saying back in 2005 that it was the first "true" 8-core CPU. Talk about confusing regular CPU cores with dedicated SIMD engines.

AMD must be really dumb that it took them over a decade later to deliver a true 8-core CPU design with Zen, right? Even the FX series was a quad-core design with shared FPU.

As I said, most people are misinformed (and it's not entirely their fault)...

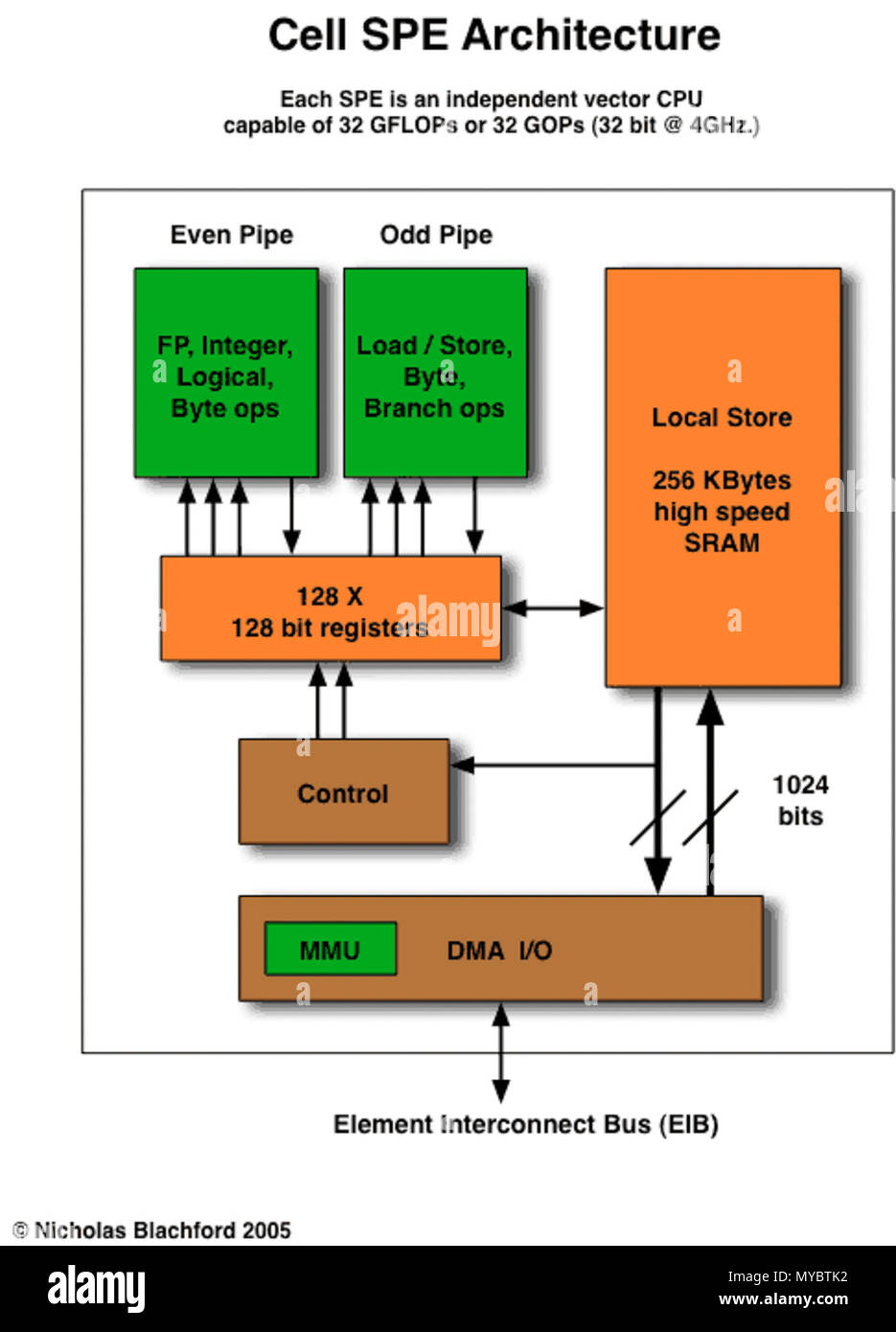

Do you mind breaking down the differences that make a CPU a CPU and the Cell something different?

Also, Jaguar has 8 cores, but you mentioned Zen being the first true 8-core?

A CPU excels at certain (serial) workloads. Cell SPEs are more analogous to GPU Compute Units. They're designed for parallel tasks like graphics, physics etc.

Cell as a CPU (PPE) is roughly 3 times slower than a modern x86 CPU at the same clock rate. You can see some benchmarks here: https://www.7-cpu.com/

Also this: http://crystaltips.typepad.com/wonderland/2005/03/burn_the_house_.html

"Gameplay code will get slower and harder to write on the next generation of consoles. Modern CPUs use out-of-order execution, which is there to make crappy code run fast. This was really good for the industry when it happened, although it annoyed many assembly language wizards in Sweden. Xenon and Cell are both in-order chips. What does this mean? It’s cheaper for them to do this. They can drop a lot of cores. One out-of-order core is about four times [did I catch that right? Alice] the size of an in-order core. What does this do to our code? It’s great for grinding on floating point, but for anything else it totally sucks. Rumours from people actually working on these chips – straight-line runs 1/3 to 1/10th the performance at the same clock speed. This sucks."

TL;DR: "Jaguar sux" meme is so 2013. Yes, it sucks compared to i5/i7 and even i3 when it comes to single-threaded performance (IPC/clocks), but it's a sizeable improvement compared to 7th gen PowerPC CPUs. As a CPU it was specifically made for multi-threading (most game engines took a while to adapt, Bethesda/Gamebryo is still shit). SIMD tasks are relegated to GPGPU/Compute shaders these days.

You will always be disappointed if you compare consoles to PCs... PCs will always be able to afford a higher thermal envelope. By the time consoles have 8 Zen cores @ 3.2 GHz, PCs will have up to 16 Zen cores @ 4-5 GHz.

Jaguar is a quad-core CPU for laptops (not tablets/smartphones, you won't find it there) that was specifically "customized" for consoles to have 8 cores (2 clusters of 4 cores) and a different memory controller (GDDR5). That's why they call it a "custom" CPU.

You could argue that Zen's design (2 CCX) resembles Jaguar's 2 clusters in a sense, but Zen is the first 8-core desktop CPU by AMD.

https://en.m.wikipedia.org/wiki/PlayStation_3

I think I've made my case.

That fair comparison still has Cell ahead of the PS4 CPU.

And aren't the SPEs and PPE all part of Cell?

Everything I've seen refers to Cell as a CPU with those core components.

The Cell truly is an 8-core CPU; one core just has a different architecture than the others, but they are all independent of each other. A SIMD unit is not independent: it is only the part of a core that executes some of the instructions from the program that core is running. An SPE is not part of the PPE; it has its own context and runs its own dedicated program independently. It's truly a core in its own right.

Wait, didn't Sony fans all cry about how backwards compatibility is a joke and nobody needs it...? Now they are making a big buzz for doing what everybody has been doing for years, and the last word has a boner, even though he himself said it was pointless...??

What the hell am I reading?

I'm afraid that's not what Sony fanboys meant back in 2005... if you get my drift.

Your definition of a CPU core is a bit "loose" to say the least. According to your logic, the Radeon GPU on a PS4 has 18 "cores" and thus it's more powerful than the Jaguar.

This is not a proper comparison either... CPUs and GPUs (or DSPs/SIMD engines if you will) are being tailored for entirely different workloads. Apples and oranges, really.

Cell was a weak CPU with powerful SIMD engines. It was a crap CPU and an excellent vector processor.

How are Jaguar AMD APUs any different?

Btw, if you want to desperately maximize your core count for PR purposes, you may as well add these ones as well (audio DSP, UVD, VCE, Zlib, ARM CPU in the southbridge):

https://en.wikipedia.org/wiki/PlayStation_4_technical_specifications#Hardware_modules

To those saying that BC doesn't matter: that USED to be true. That has drastically changed now that so many games are purchased digitally.

While I'm not ready to call it a deal breaker, I'd definitely say it matters far more going from PS4 to PS5 than it did for PS3 to PS4.

What I expect will happen is that games will become more like phone apps: developers will release one game that scales across console generations, and after a certain amount of time they can stop supporting an older model.

I just hate when the work of dozens (hundreds?) of people is attributed to one individual. I just hate it.

"What's a CPU core?"

AMD's Bulldozer architecture had two integer units and one FP unit per shared 'Module'. Was that one core or two? AMD said two, and traditionally they would be right as CPU core counts were based off INT units, but if you ran FP code it sure ran like one.

Well, a jury will have to decide that: https://www.theregister.co.uk/2019/01/22/judge_green_lights_amd_core_lawsuit/

That would make me so happy. I think the problem might be that developers then lose out on the re-buy of the game.

But I hope I'm wrong.

Judging by 360 BC games, this isn't a big issue. Most 360 games being sold these days are digital, since it's a bit difficult to find physical copies of old games (new or used).

It's not loose at all. An SPE, while designed for batch processing, is still able to run all kinds of programs and has access to all devices. The SPEs are seen as logical cores by the operating system. For example, you could run a web server on one SPE and, at the same time, run a game server on another SPE. This is not the case with a GPU, where the inner cores are invisible and not manageable.

Can it run general purpose code at acceptable performance? PPE sucked at it, so...

You can also use your feet to draw a painting, but I wouldn't recommend it.

There's always a good and a bad tool for every job out there...

Digital Foundry: In our last interview you compared Xbox 360's CPU to Nehalem (first-gen Core architecture from Intel) in terms of performance. So how does PlayStation 3 stack up? And from a PC perspective, has CPU performance really moved on so much since Nehalem?

Oles Shishkovstov: It's difficult to compare such different architectures. SPUs are crazy fast running even ordinary C++ code, but they stall heavily on DMAs if you don't try hard to hide that latency.

If you provided hinting and carefully managed the memory flow, but otherwise it could run standard code. Again the answer is always a fuzzy "if" with Cell, which is why it remains so interesting to this day.

https://www.eurogamer.net/articles/digitalfoundry-inside-metro-last-light

Trying hard to hide latency puts it more in the same category as GPUs IMHO. You don't have to try hard in a regular CPU design.
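For anyone wondering what "trying hard to hide that latency" tends to look like in practice, the usual trick is double buffering: start fetching the next chunk into a second local buffer before working on the current one. Below is a minimal sketch in plain C++, not actual SPU code. `dma_start` and `dma_wait` are hypothetical stand-ins for an asynchronous DMA engine (on the Cell SDK the equivalent would be along the lines of `mfc_get` plus a tag-status wait, which this sketch does not use), and the chunk size is arbitrary.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstring>
#include <vector>

constexpr std::size_t kChunk = 1024;  // ints per local buffer (arbitrary)

// Hypothetical stand-ins for an asynchronous DMA engine. In this mock the
// copy finishes inside dma_start, so dma_wait has nothing to do; on real
// hardware dma_start would return immediately and dma_wait would block
// until the transfer tagged `tag` had landed.
void dma_start(int* local, const int* remote, std::size_t n, int tag) {
    (void)tag;
    std::memcpy(local, remote, n * sizeof(int));
}
void dma_wait(int tag) { (void)tag; }

// Sums `main_memory` through two small local buffers: while one buffer is
// being processed, the transfer into the other one is already in flight.
long long sum_double_buffered(const std::vector<int>& main_memory) {
    int local[2][kChunk];
    const std::size_t total = main_memory.size();
    long long sum = 0;

    int cur = 0;
    std::size_t offset = 0;
    std::size_t cur_n = std::min(kChunk, total);
    if (cur_n > 0) dma_start(local[cur], main_memory.data(), cur_n, cur);

    while (cur_n > 0) {
        const std::size_t next_off = offset + cur_n;
        const std::size_t next_n =
            next_off < total ? std::min(kChunk, total - next_off) : 0;
        // Kick off the next fetch before touching the current data.
        if (next_n > 0)
            dma_start(local[cur ^ 1], main_memory.data() + next_off, next_n, cur ^ 1);

        dma_wait(cur);  // make sure the current buffer has arrived
        for (std::size_t i = 0; i < cur_n; ++i) sum += local[cur][i];

        offset = next_off;
        cur_n = next_n;
        cur ^= 1;
    }
    return sum;
}
```

On a regular cached CPU you would just write the inner loop and let the hardware prefetcher and out-of-order engine do this work for you, which is roughly the point being argued here.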

PPE had issues running branchy code, that even a Pentium 3 could run much better.

Yet a GPU won't just run your standard CPU-oriented C++ code well as long as you hide latency. They're addressed in completely different ways. An SPU would run unoptimized code poorly; a GPU wouldn't run it at all.

A CPU's

Code:

    for (int i = 0; i < N; i++)
        c[i] = a[i] + b[i];

becomes a GPU's

Code:

    __global__ void add(int *c, const int *a, const int *b, int N)
    {
        // Each thread starts at its global index and strides by the total thread count.
        int pos = threadIdx.x + blockIdx.x * blockDim.x;
        for (; pos < N; pos += blockDim.x * gridDim.x)
            c[pos] = a[pos] + b[pos];
    }

An SPU could run the first block, even if poorly, without optimizing it further. The debate isn't whether it was the best choice, but it's certainly still more of a GPU-ified CPU than a GPU, imo.
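To make the indexing in the GPU version concrete, here is a rough CPU-side emulation in plain C++ of how a grid of threads would divide up the array, assuming the usual grid-stride pattern (start at `threadIdx.x + blockIdx.x * blockDim.x`, step by `blockDim.x * gridDim.x`). The function name `add_grid_stride` and the `grid_dim`/`block_dim` parameters are made up for illustration, and nothing here runs on a GPU.

```cpp
#include <vector>

// CPU-side emulation of a grid-stride loop. grid_dim and block_dim stand in
// for gridDim.x and blockDim.x; the two outer loops play the role of the
// hardware launching every (block, thread) pair. Assumes a and b have the
// same length.
std::vector<int> add_grid_stride(const std::vector<int>& a,
                                 const std::vector<int>& b,
                                 int grid_dim, int block_dim) {
    const int n = static_cast<int>(a.size());
    std::vector<int> c(n, 0);
    const int stride = grid_dim * block_dim;  // total number of threads
    for (int block = 0; block < grid_dim; ++block) {
        for (int thread = 0; thread < block_dim; ++thread) {
            // Same index arithmetic each GPU thread would perform.
            for (int pos = thread + block * block_dim; pos < n; pos += stride)
                c[pos] = a[pos] + b[pos];
        }
    }
    return c;
}
```

Every element is written exactly once, by whichever emulated thread has the matching index modulo the stride. On a real GPU those threads run concurrently instead of one after another.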

What about modern (GP)GPUs?

Sophist said that "the inner cores are invisible and not manageable". I think that's only valid for the old GPU paradigm where you just used it for graphics and nothing else.

https://developer.nvidia.com/how-to-cuda-c-cpp

Is that the case with technologies like CUDA?

You can use the GPU for a lot of things, and it will process the data very fast... but there's a cost to loading the data to the VRAM in split architectures.
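The cost of getting data into VRAM on a split-memory system can be put as a back-of-the-envelope check: offloading only wins if transfer time plus GPU compute time beats just doing the work on the CPU. A tiny C++ sketch of that comparison follows. The function name and all parameters are hypothetical, and any numbers you feed it are your own estimates, not measurements from this thread.

```cpp
// Returns true if shipping the data across the bus and computing on the GPU
// is estimated to be faster than computing on the CPU directly.
// `bytes` should count everything that crosses the link (uploads and
// readbacks); `link_bytes_per_s` is the sustained bus bandwidth.
bool offload_pays_off(double bytes, double link_bytes_per_s,
                      double cpu_seconds, double gpu_compute_seconds) {
    const double transfer_seconds = bytes / link_bytes_per_s;
    return transfer_seconds + gpu_compute_seconds < cpu_seconds;
}
```

With purely illustrative figures, moving 1 GB over a 10 GB/s link costs 0.1 s before any work is done, so a job the CPU finishes in 0.05 s is not worth offloading no matter how fast the GPU is. On a unified-memory design such as a console APU, the transfer term mostly drops out.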

The PPE was the instructor for the SPEs though; they were not separate in function.

Think of an orchestra requiring a conductor to play music: without the conductor, everything falls apart.

IKR? Jaguar and GCN CUs have a similar relationship... they're meant to assist each other, just in the opposite way compared to the PS3.

This patent thing has snowballed thanks to some of the dumbest gaming news reporting ever. At least there are some interesting conversations around this.

They still are for GPGPU with Apple, AMD, NVIDIA, Intel, ARM, Samsung (eventually), etc. You don't want to be messing with that anyway. At a lower level, we don't want (or trust) any user down there. Many of these inner workings are hardwired and react/instruct/sync/launch many times faster than any user can manage. (Except maybe Carmack.) This was also a welcome trade-off when going from pixel + vertex shaders to unified shaders; the user is removed from the process. >8]

Edit: When I say "user" I mean programmers. 8P

I'm not sure if you're talking about consoles specifically or other platforms like PC/mobile. Cell and semi-custom AMD APUs are best utilized on a closed platform. Open platforms tend to favor more generalized programming.

But people buy new systems to play new games, right? Nobody cares about backwards compatibility, I was told.

An SPE's local store is 256 KB, and it has to hold both the data and the program instructions. With a huge program, you would have to do a lot of DMA operations for the instructions alone.
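To put a rough number on that, here is a small C++ sketch of the arithmetic, assuming a simple overlay scheme where whatever local store is left after data buffers and always-resident code is used as a window for swapping code segments in. `overlay_segments` and its parameters are hypothetical names for illustration. Only the 256 KB figure comes from the hardware.

```cpp
#include <cstddef>

constexpr std::size_t kLocalStoreBytes = 256 * 1024;  // SPE local store

// Rough count of how many code segments (overlays) a program would have to
// be split into if its text must share the local store with `data_bytes` of
// buffers and `resident_bytes` of always-present code and stack.
// Returns 0 when no room is left for code at all.
std::size_t overlay_segments(std::size_t code_bytes,
                             std::size_t data_bytes,
                             std::size_t resident_bytes) {
    if (data_bytes + resident_bytes >= kLocalStoreBytes) return 0;
    const std::size_t window = kLocalStoreBytes - data_bytes - resident_bytes;
    return (code_bytes + window - 1) / window;  // ceiling division
}
```

For example, 1 MiB of code with 128 KiB of data buffers and 32 KiB of resident code leaves a 96 KiB window, so the program would need 11 segments. Every switch between segments then costs at least one DMA transfer before any useful work happens.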

To give a concrete example, if you could run Microsoft Windows on a Cell, you would see the SPE cores in the task manager.

Is that true, though? You could run Linux on the PS3 but did a "top" show the SPUs? I somehow doubt that.

Edit:

This is interesting:

On boot, you see two big Tuxes and 7 small ones but a "cat /proc/cpuinfo" only shows the PPC cores.

The small penguins are likely due to a patch for the kernel to see the extra cores: Linux is generally built for homogeneous cores.

Of course that's a patch, but potentially not exposed to the scheduler.
