


Media Molecule Announces New IP, Dreams

- Thread starter zakislam


HomerSimpson-Man

Member

The visuals in this are awe inspiring.

It's nailing the stop-motion/claymation vibe so well; its graphics have a more 'solid' look than any game I've ever seen.


This definitely was not aimed for my gaming tastes.

If I wanted to be a game developer...I'd have got a job doing so. I don't want to create my levels, rules, objects, etc. About all I want to make is my character and customize their appearance throughout an experience.

Games like this are forever lost on me.

This is aimed toward the Minecraft audience, so yeah, it's not aimed at you.

It's aimed at me though.

jhmtehgamr20xx

Banned

Best Of Show™, hands down. It's even triggered some of my own creative juices and it takes a LOT for a game to do that for me nowadays.

This is a system seller.

The industry needs to expand to more ideas, I'm glad to see a new game tackling the subject of dreams in a creative, fun fashion.


It looks neat, and the tech is impressive, no doubt. Nonetheless, I feel like this won't sell.

Based on what? It's not like it needs the COD/brofisting audience in order to sell. Truth is, we NEED more games like this because most of the other new/inventive ideas are coming from the indie scene, but they don't have the budget this game seems to have.


James Sawyer Ford

Member

Anton Mikhailov now works at Media Molecule? Hmm, I wonder how that came about. I thought he was deep into Morpheus and new Sony R&D stuff; this could well mean they will try to implement VR in some fashion with Dreams.

link to him working at MM?

pixelation

Member

Whatever it was, it had some of the most beautiful, mind-blowing visuals I've ever seen.

Agreed

Are you sure this wasn't really aimed at your gaming tastes? OK, you don't want to be a game developer, but you need game developers to make the games you like to play, right? A piece of software like this is a means of further democratizing the game development process. YOU still don't have to be a game developer, but lots of other people can crowdsource basic game development with software like this.

This definitely was not aimed for my gaming tastes.

If I wanted to be a game developer...I'd have got a job doing so. I don't want to create my levels, rules, objects, etc.

It's about the same as saying Unreal Engine was not aimed at your gaming tastes.

About all I want to make is my character and customize their appearance throughout an experience.

Which is ironic, considering that this is something Dreams seems particularly well suited to letting you do, above and beyond anything else out there.

petethepanda

Member

I've long accepted that I'm most likely not the type to actually create content for games like this, but man am I on board to play what other creative folks put together with this. It's like the Stephen Fry LBP intros directly morphed into a game.

blame space

Banned

I had an existential crisis while this guy was trying to explain what this was

HassanJamal

Member

The earlier Dreams tech demo. Lots of hinting there. Probably a big reason why PS Move wasn't in the latest teaser.

In case anyone missed the bit of E3 3D model sculpting, see here. I know it's a bit early to say but damn, I'm hyped for this. Looking forward to more at the Paris show. There's so much potential in this game.

chrishackwood

Member

This is basically LittleBigPlanet in full 3D, but with more customisation than ever. As someone who loves to create things in 3D, I'm in heaven 😀

Discotheque

Banned

the last image gofreak posted (the green fog) is like my dream game visually.

so beautiful. i really hope people make some very freaky stuff with this game.


Can someone post a graphics 101 on how these graphics are created.

Preferably in Food Babe-level language...

Anyone?

Adam_Roman

Member

I'm really excited for this. Even if it's $60, I'll be getting it day one.

I'm incredibly interested to see more in-depth stuff with creation and sculpting. It would be amazing if they had a way to upload models made in Blender or Maya so you aren't limited to just the sculpt tool and whatever prefabs they include.


This seems really daunting. He described it in a way that made it seem as simple as thinking something up, but was so vague about how it all works. It's never that simple, either. You can't just snap your fingers and have game controls and logic rules and all that stuff just work. Not unless it's largely predesigned.

I thought I'd totally dig this, as LittleBigPlanet is one of my absolute favorite game series, but now I'm just thankful for LBP's structure and relative simplicity.

Good luck Mm. Hopefully you can show something later that makes a little more sense and gets my interest.


nelsonroyale

Member

Anyone?

Gofreak gave some insight earlier in this thread...but I am also still intrigued. What is the upshot of this? If those were the type of visuals you can recreate in a game in Dreams then...these are the best-looking visuals I have seen. They straight up looked like a super artistic animated movie.

I hope they do not make the same mistakes they did on LBP, limiting some creation stuff in order to have better control on DLC sales.

From the looks of it, this will enable players to create any type of game, and it's obviously not just a movie creation suite.

Edit: My Avatar is actually a screenshot from LBP2. So yeah, I'm pretty excited.


nelsonroyale

Member

I hope they do not make the same mistakes they did on LBP, limiting some creation stuff in order to have better control on DLC sales.

From the looks of it, this will enable players to create any type of games, and obviously is not just a movie creation suite.

I think this is what is giving people pause...they hinted at this but were cryptic. The game sounds insanely ambitious, which I think is why people have a hint of what they are trying to do but are sceptical based on what they showed. They didn't just show some mini-game type stuff. The sequences they showed of stuff they built looked flat-out incredible.

Anyone?

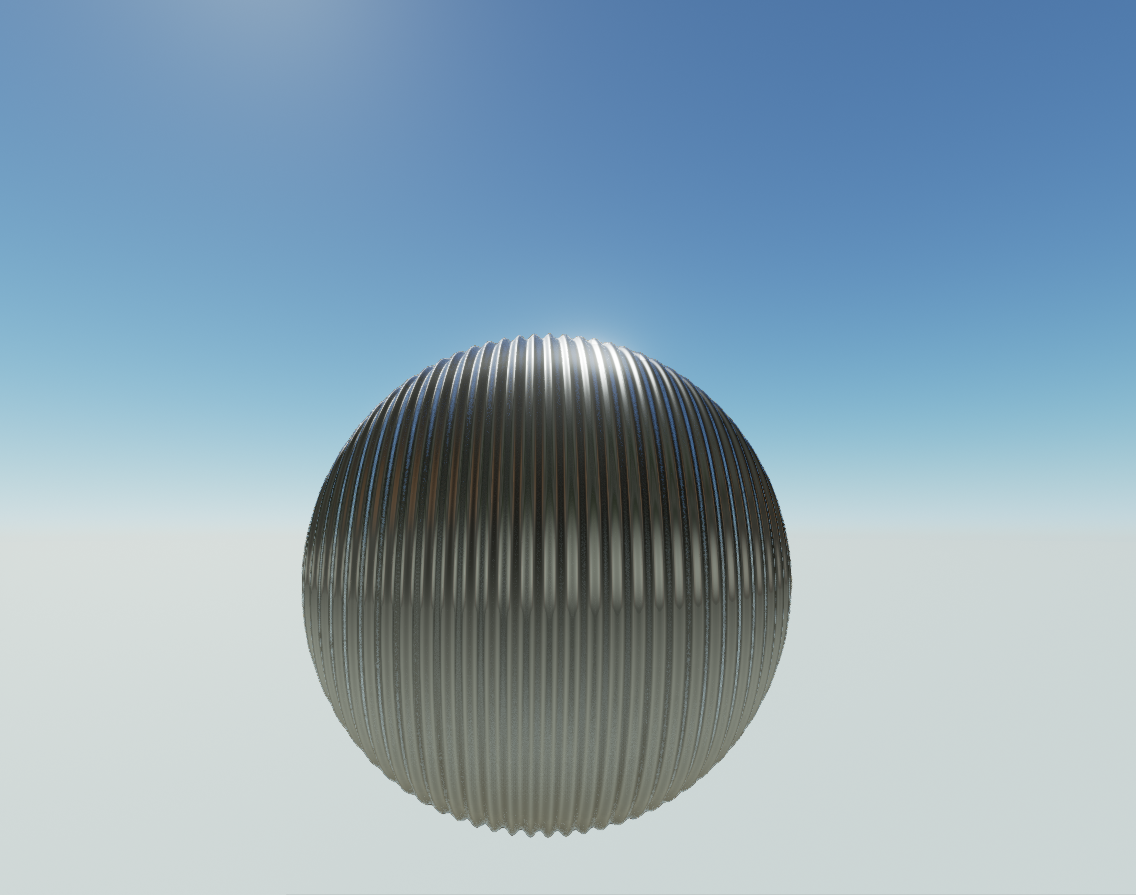

In graphics, you're going to have some kind of geometry representation. The typical one is a mesh of triangles approximating a shape. So typical that it's universal in games.

But you can also use mathematical equations that describe a shape - let's say a sphere.

One such function takes a point in 3D space and returns the shortest distance from that point to the surface of the shape.
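A minimal sketch of that kind of function for a sphere, in Python purely for illustration (the name `sd_sphere` is hypothetical). It follows the usual signed distance function (SDF) convention, which is an assumption beyond the post's wording: positive outside, zero on the surface, negative inside.

```python
import math


def sd_sphere(p, center, radius):
    """Signed distance from point p to the surface of a sphere.

    Positive outside, zero on the surface, negative inside.
    """
    return math.dist(p, center) - radius


print(sd_sphere((3.0, 0.0, 0.0), (0.0, 0.0, 0.0), 1.0))  # 2.0 (outside)
print(sd_sphere((0.0, 0.0, 0.0), (0.0, 0.0, 0.0), 1.0))  # -1.0 (inside)
```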

You can use that function in rendering - in a ray tracer, for example, to figure out the point on the sphere that a camera's pixel should be rendering.

So instead of putting a bunch of polygons representing a sphere down a rendering pipeline, you can trace a ray per pixel and evaluate precisely what point on the sphere that pixel should be looking at. You've probably heard of 'per pixel' effects in other contexts - this would be like 'per pixel geometry'.
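One common way to "trace a ray per pixel" against a distance function is sphere tracing (a form of ray marching): step along the ray by the distance value each time, since nothing can be closer than that. This is a hypothetical Python sketch with an assumed camera setup and ASCII characters standing in for pixels, not the method behind the renders in this thread.

```python
import math


def sd_sphere(p, center=(0.0, 0.0, 0.0), radius=1.0):
    # Signed distance to a sphere (negative inside).
    return math.dist(p, center) - radius


def normalize(v):
    length = math.hypot(*v)
    return tuple(c / length for c in v)


def sphere_trace(origin, direction, sdf, max_steps=128, max_dist=100.0, eps=1e-4):
    """March along a ray, stepping forward by the distance value each time.

    The distance value is a safe step size: no surface is closer than that,
    so the march can't jump through the surface. Returns the hit distance t,
    or None if the ray misses. `direction` must be unit length.
    """
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * d for o, d in zip(origin, direction))
        dist = sdf(p)
        if dist < eps:
            return t
        t += dist
        if t > max_dist:
            break
    return None


def render_ascii(sdf, width=32, height=16):
    """One ray per character 'pixel'; '#' where the ray hits the surface."""
    eye = (0.0, 0.0, -3.0)  # assumed camera position, looking down +z
    rows = []
    for j in range(height):
        row = []
        for i in range(width):
            # Map the pixel centre to [-1, 1] screen coordinates (y up).
            x = (i + 0.5) / width * 2.0 - 1.0
            y = 1.0 - (j + 0.5) / height * 2.0
            hit = sphere_trace(eye, normalize((x, y, 1.5)), sdf)
            row.append("#" if hit is not None else ".")
        rows.append("".join(row))
    return "\n".join(rows)


print(render_ascii(sd_sphere))
```

Each pixel gets its own exact intersection, which is the 'per pixel geometry' idea: there are no triangles whose edges could show up.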

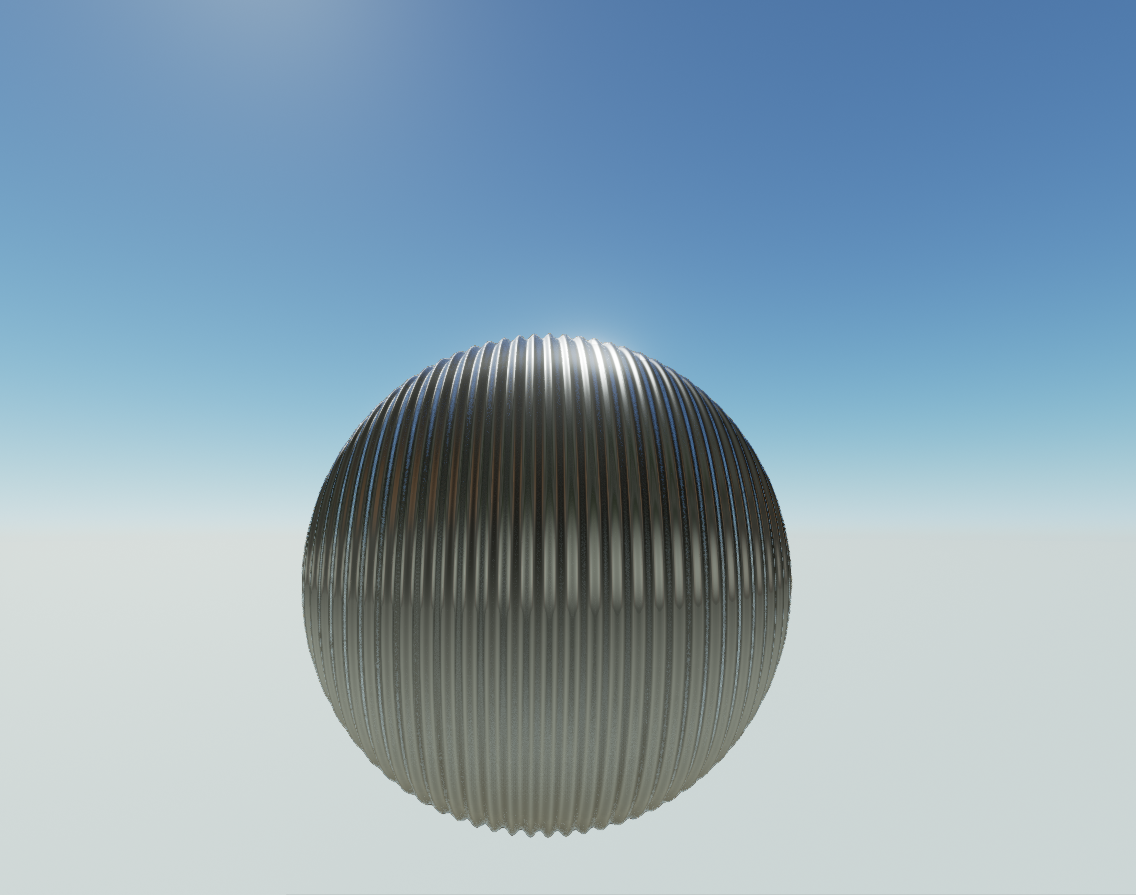

You can do interesting things with these functions. You can very simply blend shapes together with a mathematical operation between two shapes' functions. You can subtract one shape from the other, with another operation. You can deform shapes in lots of interesting ways. Add noise, twist them. For example, here's a shape with a little deformation on the surface described by a function using 'sin':

This render was produced by tracing the function - at every pixel the function was evaluated multiple times to figure out the point the pixel was looking at. Notice how smooth it is - you're not seeing any polygonal edges or the like here. It's a very precise way of rendering geometry.
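The blending, subtraction and 'sin' deformation described above can be sketched as small operations on distance values. The operator names are hypothetical; union, subtraction and intersection as min/max, and the polynomial 'smooth minimum' for blending, are common conventions in ray marching circles, not necessarily what was used for the render above or by Media Molecule. After these operations the result is generally only an estimate of the true distance, so marchers often take somewhat shorter steps on such fields.

```python
import math


def sd_sphere(p, center, radius):
    return math.dist(p, center) - radius


def op_union(d1, d2):
    # Whichever surface is closer wins.
    return min(d1, d2)


def op_subtract(d1, d2):
    # Shape 1 with shape 2 carved out of it.
    return max(d1, -d2)


def op_intersect(d1, d2):
    # Only the region inside both shapes.
    return max(d1, d2)


def op_smooth_union(d1, d2, k):
    # Polynomial smooth minimum: blends the two surfaces where they are within ~k.
    h = max(0.0, min(1.0, 0.5 + 0.5 * (d2 - d1) / k))
    return d2 + (d1 - d2) * h - k * h * (1.0 - h)


def displace_sin(d, p, amp=0.05, freq=10.0):
    # Ripple the surface by adding a sine-based offset to the distance.
    return d + amp * math.sin(freq * p[0]) * math.sin(freq * p[1]) * math.sin(freq * p[2])


def scene(p):
    a = sd_sphere(p, (0.0, 0.0, 0.0), 1.0)
    b = sd_sphere(p, (0.8, 0.0, 0.0), 0.6)
    blob = op_smooth_union(a, b, 0.3)  # two spheres melted together
    return displace_sin(blob, p)       # with a rippled surface
```

Any function shaped like `scene` can be handed straight to a sphere tracer; the whole 'model' is just this composition of formulas.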

Now this isn't exactly what Media Molecule is doing. And here's where I diverge into speculation based on tweets and stuff.

(I think) MM is taking mathematical functions mentioned above and evaluating them at points in a 3D texture. So turning them into something they can look up at discrete points - which is a lot faster than calculating the functions from scratch. So, when you're sculpting in the game, it'll be baking the results of these geometry defining mathematical functions and operations into a 3D texture. So that's turning the distance functions into a more explicit representation which you might see referred to as a distance field.
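A minimal sketch of that 'baking' idea, under the post's own speculation that the field lives in a 3D grid: evaluate the distance function once per grid point, then reconstruct values in between with trilinear interpolation (roughly what hardware filtering does for a 3D texture on a GPU). Nested Python lists stand in for the 3D texture, and the function names, grid size and bounds are illustrative assumptions, not Media Molecule's.

```python
import math


def sd_sphere(p, center=(0.0, 0.0, 0.0), radius=1.0):
    return math.dist(p, center) - radius


def bake_sdf(sdf, n, lo, hi):
    """Evaluate sdf once at every point of an n x n x n grid spanning [lo, hi]^3."""
    step = (hi - lo) / (n - 1)
    grid = [[[sdf((lo + i * step, lo + j * step, lo + k * step))
              for k in range(n)]
             for j in range(n)]
            for i in range(n)]
    return grid, step


def lerp(a, b, t):
    return a + (b - a) * t


def sample_trilinear(grid, lo, step, p):
    """Cheap lookup: blend the 8 baked samples surrounding point p."""
    n = len(grid)
    idx, frac = [], []
    for c in p:
        g = (c - lo) / step
        g = min(max(g, 0.0), n - 1 - 1e-9)  # clamp so that i0 + 1 stays inside the grid
        i0 = int(math.floor(g))
        idx.append(i0)
        frac.append(g - i0)
    (i, j, k), (fx, fy, fz) = idx, frac
    c00 = lerp(grid[i][j][k], grid[i + 1][j][k], fx)
    c10 = lerp(grid[i][j + 1][k], grid[i + 1][j + 1][k], fx)
    c01 = lerp(grid[i][j][k + 1], grid[i + 1][j][k + 1], fx)
    c11 = lerp(grid[i][j + 1][k + 1], grid[i + 1][j + 1][k + 1], fx)
    return lerp(lerp(c00, c10, fy), lerp(c01, c11, fy), fz)


grid, step = bake_sdf(sd_sphere, n=33, lo=-2.0, hi=2.0)
p = (1.03, 0.2, -0.4)
print(sample_trilinear(grid, -2.0, step, p), sd_sphere(p))  # baked lookup vs exact
```

The trade-off is the one described above: baking costs n³ evaluations up front (and memory), but every later lookup is a fixed handful of reads no matter how many sculpting operations went into the shape.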

To render the object represented by that texture, they have a couple of options. They could trace a ray and look up this 3D texture as necessary to figure out the point on the surface to be shaded - which is what I thought they were doing previously. But a more recent tweet suggests they are 'splatting' the distance field to the screen, which is sort of a reverse way of doing things. They'll be explaining this at SIGGRAPH.
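For contrast with ray tracing, here is a very rough sketch of the general splatting idea: walk the grid samples that sit near the surface and project each one forward onto the screen, keeping the nearest one per pixel with a depth buffer. This is a generic one-pixel point splat with hypothetical names and an assumed pinhole camera; it is not Media Molecule's technique, which the post says was still to be detailed at SIGGRAPH. A real splatter would draw each sample as a small disc or blob so neighbouring splats cover the holes between them.

```python
import math


def sd_sphere(p):
    # Unit sphere at the origin.
    return math.dist(p, (0.0, 0.0, 0.0)) - 1.0


def splat(sdf, n=48, lo=-1.5, hi=1.5, width=32, height=32, cam_z=-4.0, focal=1.5):
    """Forward-project near-surface grid samples into a depth buffer.

    Ray tracing asks "what does this pixel see?"; splatting asks
    "which pixel does this bit of surface land on?".
    """
    step = (hi - lo) / (n - 1)
    shell = step * math.sqrt(3.0) / 2.0  # keep samples within half a voxel diagonal of the surface
    depth = [[math.inf] * width for _ in range(height)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                p = (lo + i * step, lo + j * step, lo + k * step)
                if abs(sdf(p)) > shell:
                    continue
                z = p[2] - cam_z  # distance in front of a camera looking down +z
                if z <= 0.0:
                    continue
                # Pinhole projection to [-1, 1] screen space, then to pixel indices.
                sx, sy = focal * p[0] / z, focal * p[1] / z
                px = math.floor((sx + 1.0) * 0.5 * width)
                py = math.floor((1.0 - sy) * 0.5 * height)
                if 0 <= px < width and 0 <= py < height and z < depth[py][px]:
                    depth[py][px] = z  # depth test: the nearest splat wins
    return depth


depth = splat(sd_sphere)
print(round(depth[16][16], 2))  # depth of the sphere's front near the screen centre
```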

The advantage is the ease of deforming geometry with relatively simple operations. Doing robust deformation and boolean (addition/subtraction/intersection etc.) operations with polygonal meshes is really hard. Knowledge of 'the shortest distance from a given point to that surface' can also be applied in lots of other areas - ambient occlusion, shadowing, lighting, physics (collision detection). It's a handy representation to have for doing things that are trickier with polygons. UE4 has recently added the option to represent geometry with distance fields for high quality shadowing.
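As one example of reusing the distance for physics, here is a sketch of a sphere-vs-scene collision check: the distance at the collider's centre says how far it has sunk into the geometry, and the gradient of the field (estimated with central differences) gives the direction to push it back out. The scene and function names are hypothetical, and this is a textbook technique, not necessarily how Media Molecule's physics works.

```python
import math


def scene(p):
    ground = p[1]  # plane y = 0: the distance is simply the height
    ball = math.dist(p, (0.0, 1.0, 0.0)) - 1.0  # unit sphere resting on the ground
    return min(ground, ball)


def normal(sdf, p, h=1e-4):
    """Surface direction = normalized gradient of the distance field (central differences)."""
    grad = []
    for axis in range(3):
        ahead, behind = list(p), list(p)
        ahead[axis] += h
        behind[axis] -= h
        grad.append((sdf(tuple(ahead)) - sdf(tuple(behind))) / (2.0 * h))
    length = math.hypot(*grad)
    return tuple(g / length for g in grad)


def resolve_sphere(sdf, center, radius):
    """Push a sphere collider out along the normal if it overlaps the geometry."""
    penetration = radius - sdf(center)
    if penetration <= 0.0:
        return center  # not touching anything
    n = normal(sdf, center)
    return tuple(c + ni * penetration for c, ni in zip(center, n))


print(resolve_sphere(scene, (3.0, 0.2, 0.0), 0.5))  # roughly (3.0, 0.5, 0.0)
```

The same `normal` helper is what a renderer would use for lighting, and stepping along a ray while watching how small the distance gets is the usual basis for distance-field soft shadows and ambient occlusion.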

The disadvantage of this (and of using it from top to toe in your pipeline!) is that it's tricky to make it fast, and obviously GPUs and content pipelines etc. are so based around the idea of triangle rasterisation. But GPUs have gotten a lot more flexible lately, so maybe as time wears on we'll see even more non-traditional 'software rendering' on the GPU.

Shin-Ra

Junior Member

I'm incredibly interested to see more in-depth stuff with creation and sculpting. It would be amazing if they had a way to upload models made in Blender or Maya so you aren't limited to just the sculpt tool and whatever prefabs they include.

That might tilt the playing field and demoralise players just using the in-game tools.

There might be tools in the game for producing more 'perfect' shapes, but models created with pro tools would probably still stick out.

Alex Evans just tweeted about this thread.

I'm optimistic. One of the best moments in my gaming life was the moment I played the LBP Beta. Like this time, it was overwhelming and magical.

John Bender

Banned

Hi Alex

Can't WAIT to get my hands on "Dreams".

PGW is coming!

Sidewinder

Member

As long as I can actually play these dreams and not just watch short movies I'll be in, I really loved the art style.

link to him working at MM?

http://www.mediamolecule.com/about/team/antonm

I'm incredibly interested to see more in-depth stuff with creation and sculpting. It would be amazing if they had a way to upload models made in Blender or Maya so you aren't limited to just the sculpt tool and whatever prefabs they include.

Hm, since they are building an engine that says FU to polygons, I doubt that would ever be possible.

So have they touched on the actual gameplay yet or is it just a case of walking through an art gallery of dreams?

Haven’t touched it, but we know they are building this to have gameplay, just didn’t have the chance to show it properly.

Allovermahboday.

The bubble at the top middle at the beginning of the cycle (castle and bouncy spring) has Pixar-like visual quality. Fascinating stuff.

This definitely was not aimed for my gaming tastes.

If I wanted to be a game developer...I'd have got a job doing so. I don't want to create my levels, rules, objects, etc. About all I want to make is my character and customize their appearance throughout an experience.

Games like this are forever lost on me.

There is also the play aspect, and the tinkering side of creation. You don’t have to create worlds; you can just remix and poke around stuff other people already created. It is clear they are aiming for a collaborative aspect. While the play part will most likely also be strong, it’s hard to imagine they would not stress collaboration as their priority.

I guess this game isn't for me. Didn't like it when they revealed the trailer.

Just curious: why do you say that, when we still don’t actually quite know what the game is about?


Thanks for the explanations. No wonder this game has been in the oven for so long; they are doing some groundbreaking stuff here.

From what we saw in the demo, it seems like quite large-scale worlds are doable with this game. It's especially noticeable in the intro of that green sf world when Alex is walking onto the stage.

travisbickle

Member

So have they touched on the actual gameplay yet or is it just a case of walking through an art gallery of dreams?

Looks like Sean Murray gets to pass the baton to Alex Evans, good luck Alex.

Callibretto

Member

So have they touched on the actual gameplay yet or is it just a case of walking through an art gallery of dreams?

We saw a bear beating up a bunch of zombies and a robot racing on a flying motorcycle. I'm sure they wouldn't have chosen those scenarios if they couldn't at least make them playable, imo.

Shin-Ra

Junior Member

I'm optimistic. One of the best moments in my gaming life was the moment I played the LBP Beta. Like this time, it was overwhelming and magical.

Ooh! {assumptions} Who better to help implement the motion controls?

AND VR!

Media Molecule doesn't make movies or walking simulators. They make games. Imagine if Nintendo announced this. It's like that. Movie making was added on to Little Big Planet 2 but was a feature not a focus. The focus of Little Big Planet was the gameplay and the level creation. This is a game and it's obvious that you can play as everything shown in the trailer. I understand a bit of skepticism but people should use their gaming knowledge when watching trailers.

Personally, I'm terrible at making stuff in games. The nice thing is, some people are amazing at it (especially Media Molecule). THAT is what you should look forward to. Media Molecule created content and Mm Picks (the best content chosen by Media Molecule in Little Big Planet). I am interested in this "seamless transitioning between dreams". I hope it gives a sense of open world type freedom. I think it will be a popular game on Twitch and YouTube.


Shin-Ra

Junior Member

This is worth a re-watch.

https://youtu.be/MtY12ziHuII?t=3m10s especially from 3:10 onward.

Not just for the 3D modelling but the "futuristic (3D) interface."


VR would/could be perfect for this...the whole concept of sharing digital 'dreams' crossed with the idea of 'Morpheus' seems all too obvious. It could be a media molecule metaverse for VR. BUT...I have extreme doubts they could get this working at a framerate required for VR. I would guess this is targeting 1080p/30fps.

If this takes off, though, it's easy to imagine a VR version in the future. For now I think the fidelity they're targeting might be too much for PS4 VR though :/

Hmm, now with Anton on board I am not certain this won't be a VR-compatible game. My bet is that the game does not run at 60fps, which limits its VR potential, but that it will allow VR experiences. For example, you could have a VR sculpting room.

I'm a big "fan" of Media Molecule (LBP and Tearaway) but this presentation was a "disappointment", in the sense that they didn't manage to explain the concept of their "game" at all. The only thing i got from the presentation is that it includes creation tools of some sort. Maybe it wasn't ready for presentation yet (in which case they probably should have waited until later) or maybe the concept just wasn't explainable in the brief time given in such a conference.

In conclusion, i think it's far too early to judge "In Dreams" because we know virtually nothing about it. However, since MM hasn't managed to show any aspect of there being a game yet, i remain sceptical but hopeful (knowing MM's history of making awesome games).


Awesome post, most of which I understood

Couple more questions:

Are the disadvantages such that it's only now with GPUs capable of managing heavy compute loads that this technique is viable for use in games?

Can it be blended with traditional rasterising rendering in hybrid solutions?

Berserker976

Member

Game looks absolutely stunning, especially in motion. Seems like Paris Games Week is when we'll be getting the more meaty information on it though.

But to be honest, with those graphics I would be perfectly ok with it just being a set of creation tools for weird, dreamlike vignettes.


plasmawave

Banned

Allovermahboday.

All over my face.


Are the disadvantages such that it's only now with GPUs capable of managing heavy compute loads that this technique is viable for use in games?

I suppose partially, yes, you'd need quite programmable GPUs with a fair bit of ALU to do this with complex scenes at high res. And that's still with lots of clever stuff to make it go fast...a more naive implementation of tracing complex sdf-defined scenes would be super demanding.

I think as big a barrier is just how ingrained triangle based pipelines are. Media Molecule has a luxury in this game of being able to say, 'all our assets will be made in-game out of these signed distance fields' - that's part of the idea of the game, creating everything in-game and allowing users to use all the same tools you do. But if you went in a normal (

And on the render side, obviously triangle rasterisation and shading has just become very efficient.

And various other building blocks of games and pieces of middleware have gravitated around this assumption of triangle meshes. Like physics engines, most notably. Thus if you wanted to go with a distance function representation for rendering you'll either have to swallow the complexity of maintaining two representations for all your geometry, or roll your own physics engine that works just with distance fields/functions. (I think MM may well be doing the latter).

Can it be blended with traditional rasterising rendering in hybrid solutions?

Yep. In the end the evaluation of distance fields/functions will work out as a depth value from the observer. So you could blend the results with a traditional depth buffer that polygonal geometry has been rendered into. As mentioned, UE4 now lets you generate and keep a distance field representation of geometry for ray traced shadowing. Other games in the past have used ray marching of functions for spot effects in one particular part of a scene (e.g. Perlin-noise-based volumetric clouds).
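A minimal sketch of that blend: treat the distance along each pixel's ray where the march hit the surface as that pixel's depth, and run an ordinary per-pixel depth test against the rasterised layer. It assumes both layers store depth in the same space (plain linear distance here), with `math.inf` for rays that missed; the function name is hypothetical. On a GPU you would typically write the marched depth from the shader instead (for example `gl_FragDepth` in GLSL or `SV_Depth` in HLSL), after converting it to the depth buffer's own encoding, and let the hardware depth test do the rest.

```python
import math


def composite(raster_color, raster_depth, sdf_color, sdf_depth):
    """Per-pixel depth test between a rasterised layer and a ray-marched SDF layer.

    All four inputs are flat per-pixel lists; smaller depth = closer to the camera.
    """
    out = []
    for rc, rd, sc, sd in zip(raster_color, raster_depth, sdf_color, sdf_depth):
        out.append(sc if sd < rd else rc)  # the nearest surface wins
    return out


# Pixel 0: the SDF surface is in front; pixel 1: the ray missed (infinite depth).
print(composite(["mesh", "mesh"], [5.0, 2.0], ["sdf", "sdf"], [3.0, math.inf]))
# ['sdf', 'mesh']
```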

I just noticed: for those eager for some more bubble analysis, try watching the conference, because there are a couple more bubbles at the end shown on the peripheral screens.

https://www.youtube.com/watch?v=tMnXzpBPToI&t=36m26s

1080p that baby

New stuff I can see :

* Some guy sitting in a room; doesn't have that dreamlike brush stroke, looks like it has a much softer visual style with straight lines

* Close-up of a flower gently opening its petals

* Some kind of cloudy environmental storm representation, cool effects

* Train tracks going to the horizon, nice mystical feeling

* Wand casting magic in an alley from an FPS view, nice light on buildings

* Bird (crow) floating on water (ripples around it)

* Some desolate, ruined, post-apocalyptic-looking environment

* Humanoid puppet person-a-thing doing a Sackboy kind of jump on a sofa (the landing is a bit weird though, because there is no animation when the character lands, no bounciness of limbs, just a full stop)

* Someone playing drums in a living room, beautiful animation all around

* A girl with pinkish hair inside a house looks at the blue avatar cloud

* Some weird purple horse face from front view

* Disco-like blocks

* Sculpture of a deer

* Lush and green nature

* Some kind of hypnotic mask

* Head of a huge sculpture

* and more stuff which I can't decipher


Callibretto

Member

This is worth a re-watch.

https://youtu.be/MtY12ziHuII?t=3m10s especially from 3:10 onward.

Not just for the 3D modelling but the "futuristic (3D) interface."

I can imagine this is really cool in VR, as in you're directly interacting with the object as if you're touching it in front of your eyes.

I imagine doing it in front of a TV screen could take some time getting used to, since your hand is sculpting air while your eyes are looking at the screen. In VR, you'd see your virtual hand with 1:1 movement directly interacting with the 3D object in front of you. You'd be "touching" the 3D object directly, which could make creating objects far easier.

Ahmad_742009

Member

This is going to be one of the most unique games in history. I'm sure MM will handle this.