Sorry, I got a technicality from the '90s wrong, from when I was a kid. It had nothing to do with gaming; it was about the broadcast standards of the '90s.

Patched in without frame rate issues = work and optimisation, which is great to see

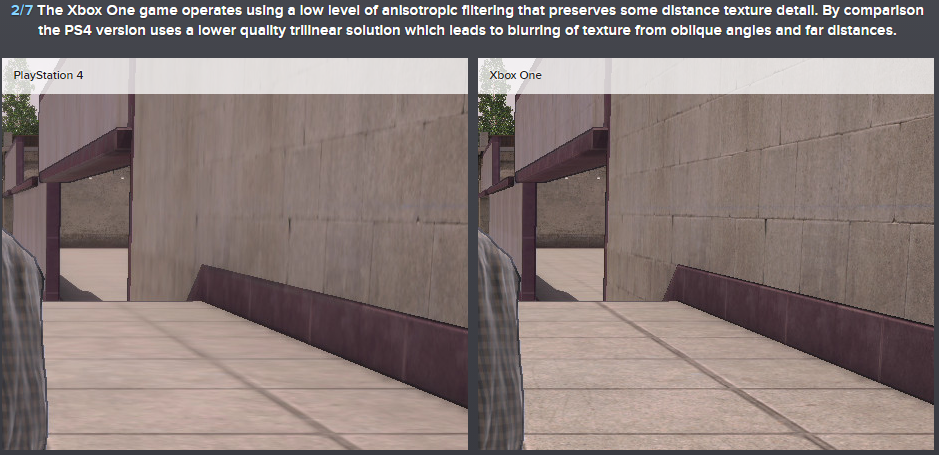

XB1 AF issues = Well, the eSRAM is only 32 MB, and it has to handle the frame buffer. Developers may not use the eSRAM that way and instead attempt to stream between the GPU and DDR, which wouldn't be great at all. It depends on how their engine was designed.
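
For a rough sense of scale, here is a back-of-the-envelope sketch of how quickly render targets eat into 32 MB. The 1080p resolution, the 4 bytes per pixel, and the number of targets are illustrative assumptions, not figures from any particular game or engine.

```python
# Rough budget check: how much of a 32 MB eSRAM pool do render targets use?
# Resolution, bytes per pixel, and target count are assumptions for illustration.

ESRAM_BYTES = 32 * 1024 * 1024  # 32 MB, the figure quoted in the post


def render_target_bytes(width, height, bytes_per_pixel):
    """Size of one uncompressed render target in bytes."""
    return width * height * bytes_per_pixel


def fits_in_esram(targets):
    """Return (total_bytes, fits) for a list of (width, height, bpp) targets."""
    total = sum(render_target_bytes(w, h, bpp) for (w, h, bpp) in targets)
    return total, total <= ESRAM_BYTES


# Hypothetical deferred setup: five 1080p targets at 4 bytes per pixel
# (for example colour, depth, and three G-buffer layers).
targets_1080p = [(1920, 1080, 4)] * 5
total, fits = fits_in_esram(targets_1080p)
print(f"{total / 2**20:.1f} MiB needed, fits in eSRAM: {fits}")
```

Under these assumptions a single 1080p target is about 7.9 MiB, so four of them just fit and a fifth does not. That leaves little or no room for textures, which would then have to be read from main memory.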

Evidence of my quote = Well, it's still happening in games. People don't just 'forget'.

Why do people take things so defensively? I was just posting an idea of what I think it could be. The bandwidth will take a large percentage hit when the CPU requires access. AF needs a lot of bandwidth.

It doesn't affect PC because modern GPUs have a full memory pool, similar to the PS4, which doesn't need to share a bus with the CPU.

It just makes sense to me. I'm happy to be proven wrong, since this is a discussion and I like learning.

This is a tech thread, and your views are welcome here, like in any other thread. People who just go out on a limb and say others don't know what they're talking about (because they disagree) are just displaying bad forum etiquette.

I will say this: I don't see it your way. Certain enhancements were made to the PS4's pipeline to improve communication between the GPU, CPU and memory. One of them is an extra bus on the PS4 GPU that allows reading and writing directly to system memory. As much as 20 GB/s can flow through that bus, which is more than PCIe offers on many PCs, apart from maybe PCIe 3.0.
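
To put the 20 GB per second figure next to PCIe, here is a small sketch that derives theoretical per-direction PCIe bandwidth from the per-lane transfer rate and line encoding. It assumes a full x16 slot and ignores packet and protocol overhead, so real-world throughput is lower. The function and constant names are made up for this example.

```python
# Theoretical per-direction PCIe bandwidth, ignoring protocol overhead.
# Each entry: (transfer rate per lane in GT/s, line-encoding efficiency).
PCIE_GENERATIONS = {
    "1.x": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}

PS4_EXTRA_BUS_GB_S = 20.0  # figure quoted in the post, not independently verified


def pcie_gb_per_s(generation, lanes=16):
    """Theoretical one-way bandwidth in GB/s for a given generation and lane count."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # One transfer carries one bit per lane; divide by 8 to convert bits to bytes.
    return rate_gt_s * efficiency * lanes / 8


for gen in PCIE_GENERATIONS:
    bandwidth = pcie_gb_per_s(gen)
    verdict = "below" if bandwidth < PS4_EXTRA_BUS_GB_S else "at or above"
    print(f"PCIe {gen} x16: {bandwidth:.2f} GB/s ({verdict} the quoted 20 GB/s)")
```

On these theoretical numbers, PCIe 2.0 x16 comes out at 8 GB/s and PCIe 3.0 x16 at roughly 15.75 GB/s in each direction. PCIe is full duplex, so the combined two-way figure for 3.0 x16 is higher than 20 GB/s, and which comparison is fair depends on how the 20 GB/s number is counted.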

There are further enhancements, but those are more or less related to asynchronous compute. The point is, AF is not as resource-intensive as you're implying. Besides, GDDR5 is much faster than DDR3 and better suited to games in the first place. The fact that the PS4 has a better GPU with better memory is enough to give it the logical advantage in all things GPU-related, which includes AF.

The fact that some PS4 games ship without AF points to devs not implementing it, whether from lack of awareness (a polite way of putting it) or the lack of an automatic implementation or default preset in the SDK. Either cause, or a combination of the two, could apply, and none of it has anything to do with the hardware.

In any case, thanks for your contribution. It's welcome here.

We already have hindsight on this through Ninja Theory.

The lack of AF on PS4 did not make it to QA. They handled the gameplay revisions, while another developer handled porting the game to PS4 and Xbox One.

They noticed the problem after shipping the game and went back to fix it. That took about 2 weeks, and it did require testing. Then it took another week for Sony approval.

Then we got a great x8 AF patch with no performance impact.

But it's not as easy as turning it on or off; it still requires testing.

So rather than an on/off switch, it's a matter of getting AF to work with decent results without hurting performance, which isn't the hardest thing to do on a finished game when a working version already exists on weaker hardware.

I don't think this proves your point (that it's more complicated to implement). Every patch requires testing by Sony, so the time it takes to get one approved says nothing about how long the dev team spent on the work itself. Also, the quick submission of AF patches for many PS4 games suggests that it's a very trivial implementation if you care to do it.